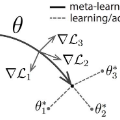

Recent work on Fine-Grained Sketch-Based Image Retrieval (FG-SBIR) has shifted towards generalising a model to new categories without any training data from them. In real-world applications, however, a trained FG-SBIR model is often applied both to new categories and to different human sketchers, i.e., different drawing styles. Although this complicates the generalisation problem, a handful of examples are typically available, enabling the model to adapt to the new category/style. In this paper, we offer a novel perspective: instead of asking for a model that generalises, we advocate for one that adapts quickly, using only a few samples at test time (in a few-shot manner). To solve this new problem, we introduce a novel model-agnostic meta-learning (MAML) based framework with several key modifications: (1) Because FG-SBIR is a retrieval task trained with a margin-based contrastive loss, we simplify the MAML training in the inner loop to make it more stable and tractable. (2) The margin in our contrastive loss is meta-learned together with the rest of the model. (3) Three additional regularisation losses are introduced in the outer loop to make the meta-learned FG-SBIR model more effective for category/style adaptation. Extensive experiments on public datasets suggest a large gain over generalisation and zero-shot based approaches, and over a few strong few-shot baselines.
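To make the adapt-then-evaluate idea concrete, here is a minimal sketch of one meta-learning step with a margin-based triplet loss whose margin is updated in the outer loop. It is an illustration under stated assumptions, not the method from the paper: the element-wise `embed` function stands in for a deep network, the outer update uses a first-order approximation (gradients are taken at the adapted weights only), and the paper's inner-loop simplification and three regularisation losses are not reproduced. All function and variable names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vec = List[float]
Triplet = Tuple[Vec, Vec, Vec]  # (sketch, matching photo, non-matching photo)
Task = Tuple[Sequence[Triplet], Sequence[Triplet]]  # (support set, query set)


def embed(w: Vec, x: Vec) -> Vec:
    """Toy embedding (assumption): element-wise scaling instead of a network."""
    return [wi * xi for wi, xi in zip(w, x)]


def sq_dist(u: Vec, v: Vec) -> float:
    return sum((ui - vi) ** 2 for ui, vi in zip(u, v))


def triplet_loss(w: Vec, margin: float, t: Triplet) -> float:
    a, p, n = (embed(w, x) for x in t)
    return max(0.0, margin + sq_dist(a, p) - sq_dist(a, n))


def triplet_grads(w: Vec, margin: float, t: Triplet) -> Tuple[Vec, float]:
    """Analytic gradients of the hinge loss w.r.t. the weights and the margin."""
    if triplet_loss(w, margin, t) <= 0.0:
        return [0.0] * len(w), 0.0  # hinge inactive: no gradient
    a, p, n = t
    g_w = [2.0 * wi * ((ai - pi) ** 2 - (ai - ni) ** 2)
           for wi, ai, pi, ni in zip(w, a, p, n)]
    return g_w, 1.0


def adapt(w: Vec, margin: float, support: Sequence[Triplet],
          inner_lr: float, steps: int = 1) -> Vec:
    """Inner loop: a few gradient steps on the support triplets of one task."""
    w = list(w)
    for _ in range(steps):
        g = [0.0] * len(w)
        for t in support:
            g_w, _ = triplet_grads(w, margin, t)
            g = [gi + gwi / len(support) for gi, gwi in zip(g, g_w)]
        w = [wi - inner_lr * gi for wi, gi in zip(w, g)]
    return w


def meta_step(w: Vec, margin: float, tasks: Sequence[Task],
              inner_lr: float = 0.1, outer_lr: float = 0.1) -> Tuple[Vec, float]:
    """Outer loop (first-order): update w and margin from query-set gradients."""
    g_w_total = [0.0] * len(w)
    g_m_total = 0.0
    for support, query in tasks:
        w_fast = adapt(w, margin, support, inner_lr)
        scale = 1.0 / (len(tasks) * len(query))
        for t in query:
            g_w, g_m = triplet_grads(w_fast, margin, t)
            g_w_total = [gt + scale * gi for gt, gi in zip(g_w_total, g_w)]
            g_m_total += scale * g_m
    new_w = [wi - outer_lr * gi for wi, gi in zip(w, g_w_total)]
    new_margin = max(0.0, margin - outer_lr * g_m_total)  # keep margin non-negative
    return new_w, new_margin


if __name__ == "__main__":
    triplet: Triplet = ([1.0, 0.0], [1.0, 1.0], [0.0, 0.0])
    w0, m0 = [1.0, 1.0], 1.0
    print("loss before adaptation:", triplet_loss(w0, m0, triplet))
    w_fast = adapt(w0, m0, [triplet], inner_lr=0.1)
    print("loss after adaptation: ", triplet_loss(w_fast, m0, triplet))
    print("meta-updated (w, margin):", meta_step(w0, m0, [([triplet], [triplet])]))
```

One thing this toy makes visible: with a plain hinge loss, the gradient with respect to the margin is 1 whenever the hinge is active, so unregularised gradient descent can only shrink the margin. The sketch clamps it at zero purely as a safeguard; how the paper constrains the meta-learned margin is not specified in the abstract.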
