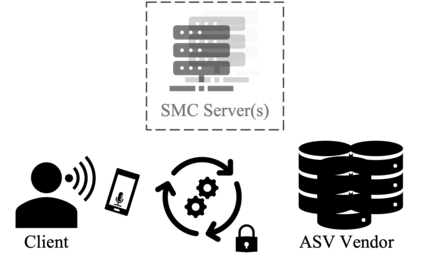

The development of privacy-preserving automatic speaker verification systems has been the focus of a number of studies, with the aim of allowing users to authenticate themselves without compromising the privacy of their voice. However, current privacy-preserving methods assume that the template voice representations (or speaker embeddings) used for authentication are extracted locally by the user. This raises two important issues. First, knowledge of the speaker embedding extraction model may create security and robustness liabilities for the authentication system, since it might help attackers craft adversarial examples able to mislead the system. Second, from the point of view of a service provider, the speaker embedding extraction model is arguably one of the most valuable components of the system, so disclosing it would be highly undesirable. In this work, we show how speaker embeddings can be extracted while keeping both the speaker's voice and the service provider's model private, using Secure Multiparty Computation. Further, we show that it is possible to obtain reasonable trade-offs between security and computational cost. This work is complementary to prior work showing how authentication itself may be performed privately, and can thus be considered another step towards fully private automatic speaker recognition.
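As a rough illustration of the general idea, and not the authors' protocol, the sketch below evaluates a single linear layer W·x under two-party additive secret sharing with Secure Multiparty Computation. The client secret-shares its voice features x, the service provider secret-shares its weights W, and neither party sees the other's values. Products of shared values use Beaver multiplication triples, which here come from a hypothetical trusted dealer. The ring size Q = 2^64, the integer-quantized toy features and weights, and all function names are assumptions made for illustration.

```python
import secrets

Q = 2**64  # ring modulus for additive shares (illustrative choice)


def share(value):
    """Split an integer into two additive shares modulo Q."""
    r = secrets.randbelow(Q)
    return r, (value - r) % Q


def reconstruct(s0, s1):
    """Recombine two shares and map the result back to a signed integer."""
    v = (s0 + s1) % Q
    return v - Q if v >= Q // 2 else v


def beaver_triple():
    """Hypothetical trusted dealer: shares of random a, b and c = a*b mod Q."""
    a, b = secrets.randbelow(Q), secrets.randbelow(Q)
    return share(a), share(b), share(a * b % Q)


def secure_mul(x_sh, y_sh):
    """Multiply two secret-shared values using one Beaver triple."""
    (a0, a1), (b0, b1), (c0, c1) = beaver_triple()
    # Both parties open the masked differences d = x - a and e = y - b;
    # these reveal nothing about x or y because a and b are uniformly random.
    d = (x_sh[0] - a0 + x_sh[1] - a1) % Q
    e = (y_sh[0] - b0 + y_sh[1] - b1) % Q
    # x*y = c + d*b + e*a + d*e; only party 0 adds the public term d*e.
    z0 = (c0 + d * b0 + e * a0 + d * e) % Q
    z1 = (c1 + d * b1 + e * a1) % Q
    return z0, z1


def secure_linear(w_sh, x_sh):
    """Compute shares of W @ x when both W and x are secret-shared."""
    out = []
    for row in w_sh:
        acc0, acc1 = 0, 0
        for w_ij, x_j in zip(row, x_sh):
            p0, p1 = secure_mul(w_ij, x_j)
            # Adding shared values is local: each party adds its own shares.
            acc0, acc1 = (acc0 + p0) % Q, (acc1 + p1) % Q
        out.append((acc0, acc1))
    return out


# Toy client features (private to the user) and layer weights (private to the provider).
features = [3, -1, 4]
weights = [[2, 0, -1], [1, 5, 2]]
x_sh = [share(v) for v in features]
w_sh = [[share(v) for v in row] for row in weights]
y = [reconstruct(*s) for s in secure_linear(w_sh, x_sh)]
print(y)  # [2, 6]
```

Only the final layer output is reconstructed here. A full embedding extractor would keep intermediate activations secret-shared between layers and would also need protocols for non-linear layers and fixed-point truncation. This sketch omits those protocols, which are usually among the most expensive parts of SMC-based neural-network inference.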
