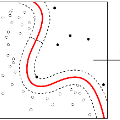

Medical anomaly detection is a crucial yet challenging task that aims to recognize abnormal images to assist diagnosis. Because annotating abnormal images is costly, most methods use only known normal images during training and, at test time, flag samples that deviate from the normal profile as anomalies. The many readily available unlabeled images that contain anomalies are thus ignored during training, which limits performance. To address this problem, we introduce one-class semi-supervised learning (OC-SSL), which uses both known normal and unlabeled images for training, and propose Dual-distribution Discrepancy for Anomaly Detection (DDAD) based on this setting. Ensembles of reconstruction networks are designed to model the distribution of normal images and the distribution of both normal and unlabeled images, yielding the normative distribution module (NDM) and the unknown distribution module (UDM), respectively. The intra-discrepancy of the NDM and the inter-discrepancy between the two modules are then used as anomaly scores. Furthermore, we propose a new perspective on self-supervised learning, in which it is designed to refine the anomaly scores rather than to detect anomalies directly. Five medical datasets, covering chest X-rays, brain MRIs and retinal fundus images, are organized as benchmarks for evaluation. Experiments on these benchmarks comprehensively compare a wide range of anomaly detection methods and demonstrate that our method achieves significant gains and outperforms state-of-the-art methods. Code and organized benchmarks are available at https://github.com/caiyu6666/DDAD-ASR.
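The two discrepancy scores can be illustrated with a minimal NumPy sketch. The abstract does not give the exact formulas, so this is one plausible reading and not the released implementation. It assumes the intra-discrepancy is the pixel-wise standard deviation across the NDM ensemble's reconstructions, and the inter-discrepancy is the absolute difference between the mean reconstructions of the two ensembles. The function name `discrepancy_scores`, the `(K, H, W)` array layout, and the image-level averaging are hypothetical choices made for illustration.

```python
import numpy as np


def discrepancy_scores(ndm_recons, udm_recons):
    """Compute illustrative intra- and inter-discrepancy anomaly scores.

    ndm_recons: reconstructions of one image from the K networks trained on
                normal images only, shape (K, H, W).
    udm_recons: reconstructions of the same image from the K networks trained
                on normal plus unlabeled images, shape (K, H, W).

    Assumption (not stated in the abstract): intra-discrepancy is the
    pixel-wise standard deviation within the NDM ensemble, and
    inter-discrepancy is the pixel-wise absolute difference between the
    two ensemble means.
    """
    ndm = np.asarray(ndm_recons, dtype=float)
    udm = np.asarray(udm_recons, dtype=float)

    # Disagreement among NDM members: they are expected to agree on normal
    # regions and to diverge on regions they never saw during training.
    intra_map = ndm.std(axis=0)

    # Disagreement between the two modules' average reconstructions.
    inter_map = np.abs(ndm.mean(axis=0) - udm.mean(axis=0))

    # Image-level scores: average each pixel-wise map (a hypothetical choice).
    return {
        "intra_map": intra_map,
        "inter_map": inter_map,
        "intra_score": float(intra_map.mean()),
        "inter_score": float(inter_map.mean()),
    }


# Toy example with 2 networks per module and a 2x2 "image".
ndm_toy = np.stack([np.zeros((2, 2)), np.full((2, 2), 2.0)])
udm_toy = np.stack([np.full((2, 2), 4.0), np.full((2, 2), 4.0)])
toy_scores = discrepancy_scores(ndm_toy, udm_toy)
```

In this toy case the NDM members disagree by a constant amount at every pixel and the UDM mean is offset from the NDM mean, so both scores are non-zero. With real networks, either map could also be thresholded or visualized for localization.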
