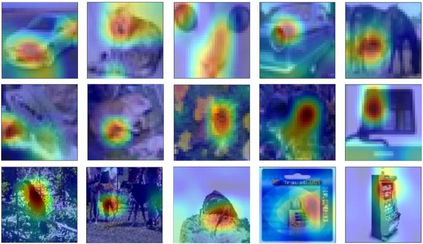

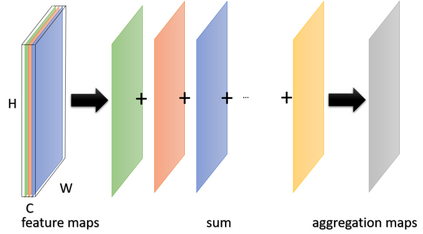

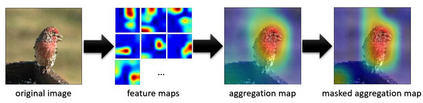

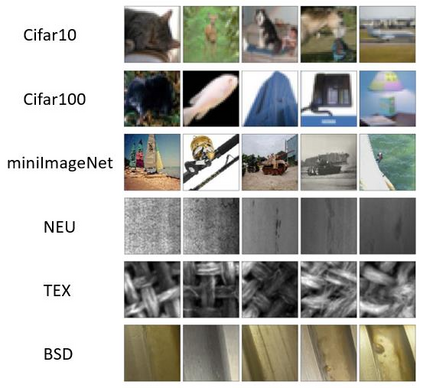

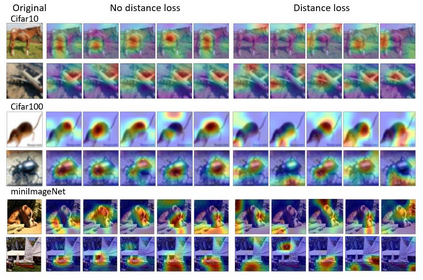

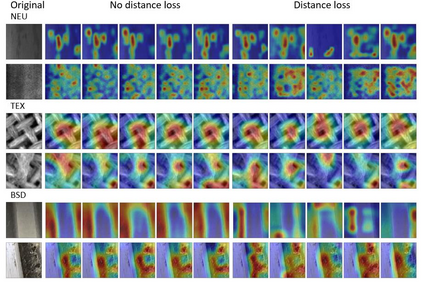

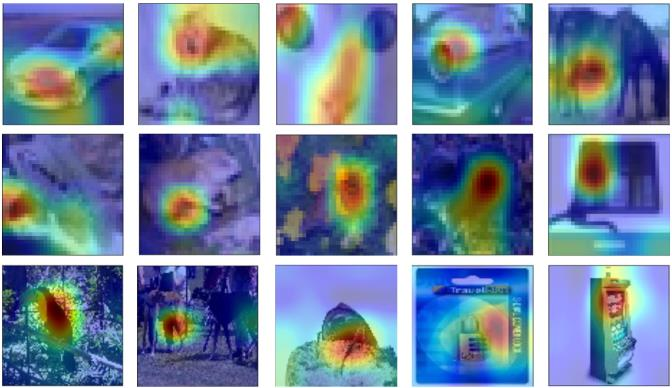

Ensembles of convolutional neural networks (CNNs) have shown remarkable results in learning discriminative semantic features for image classification. However, the models in an ensemble often concentrate on similar regions of an image. This work proposes a novel method that forces a set of base models to learn different features for a classification task. The models are then combined in an ensemble to make a collective classification. The key finding is that forcing the models to concentrate on different features increases classification accuracy. To learn different feature concepts, a so-called feature distance loss is applied to the feature maps. Experiments on benchmark convolutional neural networks (VGG16, ResNet, AlexNet), popular datasets (CIFAR-10, CIFAR-100, miniImageNet, NEU, BSD, TEX), and different numbers of training samples (3, 5, 10, 20, 50, 100 per class) show the effectiveness of the proposed feature distance loss. The proposed method outperforms classical ensemble versions of the base models. Class Activation Maps show explicitly that the models learn different feature concepts. The code is available at: https://github.com/2Obe/Feature-Distance-Loss.git
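The abstract does not give the exact form of the feature distance loss, so the following is only a minimal sketch of the general idea, not the authors' implementation. It assumes that each model's feature maps are averaged over channels, L2-normalised, and compared by Euclidean distance, and that the negative mean pairwise distance is added to the classification loss with a weighting factor. All function names and the `weight` parameter are hypothetical.

```python
import math
from itertools import combinations


def channel_mean_map(features):
    """Average a C x H x W feature tensor (nested lists) over channels
    and return the result as a flat list of H*W values."""
    n_channels = len(features)
    height, width = len(features[0]), len(features[0][0])
    return [
        sum(features[c][i][j] for c in range(n_channels)) / n_channels
        for i in range(height)
        for j in range(width)
    ]


def l2_normalize(vec, eps=1e-12):
    """Scale a vector to (approximately) unit Euclidean length."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / (norm + eps) for v in vec]


def feature_distance(features_a, features_b):
    """Assumed distance: Euclidean distance between the normalised,
    channel-averaged activation maps of two models."""
    a = l2_normalize(channel_mean_map(features_a))
    b = l2_normalize(channel_mean_map(features_b))
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def diversity_penalty(features_per_model):
    """Negative mean pairwise distance. Minimising this term pushes
    the models' activation maps apart."""
    pairs = list(combinations(features_per_model, 2))
    mean_dist = sum(feature_distance(a, b) for a, b in pairs) / len(pairs)
    return -mean_dist


def total_loss(classification_loss, features_per_model, weight=0.1):
    """Hypothetical combined objective: task loss plus weighted penalty."""
    return classification_loss + weight * diversity_penalty(features_per_model)


def ensemble_predict(probs_per_model):
    """Collective classification by averaging class probabilities."""
    n_models = len(probs_per_model)
    n_classes = len(probs_per_model[0])
    mean_probs = [
        sum(p[k] for p in probs_per_model) / n_models for k in range(n_classes)
    ]
    return max(range(n_classes), key=mean_probs.__getitem__)
```

In this sketch, two models that activate on the same image region get a distance near zero and therefore no reward, while models that activate on disjoint regions get the largest distance. A real training setup would compute the same quantities on framework tensors so that gradients flow back into each base model.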
