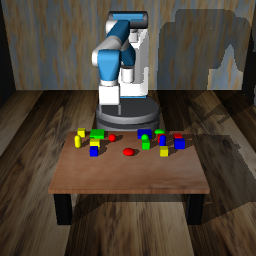

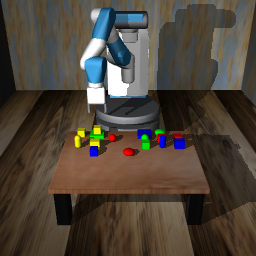

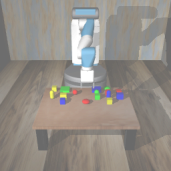

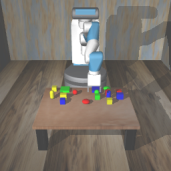

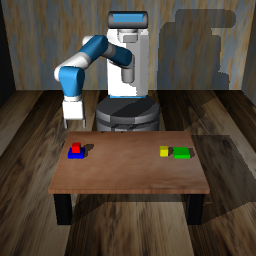

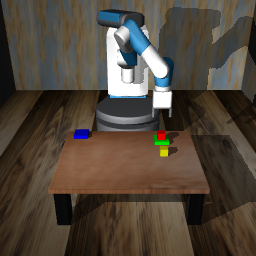

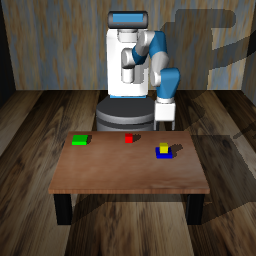

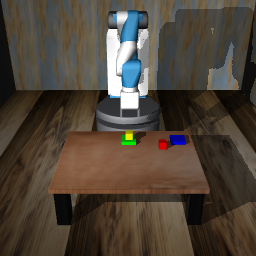

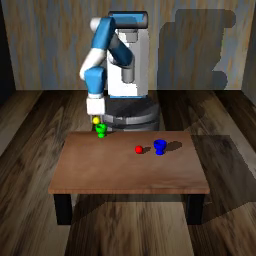

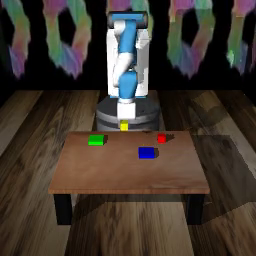

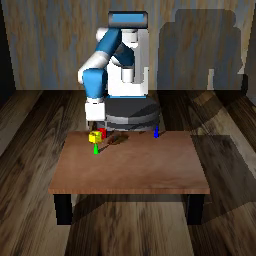

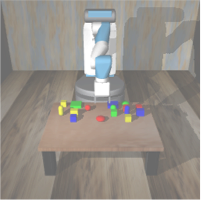

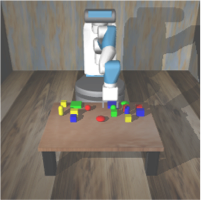

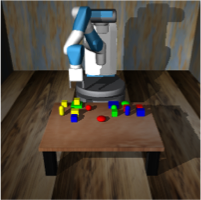

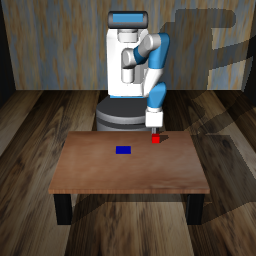

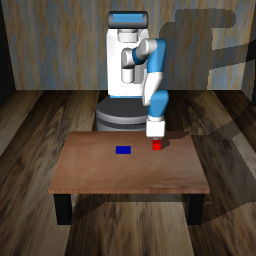

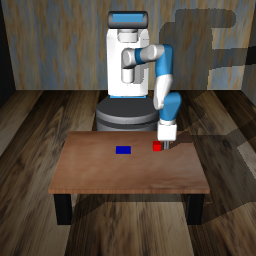

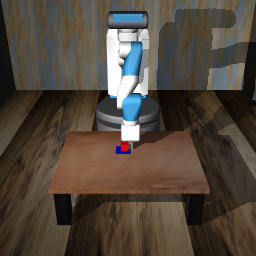

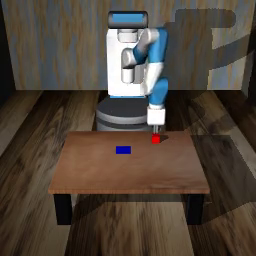

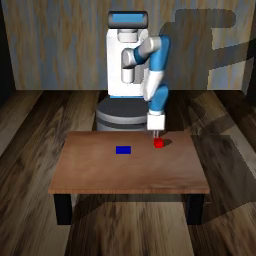

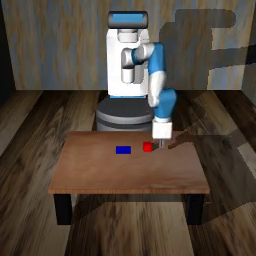

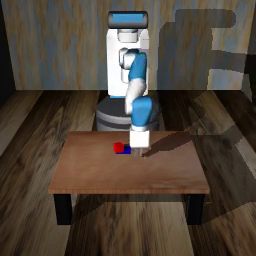

Visuomotor control (VMC) is an effective means of achieving basic manipulation tasks such as pushing or pick-and-place from raw images. Conditioning VMC on desired goal states is a promising way of achieving versatile skill primitives. However, common conditioning schemes rely either on task-specific fine-tuning, e.g. one-shot imitation learning (IL), or on sampling approaches that use a forward model of scene dynamics, i.e. model-predictive control (MPC), which severely limits deployability and planning horizon. In this paper, we propose a conditioning scheme that avoids these pitfalls by learning the controller and its conditioning in an end-to-end manner. Our model predicts complex action sequences directly from a dynamic image representation of the robot motion and the distance to a given target observation. In contrast to related work, this enables our approach to efficiently perform complex manipulation tasks from raw image observations without predefined control primitives or test-time demonstrations. We report significant improvements in task success over representative MPC and IL baselines. We also demonstrate our model's generalisation capabilities in challenging, unseen tasks featuring visual noise, cluttered scenes and unseen object geometries.
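The abstract does not say how the dynamic image of the robot motion is built. A common construction, assumed here and not confirmed by the abstract, is the approximate rank pooling of Bilen et al. (2016), which collapses a clip of T frames into a single image as a fixed weighted sum of the frames. The sketch below illustrates only that pooling step, with frames given as flattened lists of pixel values; the function names are hypothetical, and the goal-distance input and the controller itself are not modelled.

```python
from typing import List, Sequence


def harmonic(n: int) -> float:
    """Harmonic number H_n = sum_{i=1}^{n} 1/i, with H_0 = 0."""
    return sum(1.0 / i for i in range(1, n + 1))


def rank_pooling_coefficients(num_frames: int) -> List[float]:
    """Approximate rank pooling weights (assumed formulation, Bilen et al. 2016).

    alpha_t = 2(T - t + 1) - (T + 1)(H_T - H_{t-1}),  t = 1..T.
    The weights sum to zero, so a static clip maps to an all-zero image.
    """
    T = num_frames
    H_T = harmonic(T)
    return [
        2.0 * (T - t + 1) - (T + 1) * (H_T - harmonic(t - 1))
        for t in range(1, T + 1)
    ]


def dynamic_image(frames: Sequence[Sequence[float]]) -> List[float]:
    """Collapse T equally sized frames (flattened pixels) into one dynamic image."""
    if not frames:
        raise ValueError("need at least one frame")
    size = len(frames[0])
    if any(len(f) != size for f in frames):
        raise ValueError("all frames must have the same number of pixels")
    alphas = rank_pooling_coefficients(len(frames))
    # Each output pixel is the alpha-weighted sum of that pixel over time.
    return [
        sum(a * frame[p] for a, frame in zip(alphas, frames))
        for p in range(size)
    ]
```

For example, `dynamic_image([[0.0, 1.0], [0.5, 1.0], [1.0, 1.0]])` returns approximately `[1.0, 0.0]`: the pixel that brightens over time gets a positive response, while the constant pixel cancels out because the weights sum to zero. This is what makes such a representation encode motion rather than static appearance.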

翻译:维苏莫托控制(VMC)是完成基本操作性任务的有效手段,例如从原始图像中推动或取出或取出,从原始图像中取出。在理想的目标状态上给VMC提供条件,是取得多才多艺原始技术的一个很有希望的方法。然而,常见的调节方案要么依靠任务特定的微调,例如使用一发模仿学习(IL),要么依靠使用先发制人模拟预测(MPC),或者利用先发制人图像动态模型(即模型预测控制(MPC))的先期模型取样方法,使可部署性和规划视野受到严重限制。在本文中,我们提出了一个调制方案,通过学习控制器及其端到端的调节来避免这些陷阱。我们的模型预测了基于机器人运动动态图像的复杂动作序列和与特定目标观测的距离。与相关工程相比,这使我们能够在原始图像观测中高效地完成复杂的操作任务,而没有预先定义控制原始原始控制或测试时间演示。我们报告在具有代表性的MPC和IL基线上的任务成功程度显著提高。我们还展示了我们的模型在挑战性、以视觉噪音、封闭场景象和视觉物体为目的的无形物体的目中展示方面的概括能力。