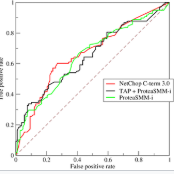

A basic task in explainable AI (XAI) is to identify the most important features behind a prediction made by a black box function $f$. The insertion and deletion tests of Petsiuk et al. (2018) are used to judge the quality of algorithms that rank pixels from most to least important for a classification. Motivated by regression problems, we establish a formula for their area under the curve (AUC) criteria in terms of certain main effects and interactions in an anchored decomposition of $f$. We find an expression for the expected value of the AUC under a random ordering of inputs to $f$ and propose an alternative criterion for the regression setting: the area above a straight line. We use this criterion to compare feature importances computed by integrated gradients (IG) to those computed by Kernel SHAP (KS), as well as by LIME, DeepLIFT, vanilla gradient and input$\times$gradient methods. KS has the best overall performance on the two datasets we consider, but it is very expensive to compute. We find that IG is nearly as good as KS while being much faster. Our comparison problems include some binary inputs, which pose a challenge to IG because it must use values between the possible variable levels, so we consider ways to handle binary variables in IG. We show that sorting variables by their Shapley value does not necessarily give the optimal ordering for an insertion-deletion test. It does, however, give the optimal ordering for monotone functions of additive models, such as logistic regression.
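To make the insertion test and the Shapley-ordering claim concrete, here is a minimal Python sketch under stated assumptions. It is not the authors' code and does not reproduce their anchored-decomposition formulas. The assumptions are ours: the insertion curve starts at a baseline point `z` and replaces coordinates with those of `x` one at a time; the AUC uses the trapezoid rule with the horizontal axis rescaled to $[0, 1]$; "area above a straight line" is read as the AUC minus the area under the chord joining the curve's two endpoints; Shapley values are baseline Shapley values anchored at `z`; expectations and optima come from brute-force enumeration, so this only suits small $d$. All function names and the two toy models (`or_model`, `additive_model`) are hypothetical. `expected_auc_by_subsets` reflects our own observation that, by linearity of expectation, the random-order mean needs only the $2^d$ subset averages rather than all $d!$ orderings.

```python
from itertools import combinations, permutations
from math import factorial


def insertion_curve(f, x, z, order):
    """y_0..y_d: f as features of x replace baseline z, one at a time, in `order`."""
    point = list(z)
    ys = [f(point)]
    for j in order:
        point[j] = x[j]
        ys.append(f(point))
    return ys


def trapezoid_auc(ys):
    """Trapezoid-rule area under y_0..y_d with the x-axis rescaled to [0, 1]."""
    d = len(ys) - 1
    return (sum(ys) - (ys[0] + ys[-1]) / 2) / d


def area_above_line(ys):
    """Assumed reading: AUC minus the area under the chord from (0, y_0) to (1, y_d)."""
    return trapezoid_auc(ys) - (ys[0] + ys[-1]) / 2


def hybrid(f, x, z, subset):
    """f evaluated at x on `subset` and at the baseline z elsewhere."""
    return f([x[j] if j in subset else z[j] for j in range(len(x))])


def expected_auc_random_order(f, x, z):
    """Mean AUC over all d! orderings (brute force, small d only)."""
    aucs = [trapezoid_auc(insertion_curve(f, x, z, p)) for p in permutations(range(len(x)))]
    return sum(aucs) / len(aucs)


def expected_auc_by_subsets(f, x, z):
    """Same mean via linearity: E[y_k] is the average of f over all size-k subsets."""
    d = len(x)
    means = []
    for k in range(d + 1):
        vals = [hybrid(f, x, z, set(s)) for s in combinations(range(d), k)]
        means.append(sum(vals) / len(vals))
    return trapezoid_auc(means)


def shapley_values(f, x, z):
    """Exact baseline Shapley values with v(S) = f(x_S, z_-S), by enumeration."""
    d = len(x)
    phi = []
    for j in range(d):
        others = [k for k in range(d) if k != j]
        total = 0.0
        for size in range(d):
            weight = factorial(size) * factorial(d - size - 1) / factorial(d)
            for s in combinations(others, size):
                total += weight * (hybrid(f, x, z, set(s) | {j}) - hybrid(f, x, z, set(s)))
        phi.append(total)
    return phi


def shapley_order_auc(f, x, z):
    """Insertion AUC when features are inserted in decreasing Shapley value."""
    phi = shapley_values(f, x, z)
    order = sorted(range(len(x)), key=lambda j: -phi[j])
    return trapezoid_auc(insertion_curve(f, x, z, order))


def best_insertion_auc(f, x, z):
    """Largest insertion AUC over all orderings (brute force)."""
    return max(trapezoid_auc(insertion_curve(f, x, z, p)) for p in permutations(range(len(x))))


# Hypothetical toy models on binary inputs, explained at x = (1, 1, 1) vs z = (0, 0, 0).
def or_model(p):
    return 1.5 * p[0] + 2.0 * max(p[1], p[2])  # inputs 1 and 2 are redundant


def additive_model(p):
    return 1.5 * p[0] + 1.0 * p[1] - 0.5 * p[2]


x, z = [1, 1, 1], [0, 0, 0]
print(shapley_order_auc(or_model, x, z), best_insertion_auc(or_model, x, z))
print(shapley_order_auc(additive_model, x, z), best_insertion_auc(additive_model, x, z))
```

For `or_model`, inserting features in decreasing Shapley value gives an AUC of 2.25, while the ordering (1, 0, 2) reaches 29/12 ≈ 2.417, a small instance of the non-optimality noted above; for `additive_model` the two agree at 5/3. Swapping the roles of `x` and `z`, as in `insertion_curve(f, z, x, order)`, gives the corresponding deletion curve.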
