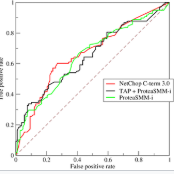

Deep learning has shown superb performance in detecting objects and classifying images, holding great promise for the analysis of medical images. Translating the success of deep learning to medical imaging, where doctors need to understand the underlying process, requires the ability to interpret and explain the predictions of neural networks. Interpretability of deep neural networks often relies on estimating the importance of input features (e.g., pixels) with respect to the outcome (e.g., class probability). However, many importance estimators (also known as saliency maps) have been developed, and it is unclear which of them are most relevant for medical imaging applications. In the present work, we investigated the performance of several importance estimators in explaining the classification of computed tomography (CT) images by a deep convolutional network, using three distinct evaluation metrics. First, model-centric fidelity measures the decrease in model accuracy when certain inputs are perturbed. Second, concordance between importance scores and expert-defined segmentation masks is measured at the pixel level by receiver operating characteristic (ROC) curves. Third, we measure the region-wise overlap between an XRAI-based map and the segmentation mask using the Dice similarity coefficient (DSC). Overall, two versions of SmoothGrad topped the fidelity and ROC rankings, whereas both Integrated Gradients and SmoothGrad excelled in the DSC evaluation. Interestingly, there was a critical discrepancy between model-centric (fidelity) and human-centric (ROC and DSC) evaluation. Expert expectation and intuition embedded in segmentation maps do not necessarily align with how the model arrived at its prediction. Understanding this difference in interpretability would help harness the power of deep learning in medicine.
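To make the two estimator families named above concrete, here is a minimal pure-Python sketch of SmoothGrad and Integrated Gradients. It is not the authors' implementation: the "network" is a hypothetical logistic unit (`W`, `B`) whose gradient is known analytically, and the noise level `sigma`, sample count `n`, number of path `steps`, and all-zero baseline are illustrative assumptions. SmoothGrad averages input gradients over noisy copies of the input; Integrated Gradients multiplies the input-minus-baseline difference by the gradient averaged along the straight path from the baseline to the input.

```python
import math
import random
from typing import Callable, List

Vector = List[float]

# Hypothetical toy "network": a single logistic unit with fixed weights.
W: Vector = [1.5, -2.0, 0.5]
B = 0.1


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def model(x: Vector) -> float:
    """Class probability for input x."""
    return sigmoid(sum(w * xi for w, xi in zip(W, x)) + B)


def grad(x: Vector) -> Vector:
    """Analytic gradient of model(x) with respect to each input feature."""
    s = model(x)
    return [s * (1.0 - s) * w for w in W]


def smoothgrad(x: Vector, grad_fn: Callable[[Vector], Vector],
               n: int = 50, sigma: float = 0.15, seed: int = 0) -> Vector:
    """Average the gradient over n copies of x with Gaussian noise added."""
    rng = random.Random(seed)
    total = [0.0] * len(x)
    for _ in range(n):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        total = [t + g for t, g in zip(total, grad_fn(noisy))]
    return [t / n for t in total]


def integrated_gradients(x: Vector, baseline: Vector,
                         grad_fn: Callable[[Vector], Vector],
                         steps: int = 100) -> Vector:
    """(x - baseline) times the gradient averaged along the straight path."""
    total = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint Riemann sum over [0, 1]
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        total = [t + g for t, g in zip(total, grad_fn(point))]
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


x = [1.0, -0.5, 0.2]
baseline = [0.0, 0.0, 0.0]
sg = smoothgrad(x, grad)
ig = integrated_gradients(x, baseline, grad)
print("SmoothGrad:", [round(v, 4) for v in sg])
print("IG:", [round(v, 4) for v in ig])
print("sum(IG) =", round(sum(ig), 4), "vs f(x) - f(baseline) =",
      round(model(x) - model(baseline), 4))
```

For this toy model the Integrated Gradients attributions sum approximately to `model(x) - model(baseline)` (the completeness property), which serves as a quick sanity check; a real CT classifier would obtain `grad_fn` from automatic differentiation rather than a closed-form gradient.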
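The model-centric fidelity metric can be sketched as a deletion test: replace the k highest-scoring inputs with a baseline value and record how much accuracy drops. The exact perturbation scheme used in the study is not stated in the abstract, so the zero baseline, the fixed `k`, the toy linear classifier, and the gradient-times-input explainer below are all hypothetical stand-ins. A larger drop suggests the estimator pointed at inputs the model actually relies on.

```python
from typing import List, Sequence

Vector = List[float]

# Hypothetical toy classifier: linear score thresholded at zero.
W: Vector = [2.0, -1.0, 0.5]
B = -0.5


def model(x: Sequence[float]) -> int:
    return 1 if sum(w * xi for w, xi in zip(W, x)) + B > 0 else 0


def explain(x: Sequence[float]) -> Vector:
    """Gradient x input; for a linear score the gradient is simply W."""
    return [w * xi for w, xi in zip(W, x)]


def accuracy(predict, xs, ys) -> float:
    return sum(1 for x, y in zip(xs, ys) if predict(x) == y) / len(xs)


def perturb_top_k(x, importance, k, baseline=0.0) -> Vector:
    """Replace the k highest-scoring features of x with a baseline value.

    Higher (more positive) scores are treated as more important.
    """
    order = sorted(range(len(x)), key=lambda i: importance[i], reverse=True)
    out = list(x)
    for i in order[:k]:
        out[i] = baseline
    return out


def fidelity_drop(predict, explainer, xs, ys, k) -> float:
    """Accuracy before perturbation minus accuracy after it."""
    before = accuracy(predict, xs, ys)
    perturbed = [perturb_top_k(x, explainer(x), k) for x in xs]
    return before - accuracy(predict, perturbed, ys)


xs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [1.0, 1.0, 1.0], [0.0, 0.0, 1.0]]
ys = [model(x) for x in xs]  # labels = the model's own predictions
print("accuracy drop with k=1:", fidelity_drop(model, explain, xs, ys, k=1))
```

Because the labels here are the model's own predictions, accuracy starts at 1.0; comparing the drop against a random ranking of the same number of inputs is one way to put the figure in context.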
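For the pixel-level concordance metric, each pixel's importance score can be treated as a classifier score and the expert mask as ground-truth labels. The area under the ROC curve then equals the probability that a randomly chosen in-mask pixel outscores a randomly chosen background pixel, with ties counting one half. The sketch below computes that Mann-Whitney form directly on small nested lists; the function name `pixel_auc` and the toy arrays are hypothetical, and the pairwise loop is O(P * N), so full-resolution CT slices would call for a rank-based or library implementation.

```python
from typing import Sequence


def pixel_auc(importance: Sequence[Sequence[float]],
              mask: Sequence[Sequence[int]]) -> float:
    """ROC AUC of pixel importance scores against a binary expert mask.

    Uses the Mann-Whitney form: the fraction of (foreground, background)
    pixel pairs in which the foreground pixel has the higher score.
    """
    scores = [s for row in importance for s in row]
    labels = [m for row in mask for m in row]
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("mask needs both foreground and background pixels")
    wins = 0.0
    for p in pos:          # O(P * N): fine for a toy example only
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


saliency = [[0.9, 0.5], [0.3, 0.1]]
expert_mask = [[1, 0], [1, 0]]
print("pixel-level AUC:", pixel_auc(saliency, expert_mask))
```

An AUC of 0.5 means the map ranks mask pixels no better than chance, while 1.0 means every in-mask pixel scores above every background pixel.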
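The region-wise overlap can be sketched with the Dice similarity coefficient, DSC = 2|A ∩ B| / (|A| + |B|), between a binarized attribution map A and the segmentation mask B. XRAI itself ranks image regions rather than individual pixels; as a simplifying assumption, the hypothetical helper `top_fraction` below binarizes a map by keeping the highest-scoring fraction of pixels, standing in for keeping the top-ranked XRAI regions up to an area budget.

```python
from typing import List, Sequence

Grid = List[List[int]]


def top_fraction(importance: Sequence[Sequence[float]], fraction: float) -> Grid:
    """Hypothetical binarization: keep the highest-scoring fraction of pixels."""
    flat = [s for row in importance for s in row]
    k = max(1, round(fraction * len(flat)))
    order = sorted(range(len(flat)), key=lambda i: flat[i], reverse=True)
    keep = set(order[:k])
    width = len(importance[0])
    return [[1 if r * width + c in keep else 0 for c in range(width)]
            for r in range(len(importance))]


def dice(pred: Sequence[Sequence[int]], mask: Sequence[Sequence[int]]) -> float:
    """Dice similarity coefficient 2|A & B| / (|A| + |B|) for binary grids."""
    a = [bool(p) for row in pred for p in row]
    b = [bool(m) for row in mask for m in row]
    inter = sum(1 for x, y in zip(a, b) if x and y)
    total = sum(a) + sum(b)
    return 1.0 if total == 0 else 2.0 * inter / total  # two empty maps agree


saliency = [[0.9, 0.5], [0.3, 0.1]]
expert_mask = [[1, 0], [1, 0]]
binary_map = top_fraction(saliency, fraction=0.5)
print(binary_map, "DSC:", dice(binary_map, expert_mask))
```

Unlike the pixel-level AUC, the DSC depends on the chosen area budget (`fraction` here), so results are only comparable across estimators when the same budget is used.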
