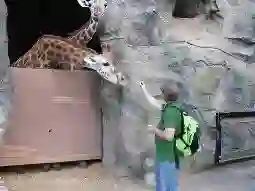

Existing attention mechanisms for Visual Question Answering (VQA) attend either to local image-grid features or to object-level features. Questions can relate both to object instances and to their parts. Motivated by this observation, we propose a novel attention mechanism that jointly considers the reciprocal relationships between these two levels of visual detail. The resulting bottom-up attention is then combined with top-down information, so the model focuses only on the scene elements most relevant to a given question. Our design hierarchically fuses multi-modal information (language, object-level features and grid-level features) through an efficient tensor decomposition scheme. The proposed model raises state-of-the-art single-model performance from 67.9% to 68.2% on VQAv1 (+0.3 points) and from 65.7% to 67.4% on VQAv2 (+1.7 points).
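The abstract does not say which tensor decomposition is used. The sketch below is therefore an assumption: a generic rank-R CP (low-rank) factorization of a trilinear fusion over a question vector `q`, an object-level feature `o` and a grid-level feature `g`. It illustrates why such a scheme is "efficient". The full fusion tensor has `d_out * dq * d_obj * d_grid` weights. The factorized form needs only `R * d_out * (dq + d_obj + d_grid)` weights and never builds the tensor. All function names, sizes and the rank `R` are hypothetical. The sketch is not the authors' method, and it omits the attention steps entirely.

```python
import random


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def low_rank_trilinear_fusion(q, o, g, Wq, Wo, Wg):
    """Hypothetical rank-R CP fusion of question, object and grid features.

    Wq, Wo, Wg each hold R factor matrices of shape d_out x d_in.
    Computes z = sum_r (Wq[r] q) * (Wo[r] o) * (Wg[r] g), with * element-wise,
    without ever materializing the full 4-way weight tensor.
    """
    d_out = len(Wq[0])
    z = [0.0] * d_out
    for Uq, Uo, Ug in zip(Wq, Wo, Wg):
        pq, po, pg = matvec(Uq, q), matvec(Uo, o), matvec(Ug, g)
        for k in range(d_out):
            z[k] += pq[k] * po[k] * pg[k]
    return z


def full_tensor_fusion(q, o, g, Wq, Wo, Wg):
    """Reference: build T[k][i][j][l] from the same factors and contract it with q, o, g."""
    d_out = len(Wq[0])
    z = [0.0] * d_out
    for k in range(d_out):
        for i, qi in enumerate(q):
            for j, oj in enumerate(o):
                for l, gl in enumerate(g):
                    t = sum(Uq[k][i] * Uo[k][j] * Ug[k][l] for Uq, Uo, Ug in zip(Wq, Wo, Wg))
                    z[k] += t * qi * oj * gl
    return z


def rand_matrix(rows, cols, rng):
    """Random matrix with entries in [-1, 1]."""
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
dq, d_obj, d_grid, d_out, R = 4, 3, 5, 2, 3  # toy sizes (hypothetical)
q = [rng.uniform(-1, 1) for _ in range(dq)]      # question embedding
o = [rng.uniform(-1, 1) for _ in range(d_obj)]   # object-level feature
g = [rng.uniform(-1, 1) for _ in range(d_grid)]  # grid-level feature
Wq = [rand_matrix(d_out, dq, rng) for _ in range(R)]
Wo = [rand_matrix(d_out, d_obj, rng) for _ in range(R)]
Wg = [rand_matrix(d_out, d_grid, rng) for _ in range(R)]

z_low = low_rank_trilinear_fusion(q, o, g, Wq, Wo, Wg)
z_full = full_tensor_fusion(q, o, g, Wq, Wo, Wg)

full_params = d_out * dq * d_obj * d_grid            # weights in the full tensor
low_rank_params = R * d_out * (dq + d_obj + d_grid)  # weights in the CP factors
print(z_low, z_full, full_params, low_rank_params)
```

The two fusion functions give the same output. The factorized version grows with the *sum* of the input dimensions, while the full tensor grows with their *product*. This gap is what makes a decomposition attractive when high-dimensional language, object and grid features are fused.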
