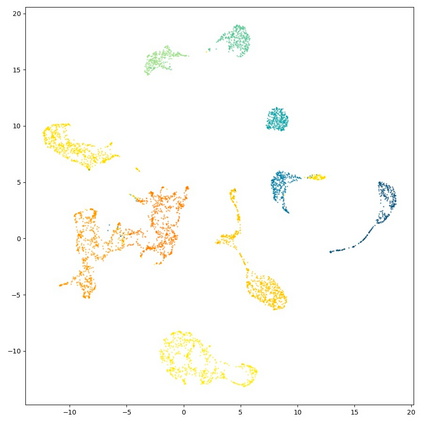

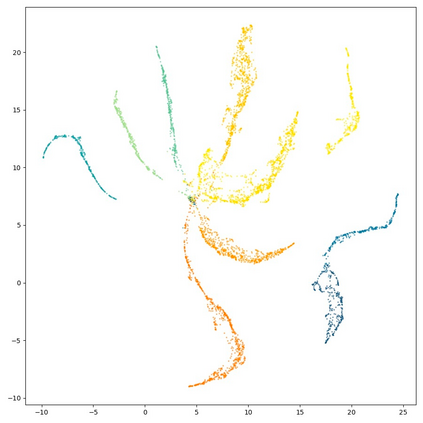

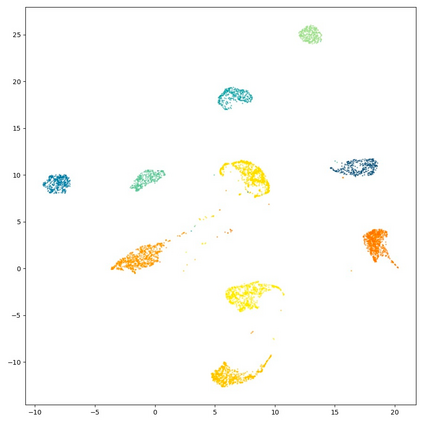

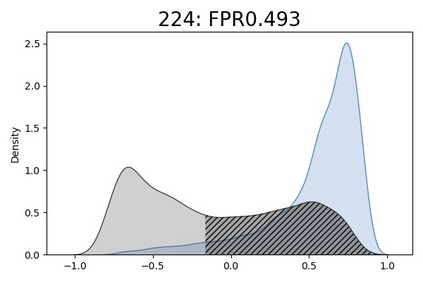

Recent advances in contrastive learning have shown remarkable performance. However, the vast majority of approaches are limited to the closed-world setting. In this paper, we enrich the landscape of representation learning by tapping into an open-world setting, where unlabeled samples from novel classes can naturally emerge in the wild. To bridge the gap, we introduce a new learning framework, open-world contrastive learning (OpenCon). OpenCon tackles the challenges of learning compact representations for both known and novel classes, and facilitates novelty discovery along the way. We demonstrate the effectiveness of OpenCon on challenging benchmark datasets and establish competitive performance. On the ImageNet dataset, OpenCon significantly outperforms the current best method by 11.9% and 7.4% on novel and overall classification accuracy, respectively. We hope that our work will open new doors for future work on this important problem.
