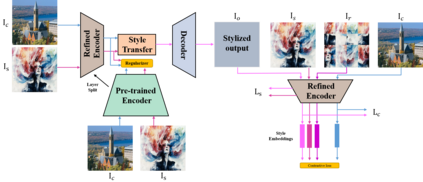

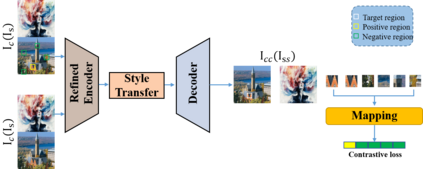

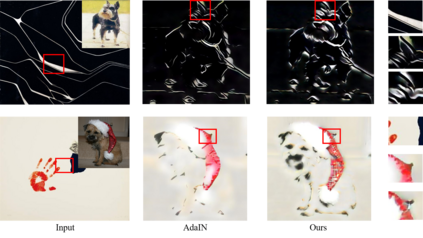

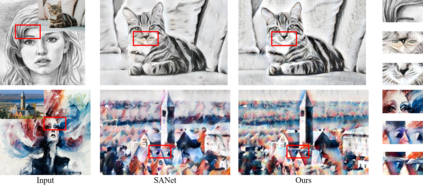

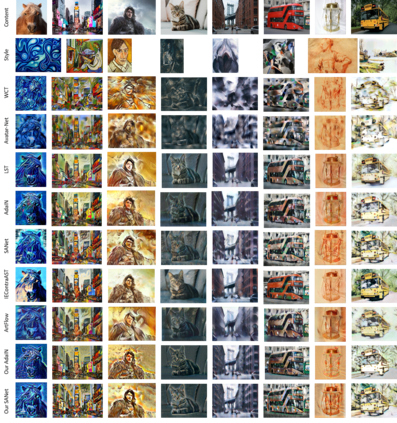

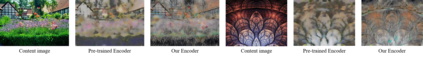

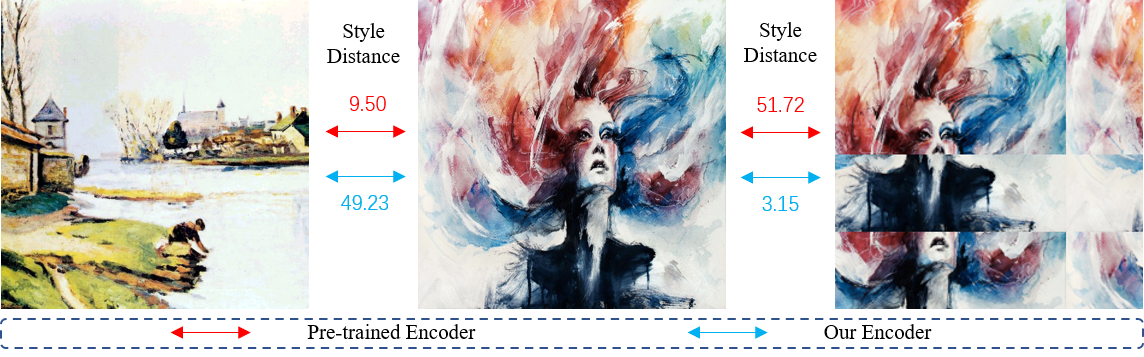

Existing neural style transfer methods match statistics between the deep features of content and style images, extracted by a pre-trained VGG, and have achieved significant improvements in synthesizing artistic images. However, in some cases the feature statistics from the pre-trained encoder are not consistent with the visual style we perceive. For example, the style distance between images of different styles can be smaller than that between images of the same style. In such an inappropriate latent space, the objective function of existing methods is optimized in the wrong direction, resulting in poor stylization. In addition, the lack of content details in the features extracted by the pre-trained encoder leads to the content leak problem. To solve these issues in the latent space used by style transfer, we propose two contrastive training schemes that yield a refined encoder better suited to this task. The style contrastive loss pulls the stylized result closer to an image of the same visual style and pushes it away from the content image. The content contrastive loss enables the encoder to retain more available details. Our training scheme can be added directly to some existing style transfer methods and significantly improves their results. Extensive experimental results demonstrate the effectiveness and superiority of our methods.
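To make the pull/push behaviour of the style contrastive loss concrete, the following is a minimal sketch, not the formulation used in the paper. It assumes an InfoNCE-style loss over cosine similarities, a style descriptor built from per-channel means and standard deviations, and a temperature of 0.1; the function names `style_descriptor`, `cosine`, and `style_contrastive_loss` are hypothetical, and in practice the features would come from the encoder rather than hand-written lists.

```python
import math


def style_descriptor(features):
    """Concatenate per-channel mean and standard deviation.

    `features` is a list of channels, each a flat list of activations.
    Using mean/std as the style statistics is an assumption of this sketch.
    """
    means, stds = [], []
    for channel in features:
        mu = sum(channel) / len(channel)
        var = sum((x - mu) ** 2 for x in channel) / len(channel)
        means.append(mu)
        stds.append(math.sqrt(var))
    return means + stds


def cosine(u, v):
    """Cosine similarity with a small epsilon to avoid division by zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + 1e-8)


def style_contrastive_loss(stylized, style, content, temperature=0.1):
    """InfoNCE-style loss (assumed form): the style image is the positive,
    the content image is the negative, the stylized result is the anchor."""
    anchor = style_descriptor(stylized)
    pos = math.exp(cosine(anchor, style_descriptor(style)) / temperature)
    neg = math.exp(cosine(anchor, style_descriptor(content)) / temperature)
    return -math.log(pos / (pos + neg))


# Toy feature maps: two channels with two activations each.
style_feats = [[1.0, 3.0], [2.0, 6.0]]
content_feats = [[5.0, 5.0], [0.0, 0.0]]

# A result whose statistics match the style image gives a low loss;
# one whose statistics match the content image gives a high loss.
loss_good = style_contrastive_loss(style_feats, style_feats, content_feats)
loss_bad = style_contrastive_loss(content_feats, style_feats, content_feats)
```

Minimizing a loss of this shape moves the stylized result's statistics toward the style image and away from the content image, which is the behaviour the abstract describes. The content contrastive loss is not sketched here because the abstract does not say how its positive and negative pairs are formed.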
