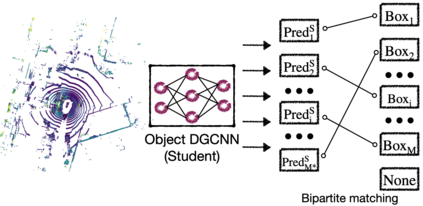

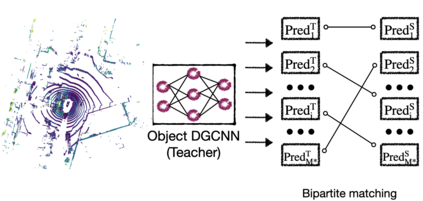

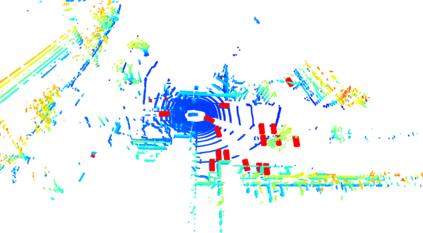

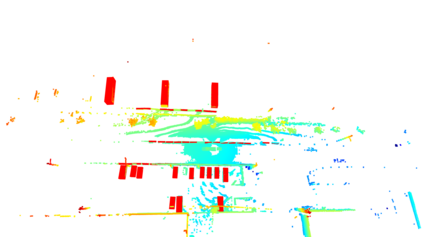

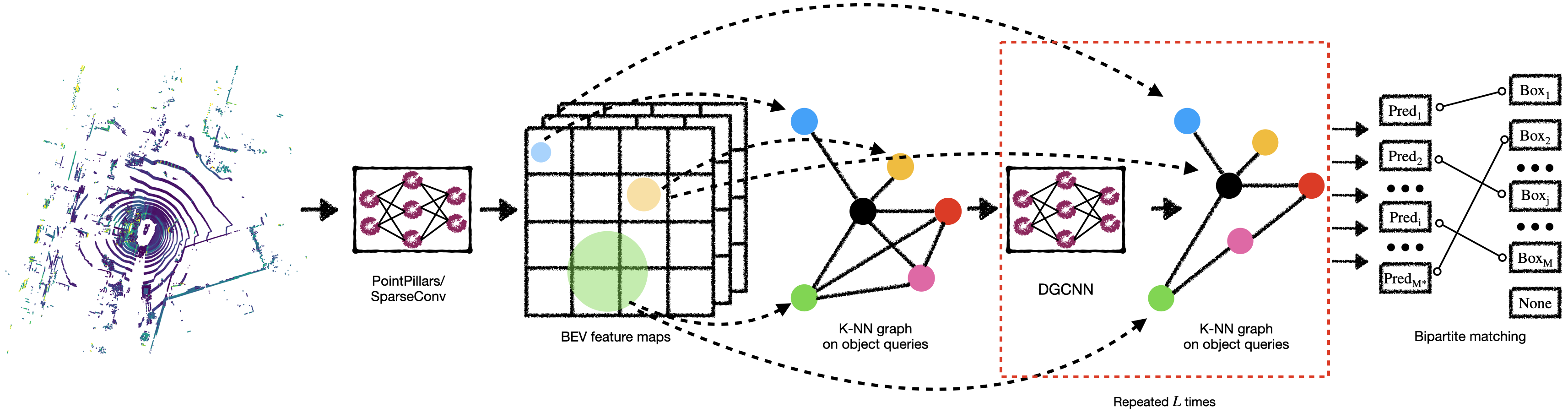

3D object detection often involves complicated training and testing pipelines that require substantial domain knowledge about individual datasets. Inspired by recent 2D object detection models that do not rely on non-maximum suppression (NMS), we propose a 3D object detection architecture for point clouds. Our method models 3D object detection as message passing on a dynamic graph, generalizing the DGCNN framework to predict a set of objects. This construction removes the need for post-processing via object confidence aggregation or non-maximum suppression. To facilitate object detection from sparse point clouds, we also propose a set-to-set distillation approach customized to 3D detection. This approach aligns the outputs of the teacher model and the student model in a permutation-invariant fashion, significantly simplifying knowledge distillation for 3D detection. Our method achieves state-of-the-art performance on autonomous driving benchmarks. We also provide extensive analysis of the detection model and the distillation framework.
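To make "aligns the outputs in a permutation-invariant fashion" concrete, here is a minimal Python sketch of a set-to-set distillation loss. The abstract does not specify the matching procedure or the cost, so this sketch *assumes* a DETR-style one-to-one matching between teacher and student predictions with an L1 cost on box parameters. The names `match_sets` and `set_to_set_distill_loss`, and the plain-tuple box format, are hypothetical. Matching is done by brute force over permutations, which is exact but only practical for a handful of objects; a realistic implementation would use the Hungarian algorithm instead.

```python
from itertools import permutations
from typing import List, Sequence, Tuple

# Hypothetical box parameterization: a flat tuple of floats,
# e.g. (x, y, z, w, l, h, yaw). Assumption, not taken from the paper.
Box = Tuple[float, ...]


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """L1 distance between two box parameter vectors (assumed cost)."""
    assert len(a) == len(b), "boxes must share the same parameterization"
    return sum(abs(x - y) for x, y in zip(a, b))


def match_sets(teacher: List[Box], student: List[Box]) -> List[Tuple[int, int]]:
    """Return (teacher_idx, student_idx) pairs with minimal total L1 cost.

    Brute-force one-to-one matching: exact, but exponential in set size.
    Stands in for the Hungarian algorithm in this sketch.
    """
    assert len(student) >= len(teacher), "need at least one student box per teacher box"
    best_cost = float("inf")
    best_pairs: List[Tuple[int, int]] = []
    # Each permutation assigns a distinct student box to every teacher box.
    for perm in permutations(range(len(student)), len(teacher)):
        cost = sum(l1(teacher[i], student[j]) for i, j in enumerate(perm))
        if cost < best_cost:
            best_cost = cost
            best_pairs = list(enumerate(perm))
    return best_pairs


def set_to_set_distill_loss(teacher: List[Box], student: List[Box]) -> float:
    """Mean L1 cost over matched pairs; invariant to the order of either set."""
    pairs = match_sets(teacher, student)
    total = sum(l1(teacher[i], student[j]) for i, j in pairs)
    return total / max(len(pairs), 1)


if __name__ == "__main__":
    teacher = [(0.0, 0.0, 1.0), (5.0, 5.0, 2.0)]
    student = [(5.1, 4.9, 2.0), (0.2, 0.0, 1.0)]
    print(match_sets(teacher, student))
    print(set_to_set_distill_loss(teacher, student))
    # Reordering the student's predictions does not change the loss.
    print(set_to_set_distill_loss(teacher, list(reversed(student))))
```

The loss is computed only after the matching step, so the order in which either model emits its predictions is irrelevant. Under this assumption, the teacher and student outputs can be compared as unordered sets without any hand-crafted correspondence between them.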

翻译:3D天体探测往往涉及复杂的培训和测试管道,这需要大量关于单个数据集的域域知识。在近期非最大抑制型2D天体探测模型的启发下,我们提议在点云上建立一个3D天体探测结构。我们的方法模型3D天体探测作为信息传递到动态图上,将DGCNN框架概括化,以预测一组天体。在我们的构造中,我们取消了通过物体信任聚合或非最大抑制进行后处理的必要性。为了便利从稀疏云层中探测物体,我们还提议了一个适合3D探测的定置蒸馏方法。这个方法将教师模型和学生模型的输出以变异式方式加以调整,大大简化了3D探测任务的知识蒸馏过程。我们的方法实现了自主驾驶基准的状态。我们还对探测模型和蒸馏框架进行了大量分析。