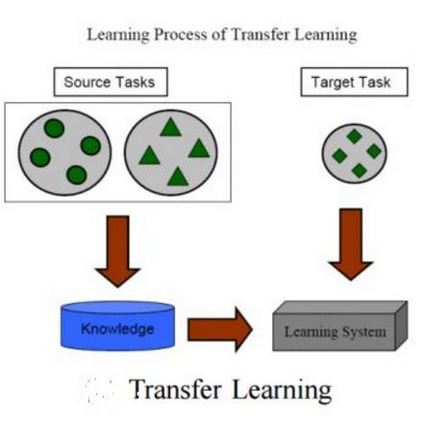

Transfer learning can boost performance on the target task by leveraging knowledge from the source domain. Recent work in neural architecture search (NAS), especially one-shot NAS, can aid transfer learning by establishing a sufficient network search space. However, existing NAS methods tend to approximate huge search spaces by explicitly building giant super-networks with multiple sub-paths, and they discard the super-network weights after a child structure is found. Both characteristics of existing approaches cause repetitive network training on source tasks in transfer learning. To remedy these issues, we reduce the super-network size by randomly dropping connections between network blocks while embedding a larger search space. Moreover, we reuse super-network weights to avoid redundant training by proposing a novel framework consisting of two modules: the neural architecture search module for architecture transfer and the neural weight search module for weight transfer. These two modules conduct search on the target task based on a reduced super-network, so we only need to train once on the source task. We evaluate our framework on both MS-COCO and CUB-200 for the object detection and fine-grained image classification tasks, and show promising improvements with only O(CN) super-network complexity.
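
The idea of randomly dropping connections between network blocks can be illustrated with a minimal sketch. The abstract does not specify the wiring pattern, the drop probability, or how block inputs are aggregated, so everything below is an assumption made for illustration: blocks are densely connected to all earlier blocks, each connection is kept with probability `keep_prob`, the link to the immediately preceding block is always kept, and inputs are summed. The names `sample_connections` and `forward` are hypothetical and are not taken from the paper.

```python
import random


def sample_connections(num_blocks, keep_prob, rng):
    """Sample which earlier blocks feed each block for one training step.

    Assumption: dense wiring, where block i may read from any block j < i.
    Each such connection is kept independently with probability keep_prob.
    """
    connections = []
    for block in range(num_blocks):
        kept = [src for src in range(block) if rng.random() < keep_prob]
        # Assumption: always keep the previous block so the network
        # never becomes disconnected.
        if block > 0 and (block - 1) not in kept:
            kept.append(block - 1)
        connections.append(sorted(kept))
    return connections


def forward(x, block_fns, connections):
    """Run the blocks in order, summing the outputs of the kept sources."""
    outputs = []
    for block, fn in enumerate(block_fns):
        if block == 0:
            block_input = x  # the first block reads the raw input
        else:
            block_input = sum(outputs[src] for src in connections[block])
        outputs.append(fn(block_input))
    return outputs[-1]
```

With `keep_prob=0.0` the sampled structure degenerates to a plain chain, and with `keep_prob=1.0` it is the fully dense network; intermediate values sample a different sub-structure on each call while all sub-structures share the same block weights.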
