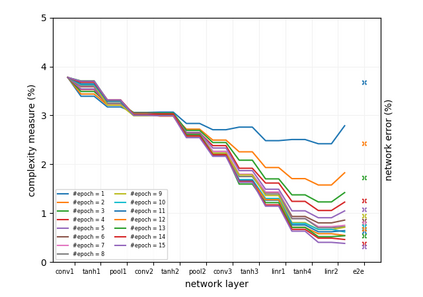

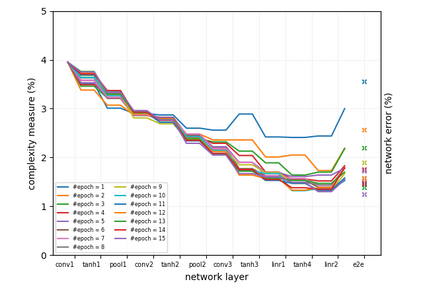

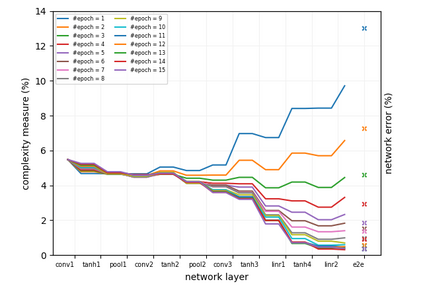

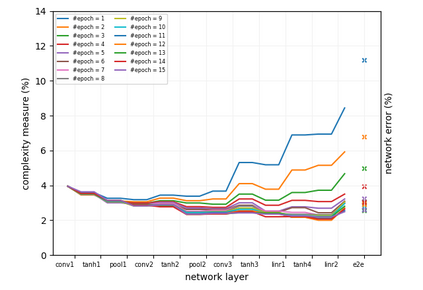

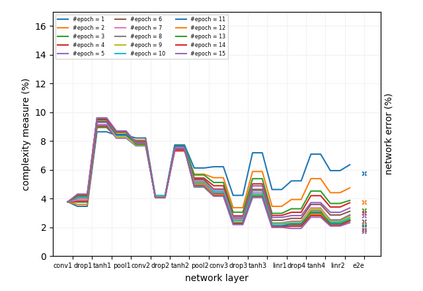

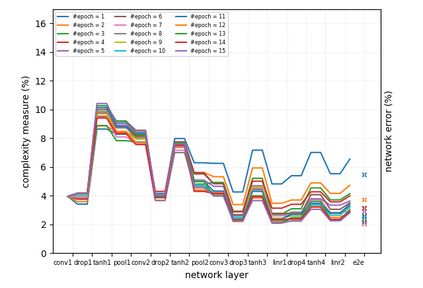

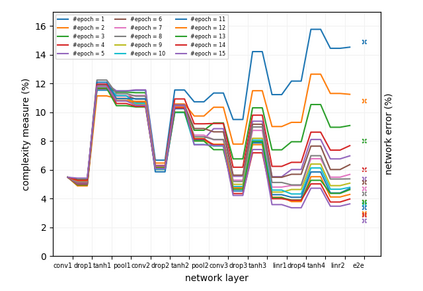

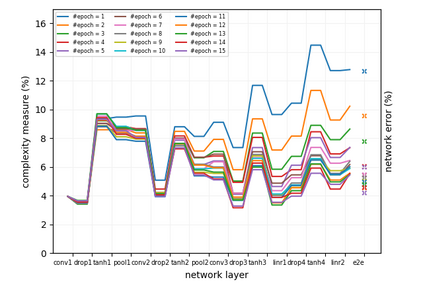

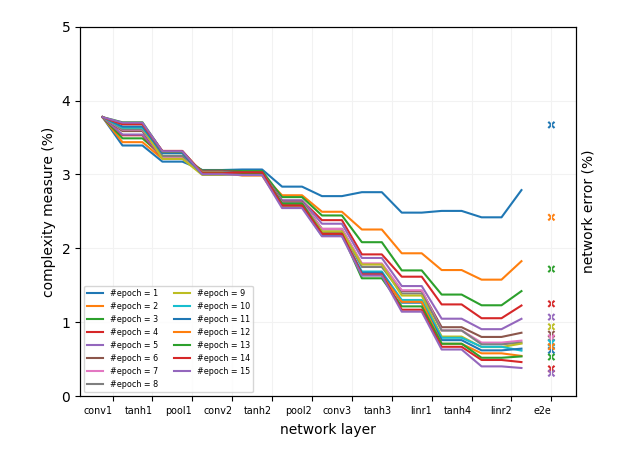

Deep neural networks use multiple layers of functions to map an object, represented by an input vector, progressively to different representations and, with sufficient training, eventually to a single score for each class that is the output of the final decision function. Ideally, objects of different classes achieve maximum separation in this output space. Motivated by the need to better understand the inner workings of a deep neural network, we analyze, from a data complexity perspective, how effectively the learned representations separate the classes. Using a simple complexity measure, a popular benchmarking task, and a well-known architecture design, we show how the data complexity evolves through the network, how it changes during training, and how it is affected by the network design and the availability of training samples. We discuss the implications of these observations and the potential for further study.
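To make the idea of tracking data complexity through a network concrete, the following is a minimal sketch under stated assumptions. The abstract does not name the complexity measure, the benchmark, or the architecture, so the sketch assumes a leave-one-out 1-nearest-neighbor error rate as a stand-in measure and a small fully connected ReLU network as a stand-in architecture. The names `loo_1nn_error` and `layer_complexities` are hypothetical and are not taken from the paper.

```python
import numpy as np


def loo_1nn_error(features, labels):
    """Leave-one-out 1-NN error: the fraction of points whose nearest
    other point carries a different class label (assumed measure)."""
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    # Pairwise squared Euclidean distances via |a|^2 + |b|^2 - 2 a.b
    sq_norms = np.sum(features ** 2, axis=1)
    d2 = sq_norms[:, None] + sq_norms[None, :] - 2.0 * features @ features.T
    # A point must not count as its own nearest neighbor.
    np.fill_diagonal(d2, np.inf)
    nearest = np.argmin(d2, axis=1)
    return float(np.mean(labels[nearest] != labels))


def layer_complexities(x, labels, weights):
    """Return the measure at the input and after each layer of a
    hypothetical ReLU MLP given as a list of (W, b) pairs."""
    h = np.asarray(x, dtype=float)
    scores = [loo_1nn_error(h, labels)]
    for w, b in weights:
        h = np.maximum(h @ w + b, 0.0)  # affine map followed by ReLU
        scores.append(loo_1nn_error(h, labels))
    return scores


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Toy two-class data and untrained random weights, for illustration only.
    x = np.vstack([rng.normal(0.0, 1.0, (50, 4)), rng.normal(1.5, 1.0, (50, 4))])
    y = np.array([0] * 50 + [1] * 50)
    weights = [(rng.normal(size=(4, 8)), np.zeros(8)),
               (rng.normal(size=(8, 8)), np.zeros(8))]
    print(layer_complexities(x, y, weights))
```

Under these assumptions, a lower value at a deeper layer would suggest that the representation at that layer separates the classes more cleanly. Repeating the computation at checkpoints saved during training would give a rough picture of how the measure changes over time.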
