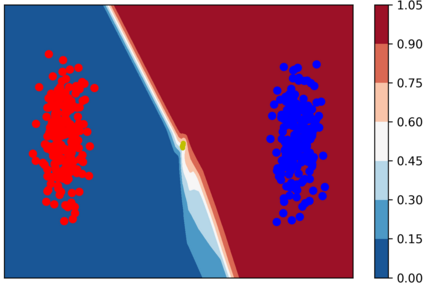

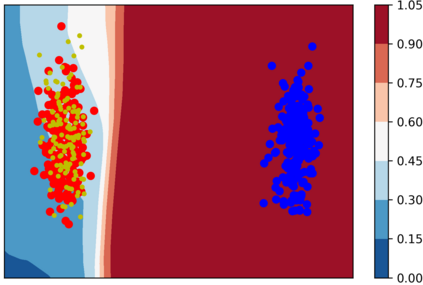

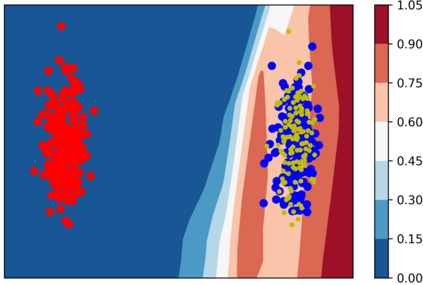

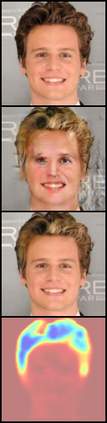

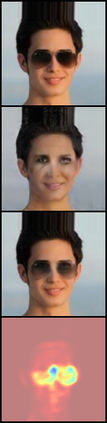

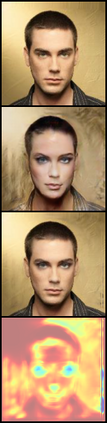

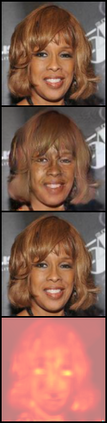

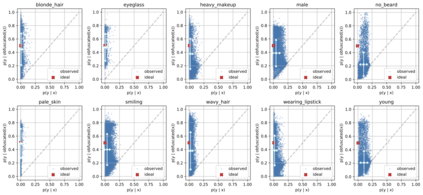

Personal photos of individuals, when shared online, not only capture a myriad of memorable details but also reveal a wide range of private information and can entail privacy risks (e.g., online harassment, tracking). To mitigate such risks, it is crucial to study techniques that allow individuals to limit the private information leaked in visual data. We tackle this problem with a novel image obfuscation framework that maximizes entropy on inferences over targeted privacy attributes while retaining image fidelity. Our approach is based on an encoder-decoder style architecture, with two key novelties: (a) introducing a discriminator to perform bi-directional translation simultaneously from multiple unpaired domains; (b) predicting an image interpolation which maximizes uncertainty over a target set of attributes. We find that our approach generates obfuscated images faithful to the original input images and additionally increases uncertainty by 6.2$\times$ (or up to 0.85 bits) over the non-obfuscated counterparts.
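To make the "maximize uncertainty" objective concrete, the following is a minimal sketch and not the method of the paper. It assumes a single binary privacy attribute, a hypothetical `toy_classifier` standing in for an attribute predictor, and a plain grid search over an interpolation weight `alpha` in place of the learned interpolation predictor described in the abstract. Entropy is measured in bits, so a binary attribute can reach at most 1 bit.

```python
import math
from typing import Callable, List, Sequence, Tuple


def binary_entropy_bits(p: float) -> float:
    """Shannon entropy, in bits, of a Bernoulli(p) prediction."""
    if p <= 0.0 or p >= 1.0:
        return 0.0  # a fully confident prediction carries no uncertainty
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def interpolate(x: Sequence[float], y: Sequence[float], alpha: float) -> List[float]:
    """Linear interpolation between two vectors (stand-ins for images or codes)."""
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(x, y)]


def max_entropy_alpha(
    x: Sequence[float],
    y: Sequence[float],
    classifier: Callable[[Sequence[float]], float],
    steps: int = 101,
) -> Tuple[float, float]:
    """Grid-search the weight whose interpolation the classifier is least sure about.

    Assumption: grid search replaces the learned predictor from the abstract.
    """
    best_alpha, best_entropy = 0.0, -1.0
    for i in range(steps):
        alpha = i / (steps - 1)
        entropy = binary_entropy_bits(classifier(interpolate(x, y, alpha)))
        if entropy > best_entropy:
            best_alpha, best_entropy = alpha, entropy
    return best_alpha, best_entropy


def toy_classifier(v: Sequence[float]) -> float:
    """Hypothetical attribute predictor: a logistic function of the mean value."""
    return 1.0 / (1.0 + math.exp(-4.0 * sum(v) / len(v)))


# Two toy inputs that the classifier assigns to opposite attribute values.
source = [-1.0, -1.0]
target = [1.0, 1.0]
alpha, entropy = max_entropy_alpha(source, target, toy_classifier)
print(f"alpha={alpha:.2f}, entropy={entropy:.3f} bits")
```

In this toy setting the search settles on the midpoint between the two inputs, where the classifier outputs a probability of 0.5. A real system would additionally have to preserve image fidelity, which this sketch does not model.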
