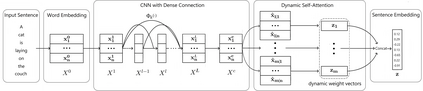

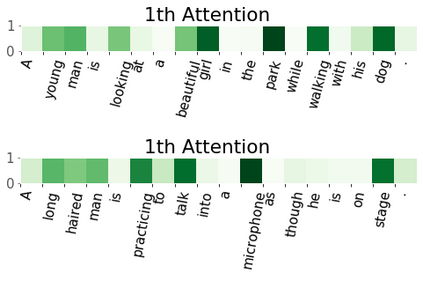

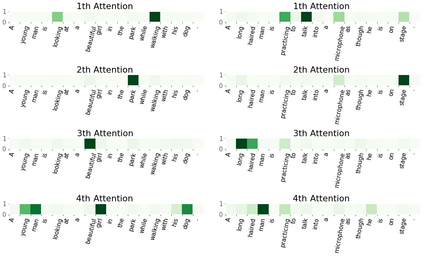

In this paper, we propose Dynamic Self-Attention (DSA), a new self-attention mechanism for sentence embedding. We design DSA by modifying the dynamic routing of capsule networks (Sabour et al., 2017) for natural language processing. DSA attends to informative words with a dynamic weight vector. We achieve new state-of-the-art results among sentence encoding methods on the Stanford Natural Language Inference (SNLI) dataset with the fewest parameters, while showing competitive results on the Stanford Sentiment Treebank (SST) dataset.
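As a rough illustration of the idea, here is a minimal pure-Python sketch of routing-style self-attention that pools the words of a sentence into one vector through iterative agreement. It is not the authors' implementation. The projection matrix `W`, using `tanh` in place of the capsule squash function, normalizing the routing logits with a softmax over words, and the default of three iterations are all assumptions made for illustration, and `dynamic_self_attention` is a hypothetical name. Under these assumptions, the summary vector `z` plays the role of the dynamic weight vector: it is recomputed each iteration, and words that agree with it gain attention.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a list of floats.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def dynamic_self_attention(words, W, iterations=3):
    """Hypothetical sketch: pool word vectors into one sentence vector
    with routing-style iterative attention.

    words: list of n word vectors, each of length d_in
    W: d_out x d_in projection matrix (assumed, shared by all words)
    Returns (sentence_vector, attention_weights).
    """
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    # Project each word once; tanh is an assumed nonlinearity.
    x_hat = [[math.tanh(v) for v in matvec(W, x)] for x in words]
    n, d = len(x_hat), len(x_hat[0])

    # Routing logits start at zero, so the first pass attends uniformly.
    q = [0.0] * n
    for it in range(iterations):
        # Assumption: normalize over words (capsule routing normalizes
        # over output capsules instead).
        a = softmax(q)
        # Weighted sum of projected words, then tanh instead of squash.
        s = [sum(a[j] * x_hat[j][k] for j in range(n)) for k in range(d)]
        z = [math.tanh(v) for v in s]
        if it < iterations - 1:
            # Agreement step: words aligned with the current summary z
            # get larger logits on the next pass.
            for j in range(n):
                q[j] += sum(x_hat[j][k] * z[k] for k in range(d))
    return z, a


words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[1.0, 0.0], [0.0, 1.0]]  # identity projection, for illustration only
z, a = dynamic_self_attention(words, W)
print([round(v, 3) for v in a])
```

With the identity projection, the third word overlaps with both of the others, agrees most with the running summary, and ends up with the largest weight once more than one iteration runs; with `iterations=1` the weights stay uniform. In a trained model `W` would be learned, and several such vectors could be computed with separate projections and concatenated, which is likewise an assumption rather than a detail stated here.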
