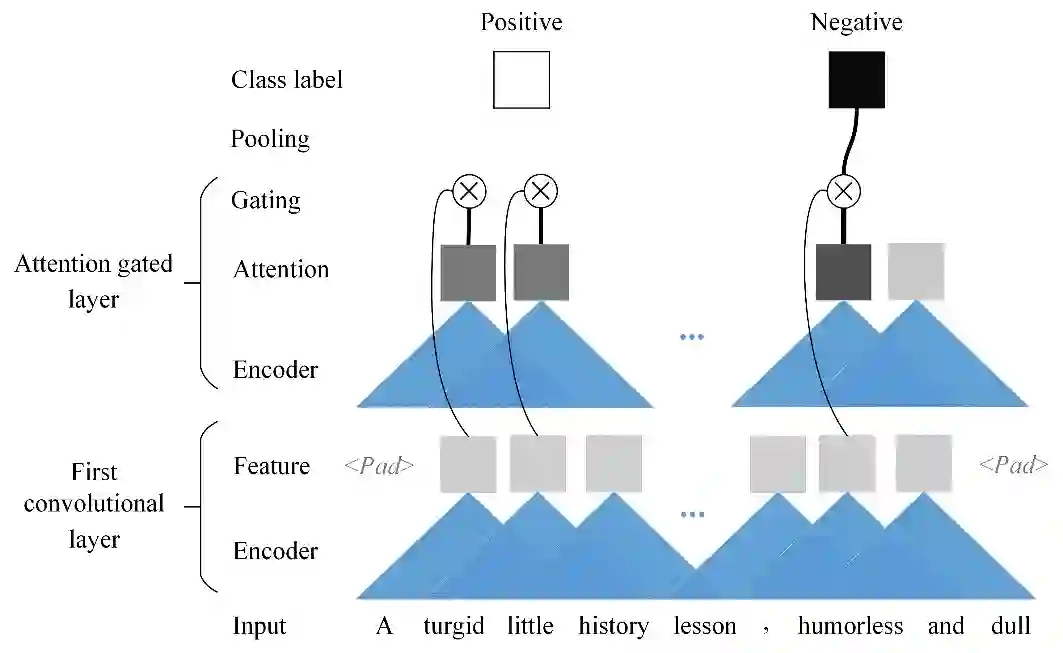

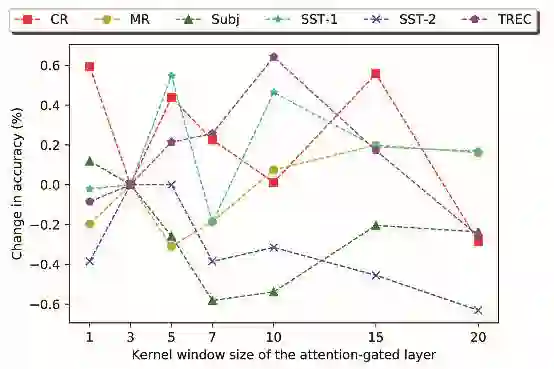

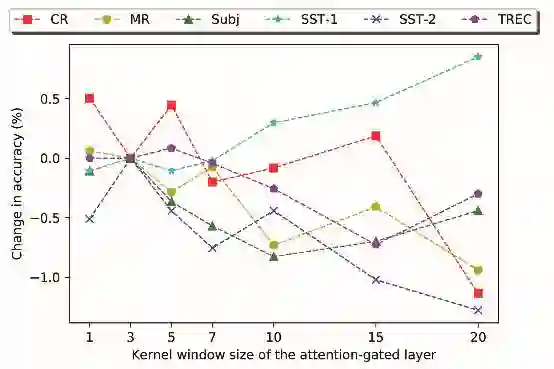

Attention-Gated Convolutional Neural Networks (AGCNNs) have recently performed well on several essential sentence classification tasks and have shown robust performance in practical applications. However, AGCNNs require setting many hyperparameters, and it is not known how sensitive the model's performance is to them. In this paper, we conduct a sensitivity analysis of the effect of different hyperparameters of AGCNNs, e.g., the kernel window size and the number of feature maps. We also investigate how different combinations of hyperparameter settings affect the model's performance, to analyze to what extent different parameter settings contribute to AGCNNs' performance. In addition, we draw practical advice from a wide range of empirical results. Based on the sensitivity analysis experiments, we improve the hyperparameter settings of AGCNNs. Experiments show that our proposals achieve average improvements of 0.81% and 0.67% on AGCNN-NLReLU-rand and AGCNN-SELU-rand, respectively, and average improvements of 0.47% and 0.45% on AGCNN-NLReLU-static and AGCNN-SELU-static, respectively.
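The abstract does not spell out the experimental procedure, so the following is only a minimal sketch of one common way to run such an analysis, assuming a one-factor-at-a-time sweep (vary one hyperparameter, hold the rest at a baseline) plus an exhaustive grid over combinations. The names `window_size` and `feature_maps`, the candidate values, and `toy_evaluate` are all hypothetical: `toy_evaluate` is a synthetic scoring function standing in for "train an AGCNN with this configuration and return validation accuracy", not the authors' model or results.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple

Config = Dict[str, int]
Evaluate = Callable[[Config], float]


def one_at_a_time(baseline: Config, grid: Dict[str, List[int]],
                  evaluate: Evaluate) -> Dict[str, List[Tuple[int, float]]]:
    """Vary each hyperparameter over its candidates, keeping the others at baseline."""
    results: Dict[str, List[Tuple[int, float]]] = {}
    for name, values in grid.items():
        scores = []
        for value in values:
            cfg = dict(baseline)
            cfg[name] = value
            scores.append((value, evaluate(cfg)))
        results[name] = scores
    return results


def spread(sweep: Dict[str, List[Tuple[int, float]]]) -> Dict[str, float]:
    """Score range (max - min) per hyperparameter: a crude sensitivity measure."""
    return {name: max(s for _, s in pairs) - min(s for _, s in pairs)
            for name, pairs in sweep.items()}


def all_combinations(grid: Dict[str, List[int]],
                     evaluate: Evaluate) -> List[Tuple[Config, float]]:
    """Evaluate the full Cartesian product of the grid, best configuration first."""
    names = list(grid)
    ranked = []
    for values in product(*(grid[n] for n in names)):
        cfg = dict(zip(names, values))
        ranked.append((cfg, evaluate(cfg)))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Hypothetical baseline and search space (not taken from the paper).
BASELINE: Config = {"window_size": 3, "feature_maps": 100}
GRID: Dict[str, List[int]] = {
    "window_size": [1, 3, 4, 5, 7],
    "feature_maps": [50, 100, 200, 400],
}


def toy_evaluate(cfg: Config) -> float:
    # Synthetic stand-in for training and validating a model; a real run would
    # train the network and ideally average accuracy over several random seeds.
    return (0.80
            - 0.01 * abs(cfg["window_size"] - 4)
            - 0.00005 * abs(cfg["feature_maps"] - 200))
```

With these stand-ins, `spread(one_at_a_time(BASELINE, GRID, toy_evaluate))` reports a larger score range for `window_size` than for `feature_maps`, and `all_combinations(GRID, toy_evaluate)[0]` returns the best joint setting. The one-at-a-time sweep is cheap but ignores interactions between hyperparameters, which is why a combination search is run alongside it.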
