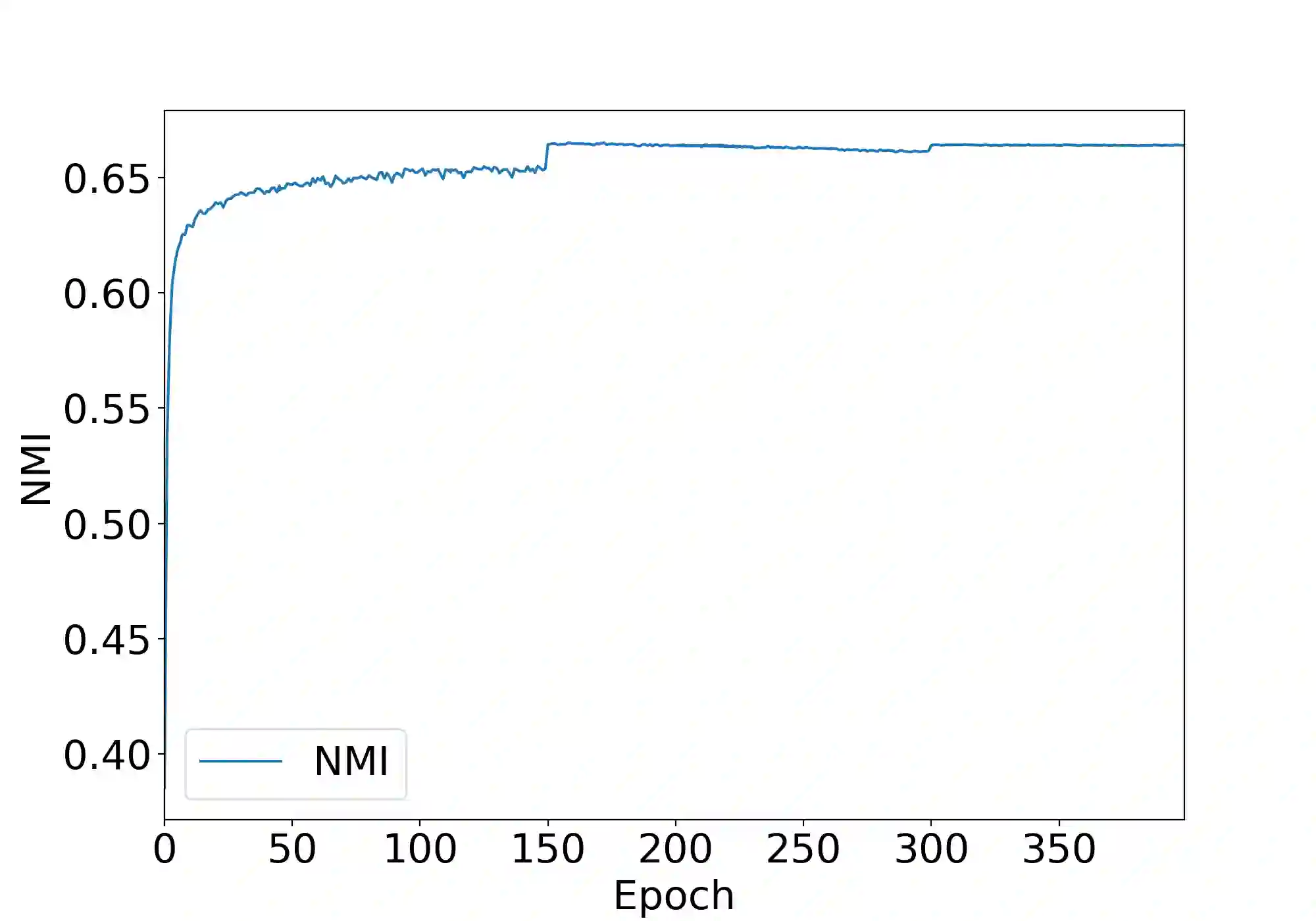

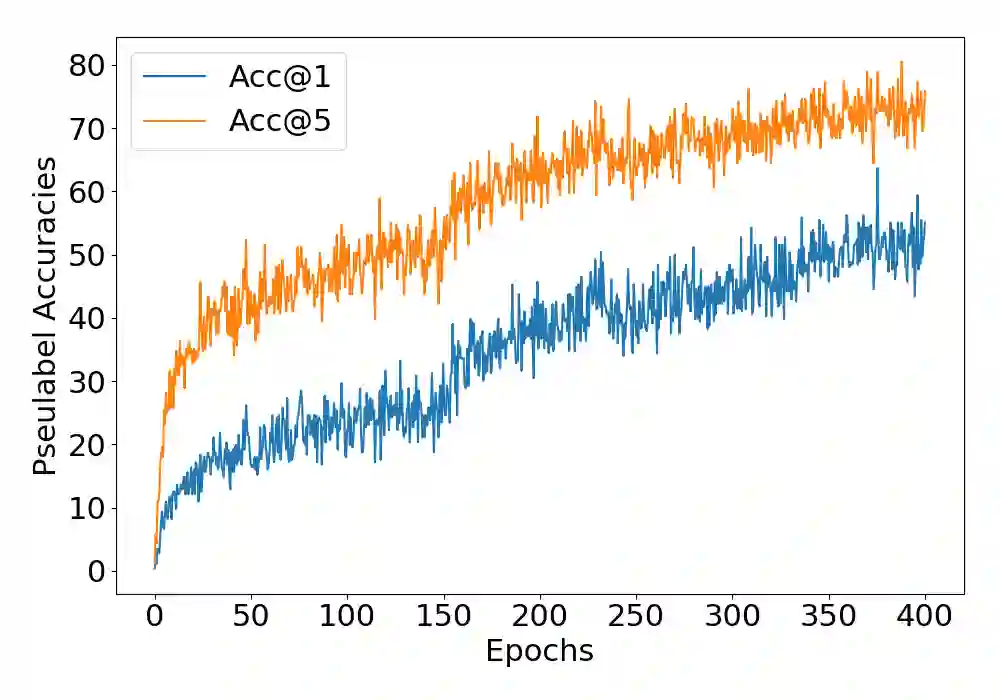

Combining clustering and representation learning is one of the most promising approaches for unsupervised learning of deep neural networks. However, doing so naively leads to ill-posed learning problems with degenerate solutions. In this paper, we propose a novel and principled learning formulation that addresses these issues. The method is obtained by maximizing the mutual information between labels and input data indices. We show that this criterion extends standard cross-entropy minimization to an optimal transport problem, which we solve efficiently for millions of input images and thousands of labels using a fast variant of the Sinkhorn-Knopp algorithm. The resulting method self-labels visual data, which makes it possible to train highly competitive image representations without manual labels. Our method achieves state-of-the-art representation learning performance for AlexNet and ResNet-50 on SVHN, CIFAR-10, CIFAR-100 and ImageNet.
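The optimal-transport labelling step can be illustrated with a small pure-Python sketch. Assuming the network outputs log-probabilities log p(y|x_i) for N samples and K labels, the sketch looks for an assignment q that stays close to P^λ while forcing every label to receive the same share of the data. This equal-partition constraint is what rules out the degenerate "everything in one cluster" solution. It is enforced here by the alternating column/row rescaling of Sinkhorn-Knopp. The function name `sinkhorn_self_label`, the defaults for `lam` and `n_iters`, and the N×K layout are illustrative assumptions, not the authors' implementation. A run over millions of images would use vectorised array operations instead of nested lists.

```python
import math


def sinkhorn_self_label(log_probs, lam=10.0, n_iters=200):
    """Sketch of equipartitioned self-labelling with Sinkhorn-Knopp.

    log_probs: N x K nested list, log_probs[i][y] = log p(y | x_i).
    lam: hypothetical default for the regularisation strength; larger lam
         gives a sharper, closer-to-hard assignment.
    Returns (q, labels): q is an N x K transport plan whose rows sum to 1/N
    and whose columns sum (approximately) to 1/K; labels[i] = argmax_y q[i][y].
    """
    n, k = len(log_probs), len(log_probs[0])
    # Kernel P^lam. Subtracting each row's max only rescales that row, which
    # the Sinkhorn scaling absorbs, and keeps every entry <= 1 (no overflow).
    q = []
    for row in log_probs:
        m = max(row)
        q.append([math.exp(lam * (v - m)) for v in row])

    for _ in range(n_iters):
        # Column step: every label receives the same total mass 1/K.
        col_sums = [sum(q[i][j] for i in range(n)) for j in range(k)]
        for i in range(n):
            for j in range(k):
                q[i][j] *= (1.0 / k) / col_sums[j]
        # Row step: every sample carries total mass 1/N.
        for i in range(n):
            s = sum(q[i])
            q[i] = [v / (n * s) for v in q[i]]

    # Hard pseudo-labels: the most probable label of each sample under q.
    labels = [max(range(k), key=row.__getitem__) for row in q]
    return q, labels


if __name__ == "__main__":
    # Four samples that all prefer label 0, with decreasing confidence.
    probs0 = [0.9, 0.8, 0.7, 0.6]
    log_probs = [[math.log(p), math.log(1.0 - p)] for p in probs0]
    q, labels = sinkhorn_self_label(log_probs, lam=1.0)
    print(labels)  # [0, 0, 1, 1]
```

In the usage example every sample prefers label 0, so a plain argmax over p would place all four in a single cluster. Under the equal-partition constraint with K = 2, the two least confident samples are moved to label 1 instead.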
