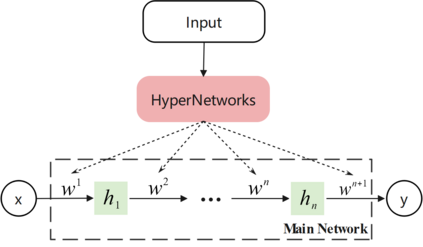

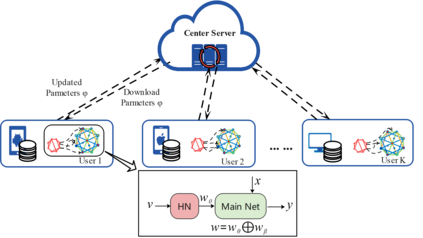

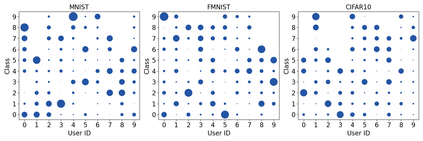

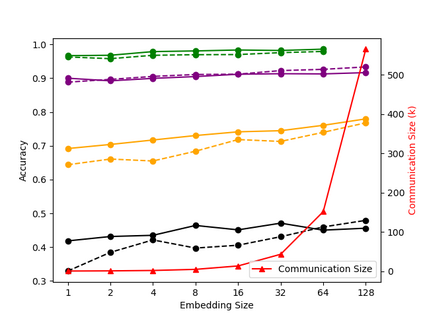

There are still many challenges in Federated Learning (FL). First, during model updates, each user's local model parameters must be sent to the server for aggregation. This consumes network bandwidth, especially when many users participate, and the resulting communication cost may limit the use of FL in some scenarios. Second, users participating in FL usually have different data distributions, and this data heterogeneity may lead to poor model performance or even prevent convergence. Third, privacy and security remain open problems: information can still leak during model aggregation, because malicious users may infer sensitive information by analyzing the communication exchanged during model updates or aggregation. To address these challenges, we propose HyperFedNet (HFN), an approach that leverages hypernetworks. HFN changes what is transmitted and aggregated in FL. Unlike traditional FL methods, which transmit the large set of main-network parameters, HFN transmits a compact set of hypernetwork parameters, which reduces the communication burden and improves security. Once the hypernetwork parameters are deployed to a user, an embedding vector that encodes features of the user's local data serves as the hypernetwork's input, and a forward pass on the user's side dynamically generates the parameters of the FL main network. In this way, HFN reduces communication costs while improving accuracy. Extensive experiments show that HFN significantly outperforms traditional FL methods. Integrating the same idea into a conventional FL algorithm further improves its results over the original version.
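The data flow described above can be illustrated with a small sketch. It is not the HFN implementation: the linear hypernetwork, the one-layer main network, the use of the mean input vector as the client embedding, plain SGD, and sample-size-weighted FedAvg are all assumptions, and every function name (`client_embedding`, `generate`, `main_forward`, `local_update`, `fedavg`) is hypothetical. Each client generates its main-network parameters locally from a private embedding, trains by backpropagating through the hypernetwork, and uploads only the hypernetwork weights. The sketch does not model HFN's compactness; here the hypernetwork (32 weights) is actually larger than the main network it generates (8 weights).

```python
import random
from typing import List, Tuple

Vector = List[float]

IN_DIM, OUT_DIM = 3, 2                 # hypothetical main-network shape
N_MAIN = OUT_DIM * IN_DIM + OUT_DIM    # main-network parameter count (W and c)
EMBED_DIM = IN_DIM                     # embedding = mean input vector (assumption)
N_HYPER = N_MAIN * EMBED_DIM + N_MAIN  # hypernetwork parameter count (H and b)


def client_embedding(xs: List[Vector]) -> Vector:
    """Hypothetical client embedding: per-feature mean of the local inputs.
    It is computed and kept on the client."""
    return [sum(col) / len(xs) for col in zip(*xs)]


def generate(hyper: Vector, z: Vector) -> Vector:
    """Linear hypernetwork theta = H z + b; H (N_MAIN x EMBED_DIM, row-major)
    and b are stored in one flat vector."""
    theta = []
    for p in range(N_MAIN):
        row = hyper[p * EMBED_DIM:(p + 1) * EMBED_DIM]
        theta.append(sum(h * zj for h, zj in zip(row, z)) + hyper[N_MAIN * EMBED_DIM + p])
    return theta


def main_forward(theta: Vector, x: Vector) -> Vector:
    """Main network: one linear layer y = W x + c, unpacked from theta."""
    return [sum(w * xj for w, xj in zip(theta[i * IN_DIM:(i + 1) * IN_DIM], x))
            + theta[OUT_DIM * IN_DIM + i] for i in range(OUT_DIM)]


def loss(hyper: Vector, data: List[Tuple[Vector, Vector]]) -> float:
    """Mean of 0.5 * squared error over one client's samples."""
    theta = generate(hyper, client_embedding([x for x, _ in data]))
    return sum(0.5 * sum((p - t) ** 2 for p, t in zip(main_forward(theta, x), y))
               for x, y in data) / len(data)


def local_update(hyper: Vector, data: List[Tuple[Vector, Vector]], lr: float = 0.01) -> Vector:
    """One SGD pass; gradients flow through the generated parameters into the
    hypernetwork weights by the chain rule. Returns an updated copy."""
    hyper = list(hyper)
    z = client_embedding([x for x, _ in data])
    for x, y in data:
        err = [p - t for p, t in zip(main_forward(generate(hyper, z), x), y)]
        # dL/dtheta: dL/dW[i][j] = err[i] * x[j], dL/dc[i] = err[i]
        g_theta = [err[i] * x[j] for i in range(OUT_DIM) for j in range(IN_DIM)] + err
        for p in range(N_MAIN):
            for j in range(EMBED_DIM):  # dL/dH[p][j] = g_theta[p] * z[j]
                hyper[p * EMBED_DIM + j] -= lr * g_theta[p] * z[j]
            hyper[N_MAIN * EMBED_DIM + p] -= lr * g_theta[p]  # dL/db[p] = g_theta[p]
    return hyper


def fedavg(states: List[Vector], sizes: List[int]) -> Vector:
    """Server step: sample-size-weighted average of hypernetwork weights only."""
    total = sum(sizes)
    return [sum(n * s[k] for s, n in zip(states, sizes)) / total
            for k in range(len(states[0]))]


# Usage: two clients with shifted input distributions and a shared linear target.
rng = random.Random(0)


def make_client(shift: float, n: int) -> List[Tuple[Vector, Vector]]:
    xs = [[rng.uniform(0.0, 1.0) + shift for _ in range(IN_DIM)] for _ in range(n)]
    return [(x, [x[0] + x[1] + x[2], x[0] - x[1]]) for x in xs]


clients = [make_client(0.0, 20), make_client(0.5, 10)]
sizes = [len(d) for d in clients]
hyper = [rng.gauss(0.0, 0.1) for _ in range(N_HYPER)]


def avg_loss(h: Vector) -> float:
    return sum(n * loss(h, d) for d, n in zip(clients, sizes)) / sum(sizes)


initial_loss = avg_loss(hyper)
for _ in range(20):  # 20 communication rounds
    uploads = [local_update(hyper, d) for d in clients]  # only hyper leaves a client
    hyper = fedavg(uploads, sizes)
final_loss = avg_loss(hyper)
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}")
```

In this sketch the two clients have different embeddings, so the same shared hypernetwork emits different main-network parameters for each of them, while the server only ever sees hypernetwork weights.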