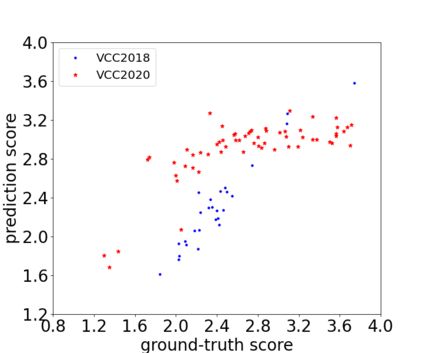

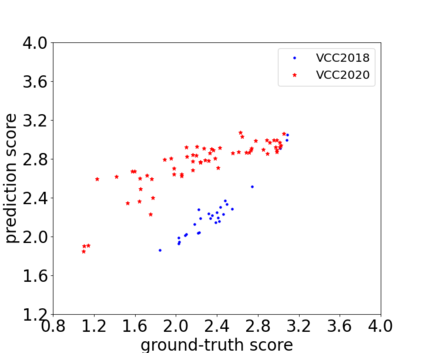

Neural evaluation metrics developed for a range of speech generation tasks have recently attracted considerable attention. In this paper, we propose SVSNet, the first end-to-end neural network model to assess the speaker voice similarity between natural speech and synthesized speech. Unlike most neural evaluation metrics that use hand-crafted features, SVSNet takes the raw waveform directly as input so that more of the speech information is available for prediction. SVSNet consists of encoder, co-attention, distance calculation, and prediction modules and is trained end to end. Experimental results on the Voice Conversion Challenge 2018 and 2020 (VCC2018 and VCC2020) datasets show that SVSNet notably outperforms well-known baseline systems in assessing speaker similarity at both the utterance and system levels.
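To make the four-module data flow concrete, the following is a minimal NumPy sketch of an encoder, co-attention, distance calculation, and prediction pipeline operating on two raw waveforms. It is not the SVSNet implementation: the frame size, hop, feature width, random untrained weights, the dot-product attention, the mean absolute distance, and the exponential output mapping are all assumptions chosen for illustration, and the names `encode`, `co_attend`, and `similarity` are hypothetical. With untrained weights the scores carry no meaning; only the shapes and the order of operations are of interest.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 25 ms frames with a 10 ms hop at 16 kHz, 16-dim features.
FRAME, HOP, DIM = 400, 160, 16

# Random, untrained weights standing in for learned parameters (assumption).
W_enc = rng.standard_normal((FRAME, DIM)) / np.sqrt(FRAME)
w_out = np.abs(rng.standard_normal(DIM)) / DIM


def encode(wave):
    """Encoder stand-in: frame the raw waveform and project each frame."""
    n_frames = 1 + (len(wave) - FRAME) // HOP
    frames = np.stack([wave[i * HOP : i * HOP + FRAME] for i in range(n_frames)])
    return np.tanh(frames @ W_enc)  # shape (n_frames, DIM)


def co_attend(query, memory):
    """Co-attention stand-in: align `memory` to the time axis of `query`."""
    scores = query @ memory.T / np.sqrt(DIM)
    scores -= scores.max(axis=1, keepdims=True)  # softmax numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ memory  # shape (len(query), DIM)


def similarity(wave_a, wave_b):
    """Return a score in (0, 1]; larger means a smaller feature distance."""
    a, b = encode(wave_a), encode(wave_b)
    # Distance stand-in: mean absolute difference between each sequence and
    # the other sequence aligned to it, pooled over time.
    d_ab = np.abs(a - co_attend(a, b)).mean(axis=0)
    d_ba = np.abs(b - co_attend(b, a)).mean(axis=0)
    # Prediction stand-in: weighted sum of both directions mapped into (0, 1].
    return float(np.exp(-0.5 * (d_ab + d_ba) @ w_out))


# Two synthetic "utterances" of different lengths (1.0 s and 0.75 s at 16 kHz).
natural = rng.standard_normal(16000)
converted = rng.standard_normal(12000)
score = similarity(natural, converted)
```

Because the two attention directions are summed before the output mapping, this sketch returns the same score regardless of argument order, and the attention step lets it compare utterances of different lengths. Both properties are design choices of the sketch rather than claims about the paper's model.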
