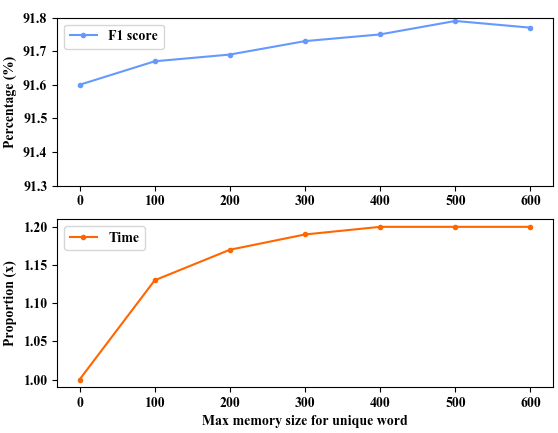

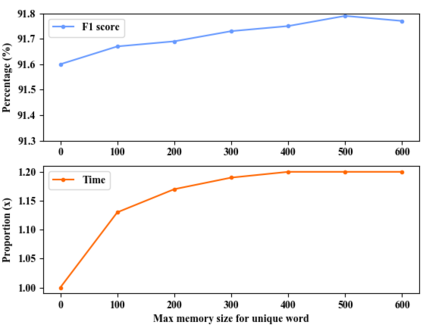

Named entity recognition (NER) models are typically built on a bidirectional LSTM (BiLSTM) architecture. The sequential nature of the BiLSTM and its modeling of a single input at a time prevent it from fully using global information from a larger scope, whether the entire sentence or the entire document (dataset). In this paper, we address these two deficiencies and propose a model augmented with hierarchical contextualized representations: a sentence-level representation and a document-level representation. At the sentence level, we take into account the different contributions of words in a single sentence, using a label embedding attention mechanism to enhance the sentence representation learned from an independent BiLSTM. At the document level, a key-value memory network records document-aware information for each unique word, which is sensitive to the similarity of context information. The two levels of hierarchical contextualized representation are fused with each input token embedding and the corresponding BiLSTM hidden state, respectively. Experimental results on three benchmark NER datasets (the CoNLL-2003 and OntoNotes 5.0 English datasets and the CoNLL-2002 Spanish dataset) show that we establish new state-of-the-art results.
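To make the document-level idea concrete, the following is a minimal sketch of a key-value memory read, not the paper's implementation. It assumes that each occurrence of a word is stored as one slot, with the token embedding as the key and the BiLSTM hidden state as the value, and that slots are weighted by a softmax over dot-product similarity. The class name `KeyValueMemory`, its methods, and the choice of similarity function are all hypothetical; the abstract does not specify them.

```python
import math
from collections import defaultdict


class KeyValueMemory:
    """Toy document-level memory: one list of (key, value) slots per unique word.

    Assumption: key = token embedding of an occurrence,
    value = hidden state at that occurrence.
    """

    def __init__(self):
        self.slots = defaultdict(list)

    def write(self, word, key, value):
        # Record one occurrence of `word` seen somewhere in the document.
        self.slots[word].append((list(key), list(value)))

    def read(self, word, query):
        # Return a similarity-weighted average of the stored values,
        # or None if the word has never been written.
        entries = self.slots.get(word)
        if not entries:
            return None
        # Dot-product similarity between the query and every stored key
        # (an assumed choice; other similarity functions would also work).
        scores = [sum(q * k for q, k in zip(query, key)) for key, _ in entries]
        # Numerically stable softmax over the slots.
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        dim = len(entries[0][1])
        return [
            sum(w / total * value[i] for w, (_, value) in zip(weights, entries))
            for i in range(dim)
        ]


memory = KeyValueMemory()
memory.write("Paris", key=[1.0, 0.0], value=[1.0, 0.0])  # e.g. a location context
memory.write("Paris", key=[0.0, 1.0], value=[0.0, 1.0])  # e.g. a person context
doc_repr = memory.read("Paris", query=[10.0, 0.0])
```

Here the query is much closer to the first key, so `doc_repr` is dominated by the first stored value. In a full model this vector would then be fused with the corresponding BiLSTM hidden state; that fusion step is not shown.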
