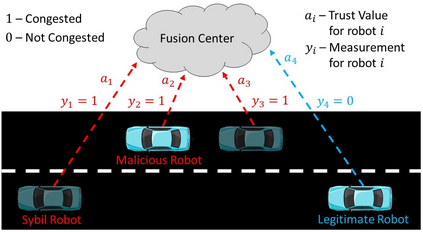

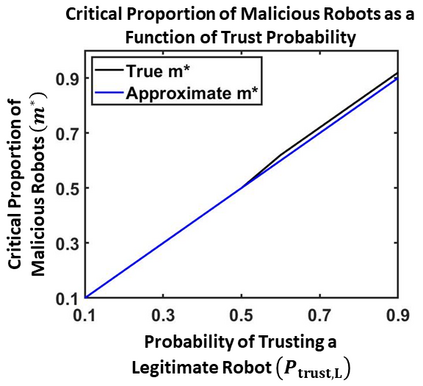

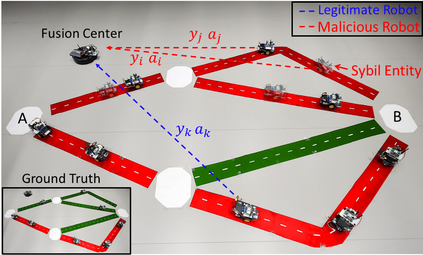

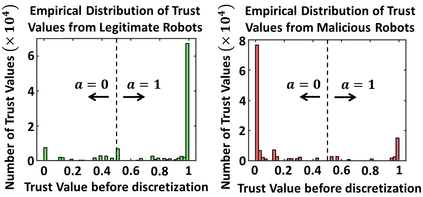

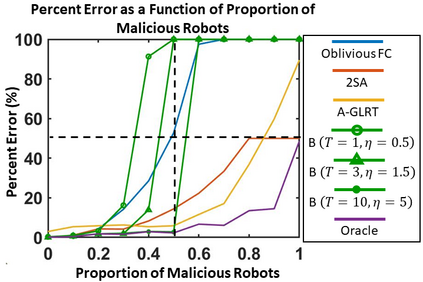

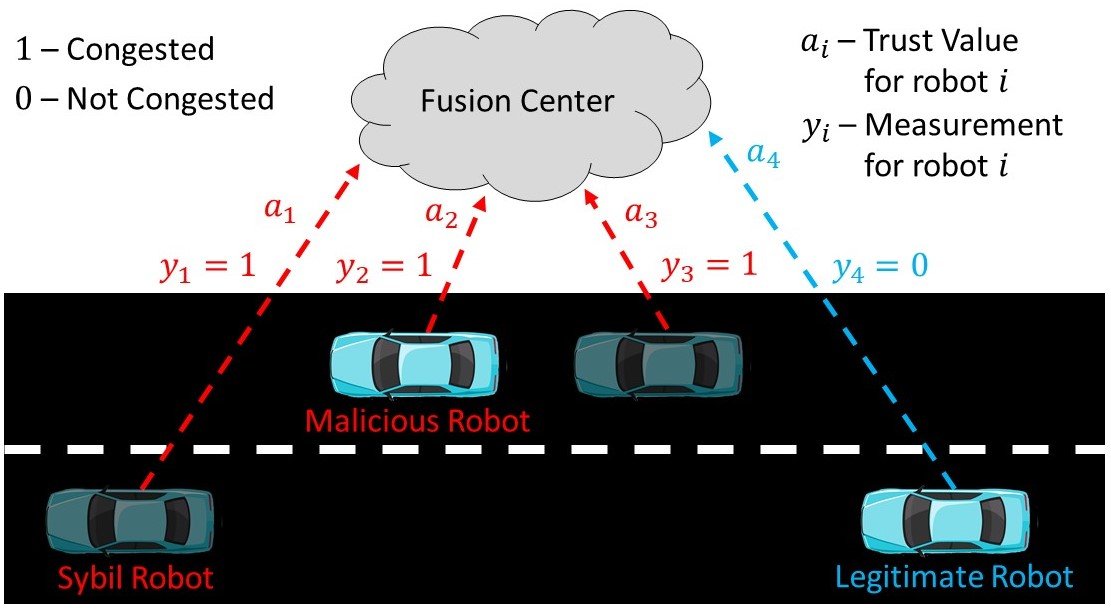

We develop a resilient binary hypothesis testing framework for decision making in adversarial multi-robot crowdsensing tasks. This framework exploits stochastic trust observations between robots to arrive at tractable, resilient decision making at a centralized Fusion Center (FC) even when i) there exist malicious robots in the network and their number may be larger than the number of legitimate robots, and ii) the FC uses one-shot noisy measurements from all robots. We derive two algorithms to achieve this. The first is the Two Stage Approach (2SA) that estimates the legitimacy of robots based on received trust observations, and provably minimizes the probability of detection error in the worst-case malicious attack. Here, the proportion of malicious robots is known but arbitrary. For the case of an unknown proportion of malicious robots, we develop the Adversarial Generalized Likelihood Ratio Test (A-GLRT) that uses both the reported robot measurements and trust observations to estimate the trustworthiness of robots, their reporting strategy, and the correct hypothesis simultaneously. We exploit special problem structure to show that this approach remains computationally tractable despite several unknown problem parameters. We deploy both algorithms in a hardware experiment where a group of robots conducts crowdsensing of traffic conditions on a mock-up road network similar in spirit to Google Maps, subject to a Sybil attack. We extract the trust observations for each robot from actual communication signals which provide statistical information on the uniqueness of the sender. We show that even when the malicious robots are in the majority, the FC can reduce the probability of detection error to 30.5% and 29% for the 2SA and the A-GLRT respectively.
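The two-stage idea described above can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes binary robot reports, a trust observation in [0, 1] per robot, and a fixed cutoff of 0.5 in place of the likelihood-based legitimacy estimate. The function name `two_stage_decision` and the parameters `p_fa`, `p_md`, `trust_threshold`, and `llr_threshold` are hypothetical and do not come from the abstract.

```python
import math


def two_stage_decision(measurements, trust, p_fa=0.2, p_md=0.2,
                       trust_threshold=0.5, llr_threshold=0.0):
    """Illustrative two-stage test; returns (decision, trusted_indices).

    measurements: list of 0/1 reports, one per robot (assumed binary).
    trust: list of trust observations in [0, 1], one per robot (assumed).
    p_fa, p_md: assumed false-alarm and missed-detection rates of a
        legitimate robot's sensor.
    """
    # Stage 1: estimate legitimacy from the trust observations alone.
    # A simple cutoff stands in for a likelihood-based rule here.
    trusted = [i for i, a in enumerate(trust) if a >= trust_threshold]

    # Stage 2: log-likelihood ratio test using only the trusted robots.
    llr = 0.0
    for i in trusted:
        if measurements[i] == 1:
            llr += math.log((1 - p_md) / p_fa)
        else:
            llr += math.log(p_md / (1 - p_fa))

    # Decide H1 when the evidence exceeds the threshold; ties go to H0.
    decision = 1 if llr > llr_threshold else 0
    return decision, trusted


# Three of five robots are malicious and report 0, but they receive
# low trust observations, so only robots 0 and 1 are used in stage 2.
decision, trusted = two_stage_decision(
    measurements=[1, 1, 0, 0, 0],
    trust=[0.9, 0.8, 0.2, 0.1, 0.3],
)
```

In this toy run the malicious majority is filtered out in the first stage, so the decision follows the two trusted reports. The A-GLRT differs in that it estimates legitimacy, the attackers' reporting strategy, and the hypothesis jointly; this sketch does not attempt that.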
