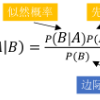

The Naive Bayesian classifier is a popular classification method employing the Bayesian paradigm. The concept of having conditional dependence among input variables sounds good in theory but can lead to a majority vote style behaviour. Achieving conditional independence is often difficult, and they introduce decision biases in the estimates. In Naive Bayes, certain features are called independent features as they have no conditional correlation or dependency when predicting a classification. In this paper, we focus on the optimal partition of features by proposing a novel technique called the Comonotone-Independence Classifier (CIBer) which is able to overcome the challenges posed by the Naive Bayes method. For different datasets, we clearly demonstrate the efficacy of our technique, where we achieve lower error rates and higher or equivalent accuracy compared to models such as Random Forests and XGBoost.

翻译:暂无翻译