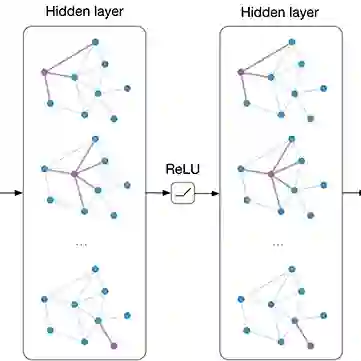

Graph Convolutional Networks (GCNs) show promising results for semi-supervised learning tasks on graphs and have thus become favored over other approaches. Despite this success, GCNs are difficult to train with insufficient supervision: when labeled data are limited, their performance becomes unsatisfactory for low-degree nodes. While some prior work analyzes the successes and failures of GCNs at the level of the entire model, profiling GCNs at the level of individual nodes remains underexplored. In this paper, we analyze GCNs with regard to the node degree distribution. From empirical observation to theoretical proof, we confirm that GCNs are biased towards high-degree nodes, achieving higher accuracy on them, even though high-degree nodes are underrepresented in most graphs. We further develop a novel Self-Supervised-Learning Degree-Specific GCN (SL-DSGC) that mitigates the degree-related biases of GCNs from both the model and the data aspects. First, we propose a degree-specific GCN layer that captures both the discrepancies and the similarities of nodes with different degrees, which reduces the inherent model-side bias caused by sharing the same parameters across all nodes. Second, we design a self-supervised-learning algorithm that uses a Bayesian neural network to create pseudo labels with uncertainty scores on unlabeled nodes. Pseudo labels increase the chance that low-degree nodes connect to labeled neighbors, thus reducing the bias of GCNs from the data perspective. The uncertainty scores are further exploited to weight pseudo labels dynamically during stochastic gradient descent for SL-DSGC. Experiments on three benchmark datasets show that SL-DSGC not only outperforms state-of-the-art self-training/self-supervised-learning GCN methods, but also dramatically improves GCN accuracy for low-degree nodes.
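Two ideas from the abstract can be illustrated with a minimal NumPy sketch: profiling accuracy per node-degree bucket, and down-weighting pseudo labels by their uncertainty in the loss. This is not the SL-DSGC implementation. The function names `accuracy_by_degree` and `uncertainty_weighted_nll`, the degree bins, the toy star graph, and the weighting rule `1 - uncertainty` are all assumptions made for illustration; the abstract does not specify the actual weighting scheme.

```python
import numpy as np


def accuracy_by_degree(adj, y_true, y_pred, bins):
    """Accuracy per degree bucket [lo, hi); hypothetical helper, not from the paper."""
    degree = adj.sum(axis=1)  # assumes an undirected, unweighted adjacency matrix
    report = {}
    for lo, hi in zip(bins[:-1], bins[1:]):
        mask = (degree >= lo) & (degree < hi)
        if mask.any():  # skip empty buckets
            report[(lo, hi)] = float((y_true[mask] == y_pred[mask]).mean())
    return report


def uncertainty_weighted_nll(probs, pseudo_labels, uncertainty):
    """Negative log-likelihood on pseudo labels, weighted by (1 - uncertainty).

    The weighting rule is an assumption; uncertainty is expected in [0, 1].
    """
    weights = 1.0 - uncertainty
    picked = probs[np.arange(len(pseudo_labels)), pseudo_labels]
    nll = -np.log(np.clip(picked, 1e-12, None))
    return float((weights * nll).sum() / max(weights.sum(), 1e-12))


# Toy star graph: node 0 is the hub (degree 4), nodes 1..4 are leaves (degree 1).
adj = np.zeros((5, 5), dtype=int)
adj[0, 1:] = 1
adj[1:, 0] = 1

y_true = np.array([0, 1, 1, 0, 0])
y_pred = np.array([0, 1, 0, 1, 0])  # made-up predictions, correct on the hub

report = accuracy_by_degree(adj, y_true, y_pred, bins=[1, 2, 5])
print(report)  # {(1, 2): 0.5, (2, 5): 1.0}
```

In this toy case the hub is classified correctly while only half of the leaves are, which mirrors the kind of degree-related gap the abstract describes. A fully uncertain pseudo label (uncertainty 1.0) contributes nothing to `uncertainty_weighted_nll` under the assumed weighting.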
