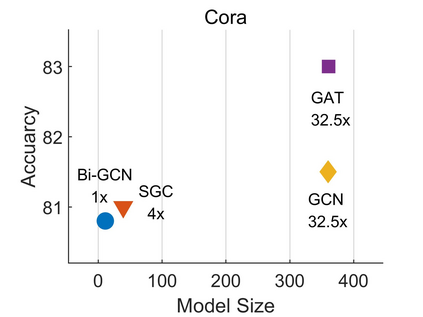

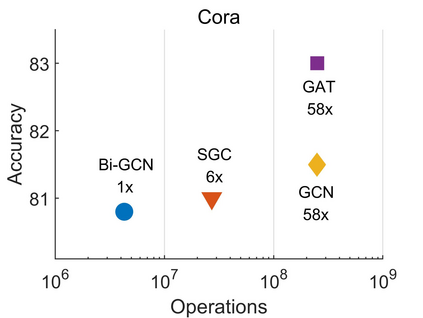

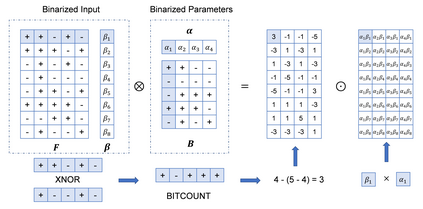

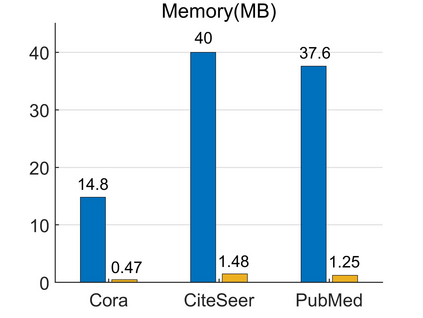

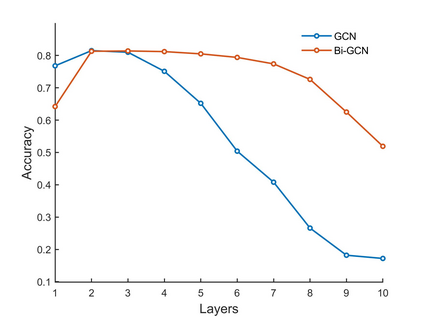

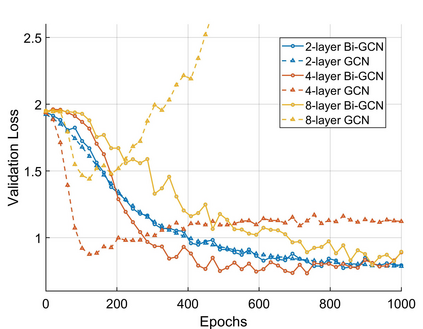

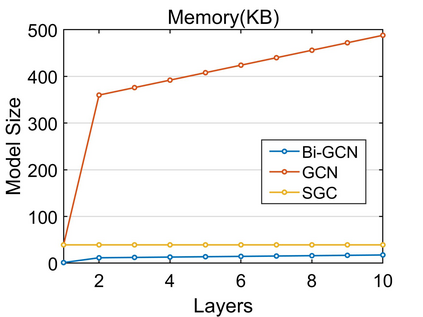

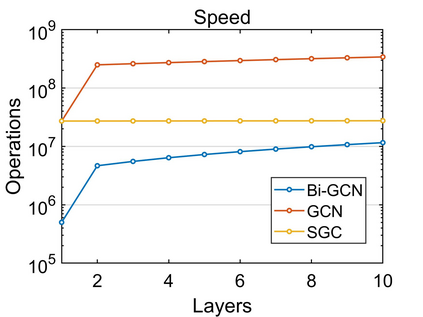

Graph Neural Networks (GNNs) have achieved tremendous success in graph representation learning. Unfortunately, current GNNs usually rely on loading the entire attributed graph into the network for processing. This implicit assumption may not hold when memory resources are limited, especially when the attributed graph is large. In this paper, we propose a Binary Graph Convolutional Network (Bi-GCN), which binarizes both the network parameters and the input node features. In addition, the original matrix multiplications are replaced with binary operations to accelerate computation. According to our theoretical analysis, Bi-GCN can reduce the memory consumption of both the network parameters and the input data by ~31x, and can accelerate inference by ~53x. Extensive experiments demonstrate that Bi-GCN achieves prediction performance comparable to that of the full-precision baselines. Moreover, our binarization approach can be easily applied to other GNNs, as verified in the experiments.
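The idea of binarizing features and weights and replacing multiplications with binary operations can be illustrated with a minimal sketch. It assumes an XNOR-Net-style scheme in which each feature row and each weight column is approximated by a scaling factor alpha (its mean absolute value) times its sign vector; this scheme and all function names (`binarize_row`, `xnor_popcount_dot`, `binary_matmul`, `memory_ratio`) are hypothetical and for illustration only, not the paper's implementation. Signs are packed into integer bitmasks, so the dot product of two ±1 vectors becomes an XNOR followed by a popcount. The `memory_ratio` helper shows one way a figure near 31x can arise: 1 bit per entry plus one 32-bit scaling factor per row of length d, instead of 32 bits per entry.

```python
"""Sketch: XNOR-Net-style binarization with an XNOR/popcount dot product.

Assumption: a vector v is approximated as alpha * sign(v), where alpha is
the mean absolute value of v. This is a common binarization scheme used
here for illustration; it is not claimed to be Bi-GCN's exact method.
"""
from typing import List, Tuple


def binarize_row(row: List[float]) -> Tuple[int, float]:
    """Pack sign(row) into an int bitmask (bit i set means +1); return (bits, alpha)."""
    bits = 0
    for i, x in enumerate(row):
        if x >= 0:  # treat 0 as +1 so every entry maps to {-1, +1}
            bits |= 1 << i
    alpha = sum(abs(x) for x in row) / len(row)
    return bits, alpha


def xnor_popcount_dot(a_bits: int, b_bits: int, n: int) -> int:
    """Dot product of two length-n {-1, +1} vectors stored as bitmasks.

    Matching bits contribute +1 and differing bits -1, so
    dot = matches - (n - matches) = 2 * matches - n.
    """
    mask = (1 << n) - 1
    matches = bin(~(a_bits ^ b_bits) & mask).count("1")  # XNOR, then popcount
    return 2 * matches - n


def binary_matmul(x_rows: List[List[float]], w_cols: List[List[float]]) -> List[List[float]]:
    """Approximate X @ W, with X given as rows and W given as columns.

    Each output entry is alpha_x * alpha_w * (sign(x) . sign(w)), where the
    sign dot product uses XNOR and popcount instead of float multiply-adds.
    """
    n = len(x_rows[0])
    xb = [binarize_row(r) for r in x_rows]
    wb = [binarize_row(c) for c in w_cols]
    return [[ax * aw * xnor_popcount_dot(bx, bw, n) for (bw, aw) in wb]
            for (bx, ax) in xb]


def memory_ratio(d: int) -> float:
    """32-bit floats vs. 1-bit signs plus one 32-bit alpha per row of length d."""
    return 32 * d / (d + 32)


if __name__ == "__main__":
    X = [[0.5, -1.0, 2.0, -0.5], [1.0, 1.0, -1.0, 1.0]]
    W_cols = [[1.0, -1.0, 1.0, -1.0]]
    print(binary_matmul(X, W_cols))  # [[4.0], [-2.0]]
    print(round(memory_ratio(1024), 2))  # 31.03
```

A practical implementation would pack bits into fixed-width machine words and use hardware popcount instructions; Python integers are used here only to keep the sketch self-contained.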

翻译:图表神经网络(Neal Networks)在图形演示学习方面取得了巨大成功。 不幸的是,目前的GNNs通常依赖将整个属性图装入网络进行处理。 这一隐含的假设可能无法满足有限的记忆资源, 特别是当该属性图巨大时。 在本文中,我们建议建立一个二进制图表革命网络(Bi-GCN), 它将网络参数和输入节点功能二进制。 此外, 原始矩阵乘数被修改为加速的二进制操作。 根据理论分析, 我们的Bi- GNNs可以将网络参数和输入数据的内存消耗量减少~ 31x, 加速 ~ 53x 的推断速度。 广泛的实验表明, 我们的Bi-GCN 能够提供与全精度基线可比的预测性。 此外, 我们的二进制方法很容易适用于其他GNs, 实验中已经核实过的其他GNs 。