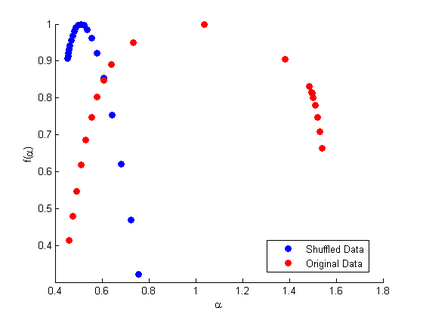

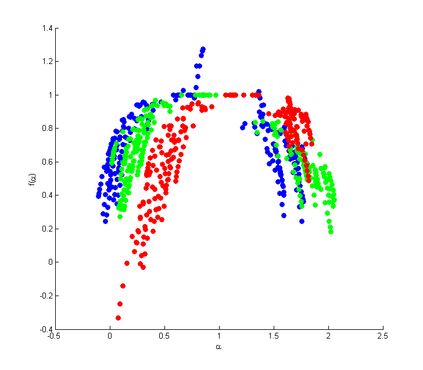

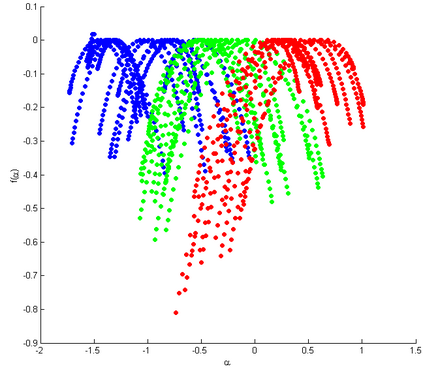

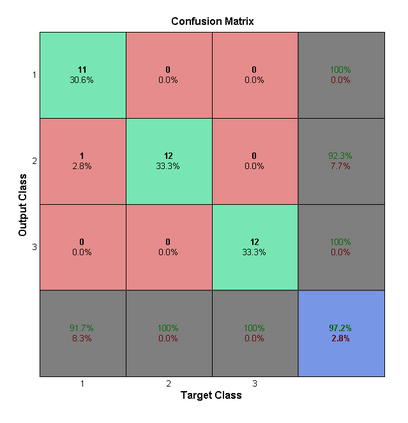

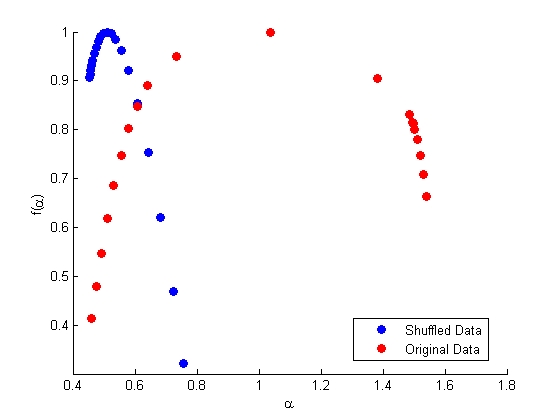

Extracting emotion from speech is an important problem with diverse applications. It therefore requires models that account for a person's speaking style, vocal tract information, timbral qualities and other innate characteristics of the voice. Like most real-world systems, the human speech production system is nonlinear, so speech information should be modelled with nonlinear techniques. In this work we model the articulation system using nonlinear multifractal analysis. The multifractal spectral width and the scaling exponents reveal the complexity of the speech signals studied. For different emotions, the multifractal spectra are clearly distinguishable in the low-fluctuation region. The source characteristics are quantified with nonlinear methods such as Multifractal Detrended Fluctuation Analysis (MFDFA) and Wavelet Transform Modulus Maxima (WTMM). The results of this study show very good emotion clustering.
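As a rough illustration of how a multifractal spectral width can be obtained from a signal, the following is a minimal sketch of the standard MFDFA procedure, not the authors' implementation. The function name `mfdfa`, the scale list, the range of `q`, and the linear detrending order are all assumptions chosen for the example. The input is synthetic white noise rather than speech data.

```python
import numpy as np

def mfdfa(signal, scales, qs, order=1):
    """Sketch of MFDFA: returns h(q), alpha and f(alpha). Hypothetical helper."""
    x = np.asarray(signal, dtype=float)
    qs = np.asarray(qs, dtype=float)
    # Step 1: integrated profile of the mean-subtracted signal.
    profile = np.cumsum(x - x.mean())
    log_fq = np.empty((len(qs), len(scales)))
    for j, s in enumerate(scales):
        # Step 2: split the profile into non-overlapping segments of length s.
        n_seg = len(profile) // s
        segs = profile[:n_seg * s].reshape(n_seg, s).T    # shape (s, n_seg)
        # Step 3: remove a polynomial trend from every segment.
        t = np.arange(s, dtype=float)
        coeffs = np.polyfit(t, segs, order)               # shape (order+1, n_seg)
        trend = np.vander(t, order + 1) @ coeffs          # shape (s, n_seg)
        f2 = np.mean((segs - trend) ** 2, axis=0)         # variance per segment
        # Step 4: q-th order fluctuation function (log form, q = 0 handled apart).
        for i, q in enumerate(qs):
            if q == 0:
                log_fq[i, j] = 0.5 * np.mean(np.log(f2))
            else:
                log_fq[i, j] = np.log(np.mean(f2 ** (q / 2))) / q
    # Step 5: generalized Hurst exponent h(q) = slope of log F_q(s) vs log s.
    log_s = np.log(scales)
    h = np.array([np.polyfit(log_s, row, 1)[0] for row in log_fq])
    # Step 6: Legendre transform to the singularity spectrum f(alpha).
    tau = qs * h - 1
    alpha = np.gradient(tau, qs)
    f_alpha = qs * alpha - tau
    return h, alpha, f_alpha

# Usage on synthetic white noise (assumed test input, not speech).
rng = np.random.default_rng(0)
noise = rng.standard_normal(2 ** 14)
qs = np.arange(-5, 6)
h, alpha, f_alpha = mfdfa(noise, [16, 32, 64, 128, 256, 512], qs)
width = alpha.max() - alpha.min()   # multifractal spectral width
print("h(2) =", h[qs == 2][0], "width =", width)
```

In this formulation, negative `q` weights segments with small fluctuations and positive `q` weights segments with large fluctuations, so the low-fluctuation region of the spectrum corresponds to the negative-`q` side. A wider spectrum indicates a more strongly multifractal signal.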
