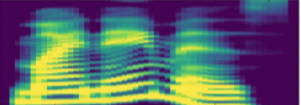

Tacotron-based text-to-speech (TTS) systems synthesize speech directly from text input. Such frameworks typically consist of a feature prediction network that maps character sequences to frequency-domain acoustic features, followed by a waveform reconstruction algorithm or a neural vocoder that generates the time-domain waveform from those features. Because the loss function is usually calculated only on the frequency-domain acoustic features, it does not directly control the quality of the generated time-domain waveform. To address this problem, we propose a new training scheme for Tacotron-based TTS, referred to as WaveTTS, that has two loss functions: 1) a time-domain loss, denoted as the waveform loss, that measures the distortion between the natural and generated waveforms; and 2) a frequency-domain loss that measures the distortion between the natural and generated Mel-scale acoustic features. WaveTTS thereby ensures the quality of both the acoustic features and the resulting speech waveform. To the best of our knowledge, this is the first implementation of Tacotron with a joint time-frequency domain loss. Experimental results show that the proposed framework outperforms the baselines and achieves high-quality synthesized speech.
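The joint objective described in the abstract can be illustrated with a minimal sketch. The abstract does not specify the distortion measures or how the two terms are weighted, so the mean-squared-error measures, the `wave_weight` parameter, and all function names below are assumptions made for illustration only, not the authors' implementation. The sketch uses plain NumPy and computes loss values without gradients.

```python
import numpy as np


def mel_loss(mel_ref, mel_gen):
    """Frequency-domain term: mean squared error between Mel-scale features.

    Both inputs are arrays of the same shape, e.g. (frames, mel_bins).
    MSE is an assumed stand-in for the paper's acoustic feature loss.
    """
    return float(np.mean((mel_ref - mel_gen) ** 2))


def waveform_loss(wav_ref, wav_gen):
    """Time-domain term: mean squared error between two waveforms.

    MSE is an assumed stand-in for the paper's waveform distortion measure.
    The signals are trimmed to the shorter length before comparison.
    """
    n = min(len(wav_ref), len(wav_gen))
    return float(np.mean((wav_ref[:n] - wav_gen[:n]) ** 2))


def joint_loss(mel_ref, mel_gen, wav_ref, wav_gen, wave_weight=1.0):
    """Joint time-frequency loss: Mel term plus weighted waveform term.

    wave_weight is a hypothetical balancing factor, not taken from the source.
    """
    return mel_loss(mel_ref, mel_gen) + wave_weight * waveform_loss(wav_ref, wav_gen)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mel_ref = rng.normal(size=(100, 80))        # natural Mel features
    mel_gen = mel_ref + 0.1 * rng.normal(size=(100, 80))
    wav_ref = rng.normal(size=16000)            # natural waveform
    wav_gen = wav_ref + 0.05 * rng.normal(size=16000)
    print(joint_loss(mel_ref, mel_gen, wav_ref, wav_gen, wave_weight=0.5))
```

In an actual training setup the generated waveform would have to be produced by a differentiable path from the predicted features so that the time-domain term can influence the feature prediction network; that part is outside the scope of this sketch.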
