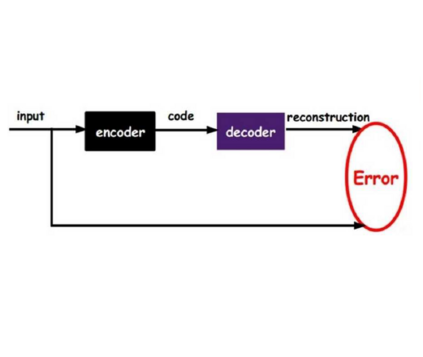

Image and language modeling is of crucial importance for vision-language pre-training (VLP), which aims to learn multi-modal representations from large-scale paired image-text data. However, we observe that most existing VLP methods focus on modeling the interactions between image and text features while neglecting the information disparity between image and text, and thus suffer from focal bias. To address this problem, we propose a vision-language masked autoencoder framework (VLMAE). VLMAE employs visual generative learning, enabling the model to acquire fine-grained and unbiased features. Unlike previous works, VLMAE attends to almost all critical patches in an image, providing a more comprehensive understanding. Extensive experiments demonstrate that VLMAE achieves better performance on various vision-language downstream tasks, including visual question answering, image-text retrieval, and visual grounding, even with up to a 20% pre-training speedup.
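
The abstract does not specify VLMAE's masking strategy or reconstruction target. As a hypothetical illustration of the generic masked-autoencoder idea the framework's name refers to, the sketch below randomly hides a fraction of image patches and scores reconstruction only on the hidden ones, following the standard MAE recipe. The 75% mask ratio, the function names `random_patch_mask` and `masked_mse`, and the flat-list patch representation are all assumptions. The text encoder and cross-modal fusion that make VLMAE a vision-language model are omitted entirely.

```python
import random
from typing import List, Sequence, Tuple

# Hypothetical representation: each patch is a flat list of pixel values.
Patch = List[float]


def random_patch_mask(
    num_patches: int, mask_ratio: float, seed: int = 0
) -> Tuple[List[int], List[int]]:
    """Split patch indices into (visible, masked) by random shuffling.

    Generic MAE-style random masking; not confirmed to be VLMAE's strategy.
    """
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")
    rng = random.Random(seed)  # seeded for reproducibility
    order = list(range(num_patches))
    rng.shuffle(order)
    num_masked = int(round(num_patches * mask_ratio))
    masked = sorted(order[:num_masked])
    visible = sorted(order[num_masked:])
    return visible, masked


def masked_mse(
    pred: Sequence[Patch], target: Sequence[Patch], masked_idx: Sequence[int]
) -> float:
    """Mean squared error over masked patches only; visible patches are ignored."""
    if not masked_idx:
        return 0.0
    total, count = 0.0, 0
    for i in masked_idx:
        for p, t in zip(pred[i], target[i]):
            total += (p - t) ** 2
            count += 1
    return total / count


if __name__ == "__main__":
    # 16 patches (e.g. a 4x4 grid) with an assumed 75% mask ratio.
    visible, masked = random_patch_mask(16, 0.75, seed=1)
    print(len(visible), len(masked))  # 4 12
```

Restricting the loss to masked patches is what forces the model to infer hidden content from the visible context rather than copy its input; in a vision-language setting, the paired text would be an additional source of that context.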
