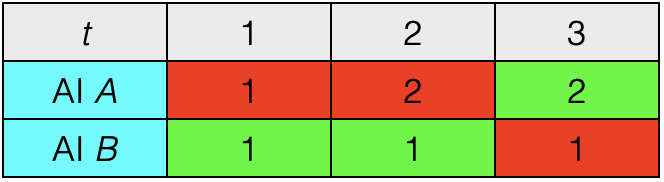

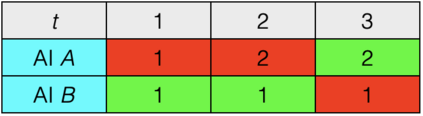

Prevailing methods for assessing and comparing generative AIs incentivize responses that serve a hypothetical representative individual. Evaluating models in these terms presumes homogeneous preferences across the population and engenders selection of agglomerative AIs, which fail to represent the diverse range of interests across individuals. We propose an alternative evaluation method that instead prioritizes inclusive AIs, which provably retain the requisite knowledge not only for subsequent response customization to particular segments of the population but also for utility-maximizing decisions.
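The toy sketch below makes the concern about a "representative individual" concrete. It rests entirely on hypothetical assumptions: two equally sized population segments, three candidate responses (`A`, `B`, `C`), made-up utilities, and illustrative function names. It is not the evaluation method proposed here. It only shows how averaging preferences into a single representative can select a compromise response that neither segment ranks first, and how retaining per-segment preferences allows customization.

```python
from typing import Dict, Tuple

# Hypothetical toy population (illustrative names and numbers only):
# each segment maps to (population weight, utility per candidate response).
Utilities = Dict[str, float]
SEGMENTS: Dict[str, Tuple[float, Utilities]] = {
    "segment_1": (0.5, {"A": 1.0, "B": 0.0, "C": 0.6}),
    "segment_2": (0.5, {"A": 0.0, "B": 1.0, "C": 0.6}),
}


def representative_choice(segments: Dict[str, Tuple[float, Utilities]]) -> Tuple[str, float]:
    """Treat the population as one representative individual: pick the single
    response that maximizes population-weighted average utility."""
    responses = next(iter(segments.values()))[1].keys()
    avg = {r: sum(w * u[r] for w, u in segments.values()) for r in responses}
    best = max(avg, key=avg.get)
    return best, avg[best]


def customized_utility(segments: Dict[str, Tuple[float, Utilities]]) -> float:
    """Expected utility when each segment receives its own best response,
    which is only possible if per-segment preferences are retained."""
    return sum(w * max(u.values()) for w, u in segments.values())


if __name__ == "__main__":
    print(representative_choice(SEGMENTS))
    print(customized_utility(SEGMENTS))
```

With these made-up numbers, the representative evaluation selects `C` (average utility 0.6), whereas serving each segment its own favorite yields an expected utility of 1.0. The gap exists only because the per-segment preferences were kept, which loosely mirrors the knowledge the abstract attributes to inclusive AIs.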
