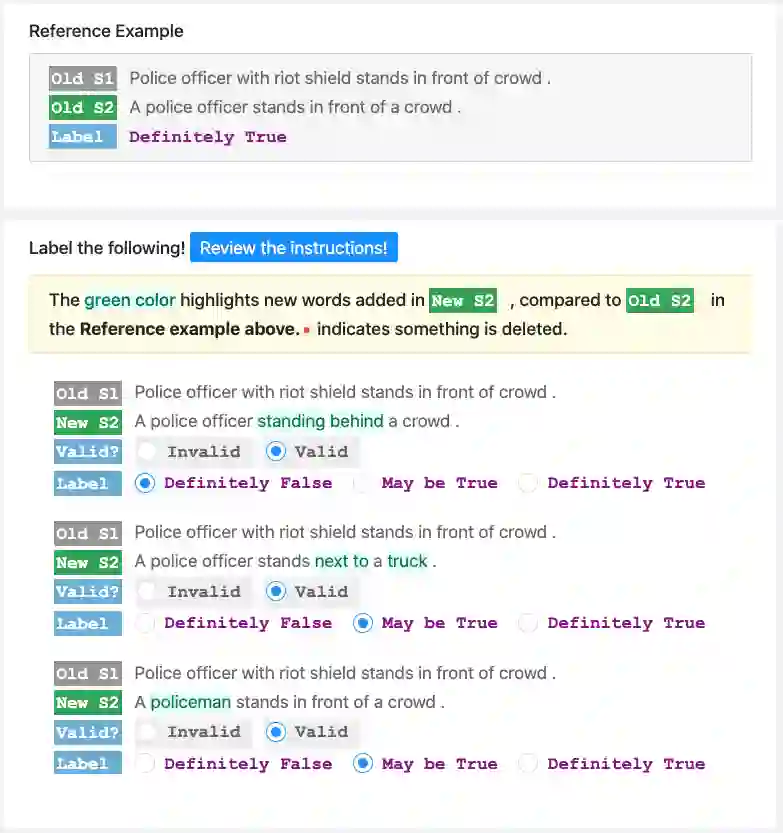

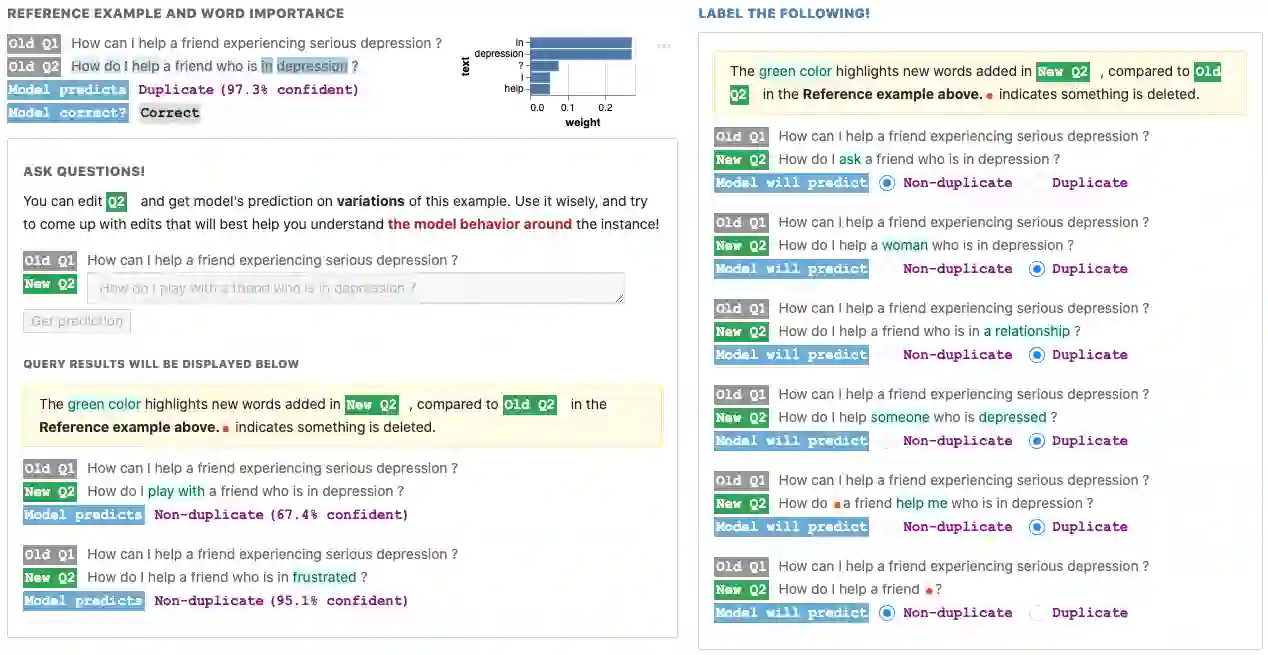

While counterfactual examples are useful for analysis and training of NLP models, current generation methods either rely on manual labor to create very few counterfactuals, or only instantiate limited types of perturbations such as paraphrases or word substitutions. We present Polyjuice, a general-purpose counterfactual generator that allows for control over perturbation types and locations, trained by finetuning GPT-2 on multiple datasets of paired sentences. We show that Polyjuice produces diverse sets of realistic counterfactuals, which in turn are useful in various distinct applications: improving training and evaluation on three different tasks (with around 70% less annotation effort than manual generation), augmenting state-of-the-art explanation techniques, and supporting systematic counterfactual error analysis by revealing behaviors easily missed by human experts.
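To make "control over perturbation types and locations" concrete, the following is a minimal sketch of how a conditioning prompt for a finetuned language model might be assembled from a sentence, a perturbation type, and the token spans to be rewritten. The abstract does not specify the prompt format, so the `<|perturb|>` separator, the bracketed control code, the `[BLANK]` placeholder, and the names `PerturbationRequest` and `build_prompt` are all illustrative assumptions rather than the authors' implementation.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical special tokens; the real model's vocabulary may differ.
BLANK = "[BLANK]"
SEPARATOR = "<|perturb|>"


@dataclass
class PerturbationRequest:
    sentence: str                       # whitespace-tokenized original sentence
    control_code: str                   # perturbation type, e.g. "negation" (assumed label)
    blank_spans: List[Tuple[int, int]]  # token ranges [start, end) the generator may rewrite


def build_prompt(req: PerturbationRequest) -> str:
    """Return 'original SEPARATOR [code] blanked-sentence' as one conditioning string."""
    tokens = req.sentence.split()
    blanked: List[str] = []
    cursor = 0
    for start, end in sorted(req.blank_spans):
        # Reject empty, overlapping, or out-of-range spans.
        if start < cursor or start >= end or end > len(tokens):
            raise ValueError(f"invalid span ({start}, {end})")
        blanked.extend(tokens[cursor:start])  # keep tokens before the span
        blanked.append(BLANK)                 # collapse the span into one placeholder
        cursor = end
    blanked.extend(tokens[cursor:])           # keep the remaining tokens
    return f"{req.sentence} {SEPARATOR} [{req.control_code}] {' '.join(blanked)}"


if __name__ == "__main__":
    request = PerturbationRequest("It is great for kids .", "negation", [(2, 3)])
    print(build_prompt(request))
```

Under these assumptions, the control code selects the perturbation type and the placeholder marks where the change may occur; a generator trained on paired sentences in such a format would be asked to fill in the blank.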
