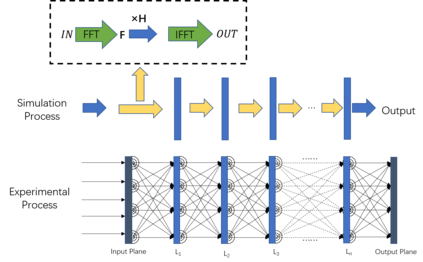

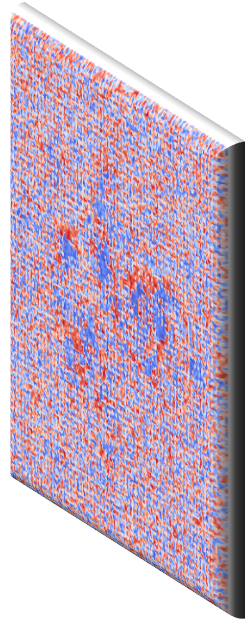

Deep neural networks (DNNs) have substantial computational requirements, which greatly limit their performance in resource-constrained environments. Recently, there have been increasing efforts on optical neural networks and optical-computing-based DNN hardware, which bring significant advantages for deep learning systems in terms of power efficiency, parallelism, and computational speed. Among them, free-space diffractive deep neural networks (D$^2$NNs), which are based on light diffraction, feature millions of neurons in each layer interconnected with neurons in neighboring layers. However, because reconfigurability is difficult to implement, deploying different DNN algorithms requires rebuilding and duplicating the physical diffractive systems, which significantly degrades hardware efficiency in practical application scenarios. Thus, this work proposes a novel hardware-software co-design method that enables robust and noise-resilient multi-task learning in D$^2$NNs. Our experimental results demonstrate significant improvements in versatility and hardware efficiency, and also demonstrate the robustness of the proposed multi-task D$^2$NN architecture under wide noise ranges of all system components. In addition, we propose a domain-specific regularization algorithm for training the proposed multi-task architecture, which can be used to flexibly adjust the desired performance for each task.
