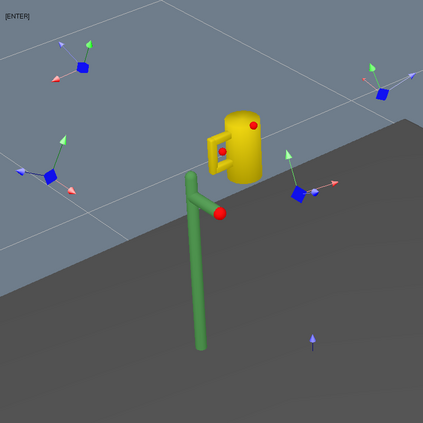

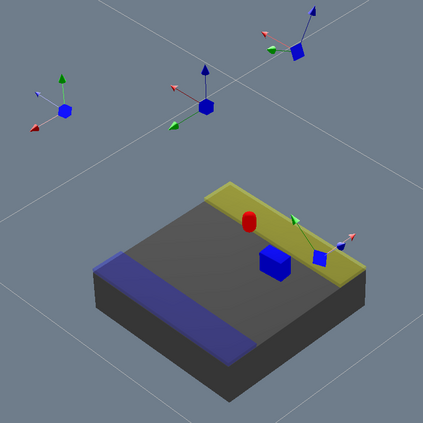

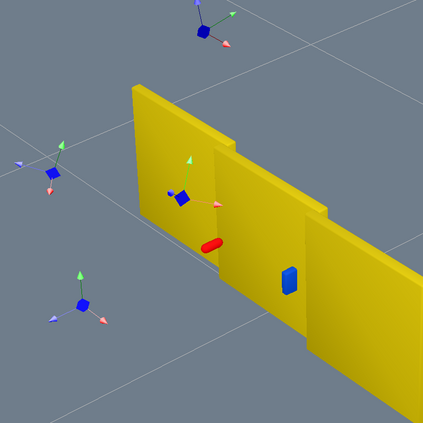

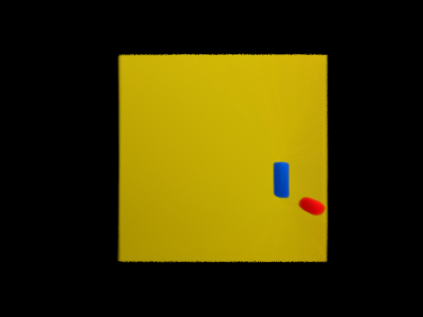

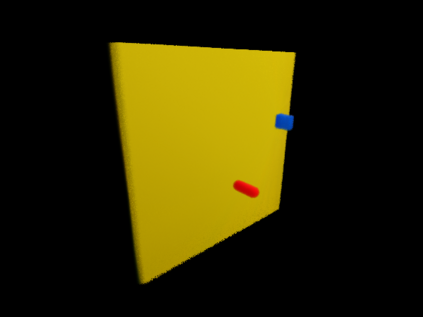

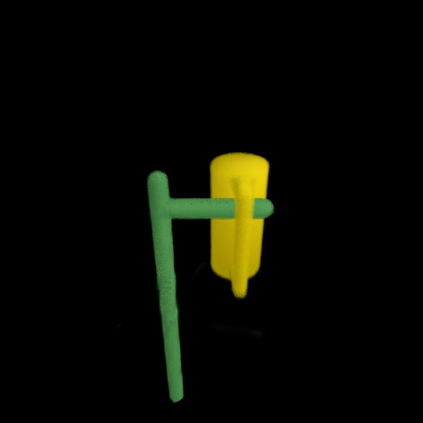

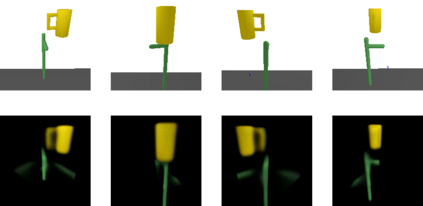

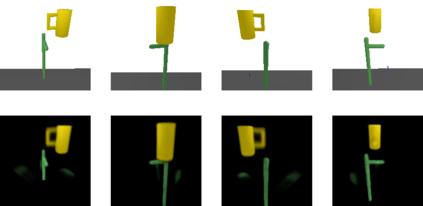

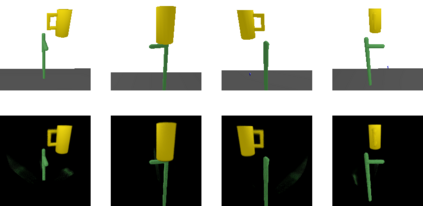

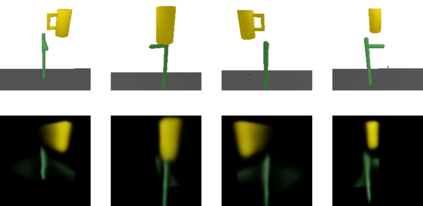

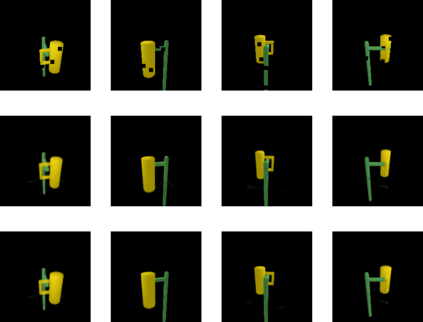

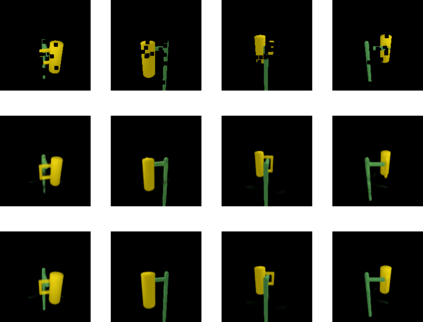

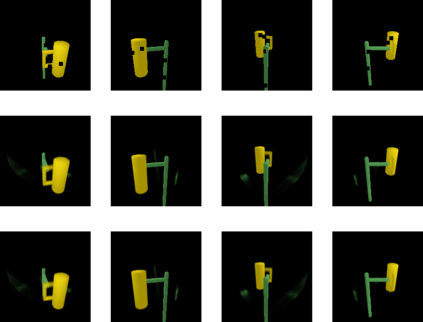

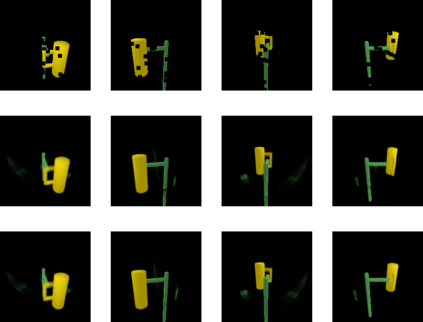

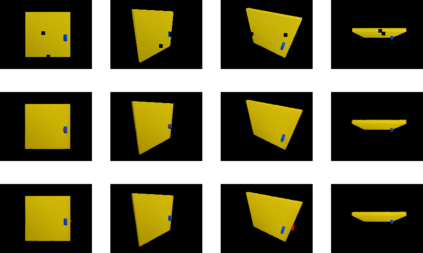

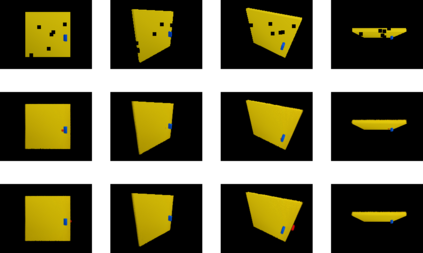

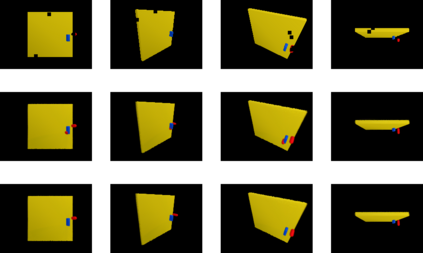

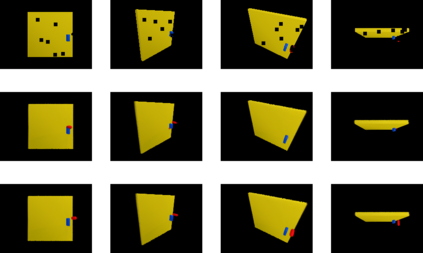

Finding effective representations for training reinforcement learning (RL) agents is a long-standing problem. This paper demonstrates that learning state representations with supervision from Neural Radiance Fields (NeRFs) can improve RL performance compared to other learned representations, and even compared to low-dimensional, hand-engineered state information. Specifically, we propose to train an encoder that maps multiple image observations to a latent space describing the objects in the scene. A decoder built from a latent-conditioned NeRF serves as the supervision signal for learning this latent space. An RL algorithm then operates on the learned latent space as its state representation. We call this approach NeRF-RL. Our experiments indicate that NeRF supervision leads to a latent space better suited for downstream RL tasks involving robotic object manipulation, such as hanging mugs on hooks, pushing objects, or opening doors. Video: https://dannydriess.github.io/nerf-rl
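The pipeline above (multi-view encoder → latent → latent-conditioned NeRF decoder → rendering loss) can be illustrated with a small, dependency-free sketch. It is not the authors' implementation: `encode_views` (averaging per-view feature vectors), `toy_field` (a sphere whose radius and color are read from the latent), `render_ray`, and `reconstruction_loss` are hypothetical stand-ins for learned networks. Only the volume-rendering rule in `render_ray` is the standard NeRF equation; gradient-based training of the encoder and decoder, and the RL agent that would consume `z` as its state, are not shown.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
# A latent-conditioned field: (3D point, latent z) -> (density sigma >= 0, RGB color).
Field = Callable[[Vec3, Sequence[float]], Tuple[float, Vec3]]


def encode_views(view_features: List[List[float]]) -> List[float]:
    """Hypothetical encoder: fuse per-view feature vectors into one latent z
    by averaging (a stand-in for a learned multi-view image encoder)."""
    n = len(view_features)
    dim = len(view_features[0])
    return [sum(f[k] for f in view_features) / n for k in range(dim)]


def toy_field(point: Vec3, z: Sequence[float]) -> Tuple[float, Vec3]:
    """Hypothetical decoder: a solid sphere at the origin with radius z[0]
    and color z[1:4]; density is high inside the sphere and zero outside."""
    inside = math.sqrt(sum(p * p for p in point)) < z[0]
    sigma = 50.0 if inside else 0.0
    return sigma, (z[1], z[2], z[3])


def render_ray(field: Field, z: Sequence[float], origin: Vec3, direction: Vec3,
               near: float, far: float, n_samples: int) -> Vec3:
    """NeRF volume rendering with uniform samples along one ray:
    C = sum_i T_i * (1 - exp(-sigma_i * delta)) * c_i,
    where T_i = exp(-sum_{j<i} sigma_j * delta) is the transmittance."""
    delta = (far - near) / n_samples
    transmittance = 1.0
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * delta  # midpoint of the i-th segment
        point = tuple(o + t * d for o, d in zip(origin, direction))
        sigma, rgb = field(point, z)
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            color[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha  # equals exp(-sigma * delta)
    return tuple(color)


def reconstruction_loss(field: Field, z: Sequence[float], rays, target_colors,
                        near: float, far: float, n_samples: int) -> float:
    """Mean squared photometric error over rays. In training, its gradient
    would update both encoder and decoder parameters (not shown here)."""
    total = 0.0
    for (origin, direction), target in zip(rays, target_colors):
        pred = render_ray(field, z, origin, direction, near, far, n_samples)
        total += sum((p - t) ** 2 for p, t in zip(pred, target))
    return total / len(rays)
```

For example, a ray through the sphere renders approximately the latent color, while a ray that misses it renders black; a latent encoding the wrong color yields a large loss. The design point this mirrors is that the latent `z` is only useful if the decoder can reconstruct the observed views from it, which is what pushes the latent toward describing the objects in the scene.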