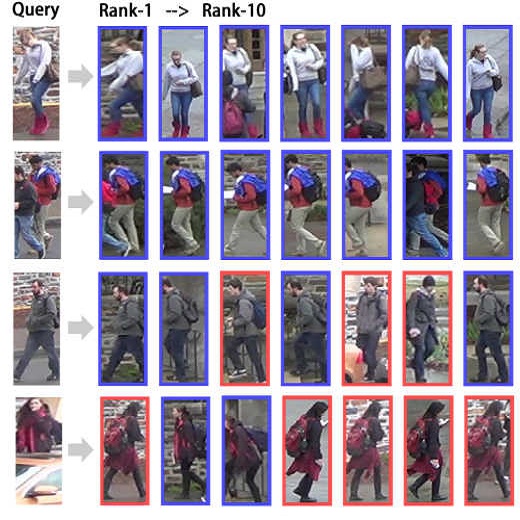

Finding target persons in full scene images from a text description query has important practical applications in intelligent video surveillance. However, unlike real-world scenarios, where bounding boxes are not available, existing text-based person retrieval methods mainly focus on cross-modal matching between query text descriptions and a gallery of cropped pedestrian images. To close this gap, we study the problem of text-based person search in full images and propose a new end-to-end learning framework that jointly optimizes the pedestrian detection, identification, and visual-semantic feature embedding tasks. To take full advantage of the query text, the semantic features are used to instruct the Region Proposal Network to pay more attention to the text-described proposals. In addition, a cross-scale visual-semantic embedding mechanism is used to improve performance. To validate the proposed method, we collect and annotate two large-scale benchmark datasets based on the widely adopted image-based person search datasets CUHK-SYSU and PRW. Comprehensive experiments on the two datasets show that, compared with the baseline methods, our method achieves state-of-the-art performance.
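
The idea of letting the query text steer attention toward text-described proposals can be illustrated with a minimal sketch. This is not the framework's actual mechanism, which the abstract does not specify. It assumes that each proposal already has a feature vector in the same space as the text embedding, and it rescales a proposal's objectness score by its cosine similarity to the text. The function names `cosine` and `rerank_proposals` and the tuple layout are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def rerank_proposals(text_emb, proposals):
    """Re-score proposals by how well they match the text embedding.

    proposals: list of (box, objectness, feature) tuples (hypothetical layout).
    Returns a list of (box, score) sorted by descending score.
    Assumption: score = objectness * (cosine + 1) / 2, chosen only for illustration.
    """
    scored = []
    for box, objectness, feature in proposals:
        weight = (cosine(text_emb, feature) + 1.0) / 2.0  # map [-1, 1] to [0, 1]
        scored.append((box, objectness * weight))
    return sorted(scored, key=lambda item: item[1], reverse=True)

if __name__ == "__main__":
    text_emb = [1.0, 0.0]
    proposals = [
        ((0, 0, 10, 20), 0.9, [0.0, 1.0]),   # high objectness, unrelated to the text
        ((5, 5, 15, 30), 0.6, [1.0, 0.0]),   # lower objectness, matches the text
    ]
    for box, score in rerank_proposals(text_emb, proposals):
        print(box, round(score, 3))
```

In this toy input the proposal that matches the text is ranked first even though its raw objectness is lower, which is the qualitative behavior the abstract describes.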
