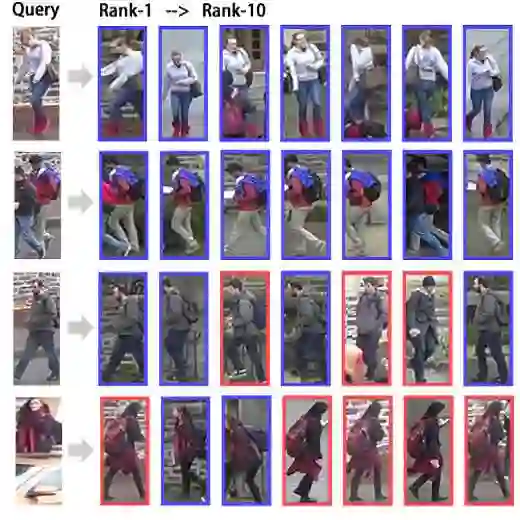

This paper proposes a new generative adversarial network for pose transfer, i.e., transferring a given person from their current pose to a target pose. The generator of the network comprises a sequence of Pose-Attentional Transfer Blocks, each of which transfers the regions it attends to, so that the person image is generated progressively. Compared with those in previous works, our generated person images possess better appearance consistency and shape consistency with the input images, and are thus significantly more realistic-looking. The efficacy and efficiency of the proposed network are validated both qualitatively and quantitatively on Market-1501 and DeepFashion. Furthermore, the proposed architecture can generate training images for person re-identification, alleviating data insufficiency. Code and models are available at: https://github.com/tengteng95/Pose-Transfer.git.
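To make the idea of progressive, attention-guided transfer concrete, the following is a toy sketch in plain Python and not the released implementation. It assumes that each block derives a soft mask in [0, 1] from a pose code and uses it to gate a residual update of an image code; the abstract does not specify the update rule, so the formulas, the fixed weights standing in for learned convolutions, and the names `attention_transfer_block` and `progressive_generator` are all hypothetical.

```python
import math


def sigmoid(x):
    # Numerically stable logistic function expressed through tanh.
    return 0.5 * (1.0 + math.tanh(0.5 * x))


def attention_transfer_block(image_code, pose_code):
    """One hypothetical block (assumed update rule, not the paper's).

    The pose code yields an attention mask; the mask decides how strongly
    each position of the image code is updated.
    """
    # Attention mask in [0, 1], one value per position.
    mask = [sigmoid(s) for s in pose_code]
    # Stand-in for a learned transformation of the image code.
    candidate = list(image_code)
    # Gated residual update: positions with mask near 0 stay unchanged.
    new_image = [p + m * c for p, m, c in zip(image_code, mask, candidate)]
    # Stand-in for refreshing the pose code with the updated image code.
    new_pose = [0.5 * (s + p) for s, p in zip(pose_code, new_image)]
    return new_image, new_pose, mask


def progressive_generator(image_code, pose_code, num_blocks=3):
    """Apply several blocks in sequence, refining the image code step by step."""
    for _ in range(num_blocks):
        image_code, pose_code, _ = attention_transfer_block(image_code, pose_code)
    return image_code


if __name__ == "__main__":
    # Tiny one-dimensional "feature maps" used purely for illustration.
    print(progressive_generator([1.0, 2.0, 3.0], [0.0, -2.0, 2.0]))
```

In this sketch each block only changes the positions its mask selects, which mirrors the abstract's description of blocks that transfer the regions they attend to; a real generator would operate on convolutional feature maps with learned weights.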
