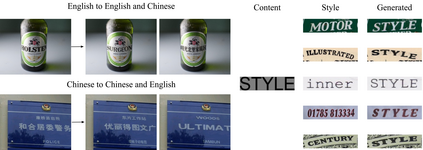

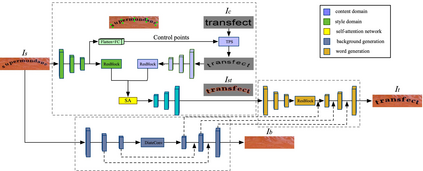

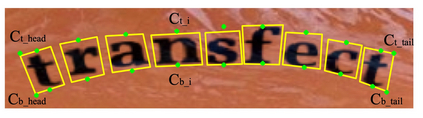

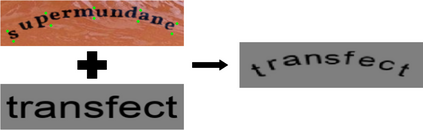

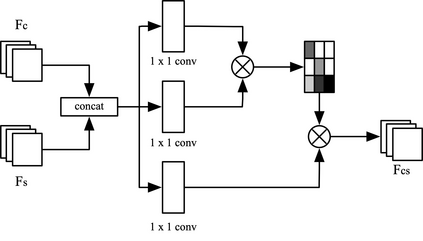

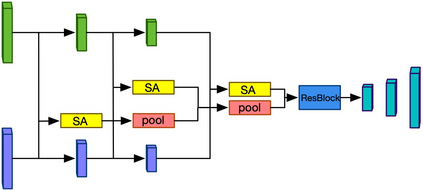

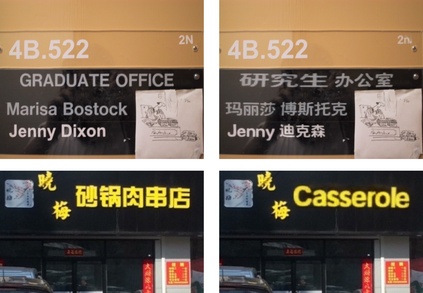

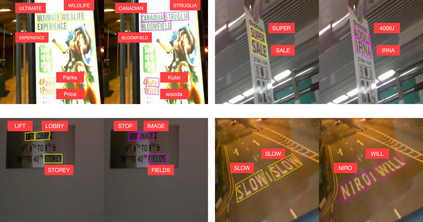

Swapping text in scene images while preserving the original fonts, colors, sizes and background textures is a challenging task due to the complex interplay between these factors. In this work, we present SwapText, a three-stage framework for transferring text across scene images. First, a novel text swapping network is proposed to replace text labels only in the foreground image. Second, a background completion network is learned to reconstruct the background image. Finally, the fusion network combines the generated foreground and background images into the final word image. With the proposed framework, we can manipulate the text in input images even under severe geometric distortion. Qualitative and quantitative results are presented on several scene text datasets, including both regular and irregular text datasets. We conducted extensive experiments to demonstrate the usefulness of our method in applications such as image-based text translation and text image synthesis.
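The three-stage data flow described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the SwapText implementation: all function and parameter names are hypothetical, the alpha mask passed from the first stage to the third is an assumption, and the mean-fill background and per-pixel alpha blending are toy stand-ins for the learned background completion and fusion networks.

```python
from typing import Callable, List, Tuple

# A grayscale image as rows of pixel intensities in [0.0, 1.0].
Image = List[List[float]]


def swap_text(
    image: Image,
    target_text: str,
    text_swapping_net: Callable[[Image, str], Tuple[Image, Image]],
    background_completion_net: Callable[[Image], Image],
    fusion_net: Callable[[Image, Image, Image], Image],
) -> Image:
    """Chain the three stages; each stage is injected as a callable (hypothetical interface)."""
    # Stage 1: render target_text in the source text's style. Returns the foreground
    # image and (assumption) an alpha mask marking which pixels belong to text.
    foreground, alpha = text_swapping_net(image, target_text)
    # Stage 2: erase the original text and reconstruct the background.
    background = background_completion_net(image)
    # Stage 3: combine foreground and background into the final word image.
    return fusion_net(foreground, alpha, background)


def mean_fill_background(image: Image) -> Image:
    """Toy stand-in for background completion: fill every pixel with the mean intensity."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return [[mean for _ in row] for row in image]


def alpha_blend(foreground: Image, alpha: Image, background: Image) -> Image:
    """Toy stand-in for the fusion network: per-pixel alpha compositing."""
    return [
        [a * f + (1.0 - a) * b for f, a, b in zip(f_row, a_row, b_row)]
        for f_row, a_row, b_row in zip(foreground, alpha, background)
    ]


def toy_text_swap(image: Image, target_text: str) -> Tuple[Image, Image]:
    """Toy stand-in for the text swapping network: one white 'text' pixel at the top left.
    It ignores target_text; a real network would render the glyphs of target_text."""
    h, w = len(image), len(image[0])
    foreground = [[1.0] * w for _ in range(h)]
    alpha = [[1.0 if (r, c) == (0, 0) else 0.0 for c in range(w)] for r in range(h)]
    return foreground, alpha


source = [[0.25, 0.25], [0.25, 0.75]]
result = swap_text(source, "EXIT", toy_text_swap, mean_fill_background, alpha_blend)
print(result)  # [[1.0, 0.375], [0.375, 0.375]]
```

Injecting each stage as a callable keeps the pipeline independent of how the individual networks are built, so the toy stand-ins can be replaced by trained models without changing `swap_text`.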

Related content

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): view or manipulate content inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem