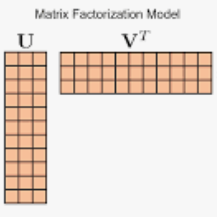

This article proposes new multiplicative updates for nonnegative matrix factorization (NMF) with the $\beta$-divergence objective function. Our new updates are derived from a joint majorization-minimization (MM) scheme, in which an auxiliary function (a tight upper bound of the objective function) is built for the two factors jointly and minimized at each iteration. This contrasts with the classic approach, in which a majorizer is derived for each factor separately. Like the classic approach, our joint MM algorithm yields multiplicative updates that are simple to implement. However, these updates significantly reduce computation time (for equally good solutions), in particular for $\beta$-divergences of notable practical interest, such as the squared Euclidean distance ($\beta = 2$) and the Kullback-Leibler ($\beta = 1$) or Itakura-Saito ($\beta = 0$) divergences. We report experimental results on diverse datasets: face images, an audio spectrogram, hyperspectral data and song play counts. Depending on the value of $\beta$ and on the dataset, our joint MM approach reduces CPU time by about $13\%$ to $78\%$ compared with the classic alternating scheme.
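The classic alternating scheme used as the baseline above can be sketched in NumPy. This is a minimal sketch, assuming the standard per-factor MM multiplicative updates with exponent $\gamma(\beta) = 1/(2-\beta)$ for $\beta < 1$, $1$ for $1 \le \beta \le 2$ and $1/(\beta-1)$ for $\beta > 2$, and strictly positive data. The function names (`beta_divergence`, `mm_exponent`, `classic_mu_nmf`) are hypothetical. The joint MM updates proposed in the article are not reproduced here.

```python
import numpy as np


def beta_divergence(V, V_hat, beta):
    """Sum of elementwise beta-divergences d_beta(V | V_hat); assumes positive entries."""
    if beta == 0:  # Itakura-Saito
        ratio = V / V_hat
        return np.sum(ratio - np.log(ratio) - 1)
    if beta == 1:  # (generalized) Kullback-Leibler
        return np.sum(V * np.log(V / V_hat) - V + V_hat)
    # General case; beta = 2 gives half the squared Euclidean distance.
    return np.sum(
        (V**beta + (beta - 1) * V_hat**beta - beta * V * V_hat ** (beta - 1))
        / (beta * (beta - 1))
    )


def mm_exponent(beta):
    """Exponent gamma(beta) that makes each per-factor update a true MM step."""
    if beta < 1:
        return 1.0 / (2.0 - beta)
    if beta <= 2:
        return 1.0
    return 1.0 / (beta - 1.0)


def classic_mu_nmf(V, rank, beta, n_iter=200, seed=0):
    """Hypothetical helper: alternating MM multiplicative updates for V ~ W @ H."""
    rng = np.random.default_rng(seed)
    F, N = V.shape
    # Positive random initialization keeps every iterate strictly positive.
    W = rng.random((F, rank)) + 0.1
    H = rng.random((rank, N)) + 0.1
    g = mm_exponent(beta)
    history = []
    for _ in range(n_iter):
        # Update H with W fixed (majorizer built for H alone).
        V_hat = W @ H
        H *= ((W.T @ (V * V_hat ** (beta - 2))) / (W.T @ V_hat ** (beta - 1))) ** g
        # Recompute the approximation, then update W with H fixed.
        V_hat = W @ H
        W *= (((V * V_hat ** (beta - 2)) @ H.T) / (V_hat ** (beta - 1) @ H.T)) ** g
        history.append(beta_divergence(V, W @ H, beta))
    return W, H, history
```

For example, `W, H, hist = classic_mu_nmf(V, rank=5, beta=1.0)` fits a KL-NMF to a positive matrix `V`. Because each half-step minimizes a majorizer of the objective, the entries of `hist` should be non-increasing up to floating-point error.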