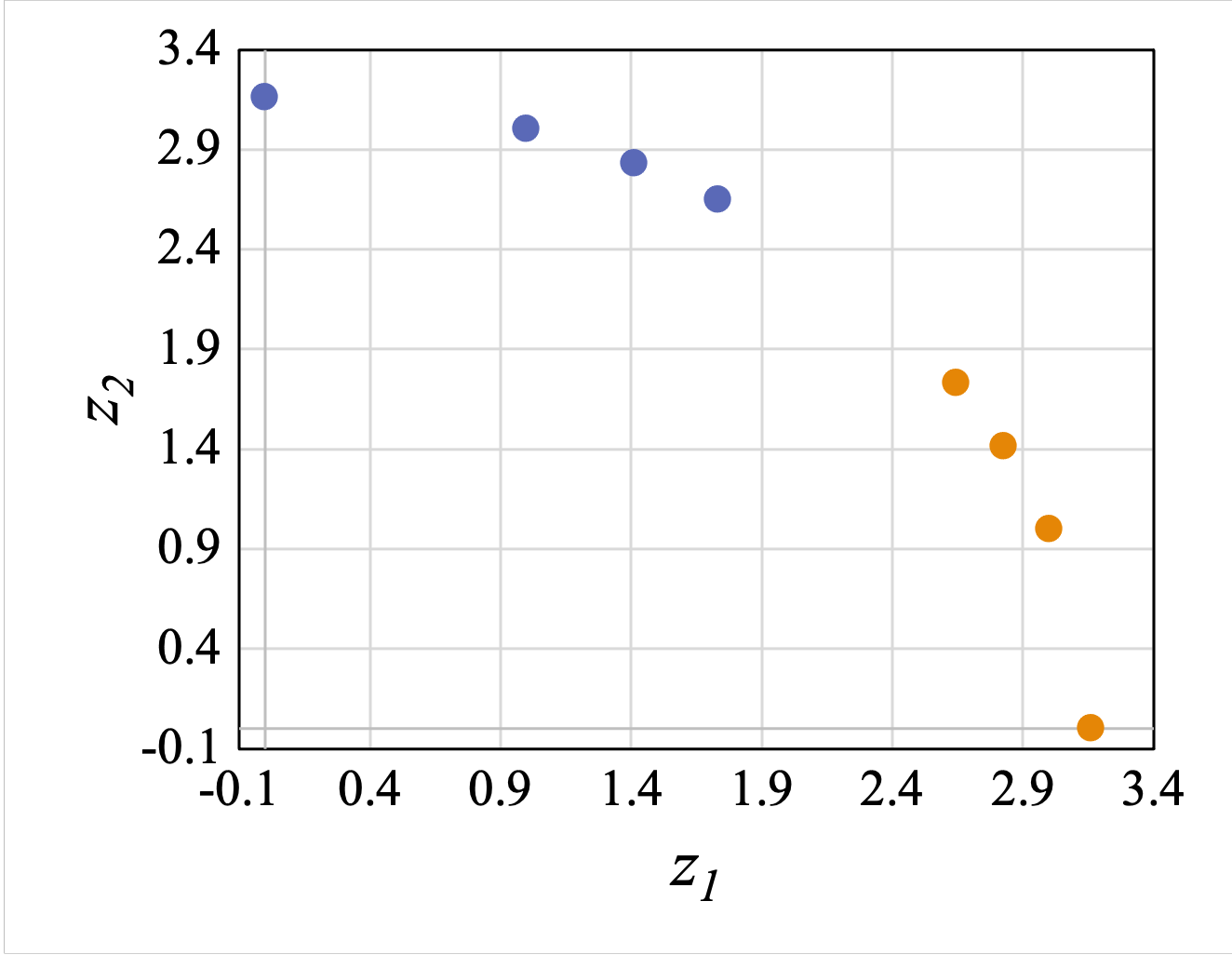

Transferability estimation is an essential tool for selecting a pre-trained model, and which of its layers to transfer, so as to maximize performance on a target task and avoid negative transfer. Existing estimation algorithms either require intensive training on the target task or have difficulty evaluating the transferability of different layers. We propose a simple, efficient, and effective transferability measure named TransRate. With a single pass through the target data, TransRate measures transferability as the mutual information between the features that a pre-trained model extracts from the target examples and their labels. We overcome the challenge of estimating mutual information efficiently by using the coding rate as an effective surrogate for entropy. Theoretical analysis shows that TransRate is closely related to the performance after transfer learning. Despite its extraordinary simplicity (about 10 lines of code), TransRate performs remarkably well in extensive evaluations on 22 pre-trained models and 16 downstream tasks.
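As a rough illustration of the idea, the mutual information $I(Z;Y) = H(Z) - H(Z \mid Y)$ can be approximated by replacing each entropy with a coding rate, giving $R(Z,\varepsilon) - \sum_c \frac{n_c}{n} R(Z_c,\varepsilon)$, where $Z_c$ holds the $n_c$ feature rows of class $c$. The NumPy sketch below assumes the coding-rate form $R(Z,\varepsilon) = \tfrac{1}{2}\log\det\big(I_d + \tfrac{1}{n\varepsilon^2} Z^\top Z\big)$ from the rate-reduction literature. The function names `coding_rate` and `transrate`, the default `eps=0.1`, and the global centering step are illustrative assumptions, not the authors' released code or their exact constants.

```python
import numpy as np


def coding_rate(Z: np.ndarray, eps: float) -> float:
    """Assumed coding rate R(Z, eps) = 1/2 * logdet(I_d + Z^T Z / (n * eps^2)).

    Rows of Z are samples (n x d). This form follows the rate-reduction
    literature; the paper's exact scaling may differ.
    """
    n, d = Z.shape
    gram = Z.T @ Z / (n * eps**2)
    # slogdet avoids overflow/underflow that log(det(...)) can hit for larger d.
    _, logdet = np.linalg.slogdet(np.eye(d) + gram)
    return 0.5 * logdet


def transrate(Z: np.ndarray, y: np.ndarray, eps: float = 0.1) -> float:
    """Hypothetical TransRate-style score: R(Z) - sum_c (n_c / n) * R(Z_c).

    Z: features of the target examples from one layer of a pre-trained model.
    y: integer class labels of those examples.
    """
    Z = np.asarray(Z, dtype=float)
    y = np.asarray(y)
    # Center once over the whole target set (assumed preprocessing step).
    Z = Z - Z.mean(axis=0, keepdims=True)
    n = Z.shape[0]
    rate_total = coding_rate(Z, eps)  # surrogate for H(Z)
    rate_cond = 0.0                   # surrogate for H(Z | Y)
    for c in np.unique(y):
        Zc = Z[y == c]
        rate_cond += (Zc.shape[0] / n) * coding_rate(Zc, eps)
    return rate_total - rate_cond
```

The score needs only the feature matrix `Z` and the labels `y`, so it can be computed for any candidate model or layer after a single forward pass over the target data, with no training. Because $\log\det$ is concave, this score is never negative; it grows when the classes occupy distinct directions in feature space and stays near zero when the labels carry no information about the features.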

翻译:现有估算算法要么需要就目标任务进行密集培训,要么在评估不同层次之间的可转让性方面有困难。我们建议了一个简单、高效和有效的可转让性措施,名为TransRate。在通过目标数据的单程中,TransRate测量了可转让性,作为通过预先培训模式和标签提取的目标示例特征之间的相互信息。我们克服了高效的相互信息估算的挑战,采用了编码率作为取代加密的有效替代物。从理论上分析 TransRate,使之与转移学习后的业绩密切相关。尽管在10行代码中非常简单, TransRate在对22个预先培训模式和16项下游任务进行广泛评价时表现得非常好。