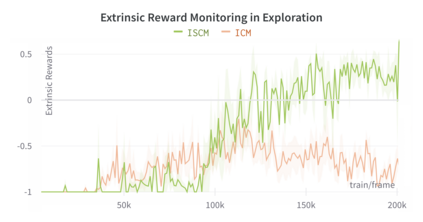

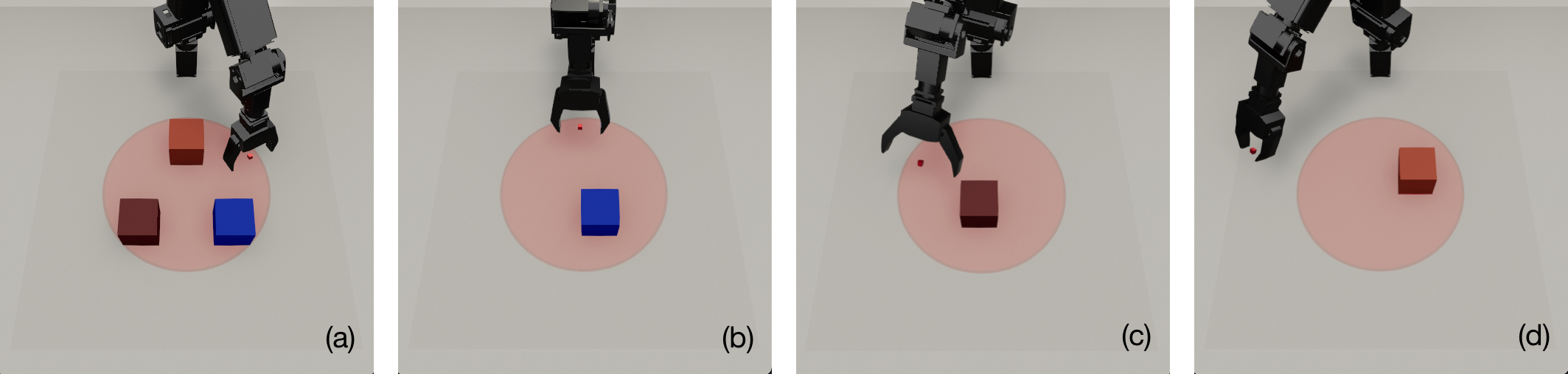

Sound is one of the most informative and abundant modalities in the real world, and it can be sensed robustly and without contact by small, cheap sensors that fit on mobile devices. Although deep learning is capable of extracting information from multiple sensory inputs, sound has seen little use in the control and learning of robotic actions. In unsupervised reinforcement learning, an agent is expected to actively collect experiences and jointly learn representations and policies in a self-supervised way. We build realistic robotic manipulation scenarios with physics-based sound simulation and propose the Intrinsic Sound Curiosity Module (ISCM). The ISCM provides feedback to a reinforcement learner, helping it learn robust representations and rewarding more efficient exploration behavior. We perform experiments with sound enabled during pre-training and disabled during adaptation, and show that representations learned with ISCM outperform those of vision-only baselines, and that the pre-trained policies accelerate learning when applied to downstream tasks.
