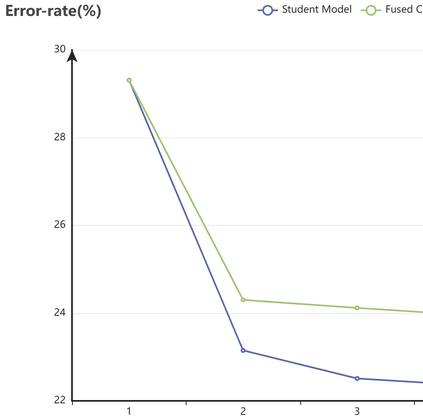

Online knowledge distillation transfers knowledge among all student models, removing the reliance on pre-trained teacher models. However, existing online methods rely heavily on prediction distributions and neglect representational knowledge. In this paper, we propose a novel Multi-scale Feature Extraction and Fusion method (MFEF) for online knowledge distillation. It comprises three key components, Multi-scale Feature Extraction, Dual-attention, and Feature Fusion, which together generate more informative feature maps for distillation. The multi-scale feature extraction uses a divide-and-concatenate operation along the channel dimension to improve the multi-scale representation ability of the feature maps. To obtain more accurate information, we design a dual-attention module that adaptively strengthens the important channels and spatial regions. Finally, feature fusion aggregates and fuses the processed feature maps to assist the training of the student models. Extensive experiments on CIFAR-10, CIFAR-100, and CINIC-10 show that MFEF transfers more beneficial representational knowledge for distillation and outperforms alternative methods across various network architectures.
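
The abstract gives no implementation details, so the following is only a minimal NumPy sketch of how the three stages could fit together, not the authors' method. Every function name here (`multi_scale_extract`, `dual_attention`, `fuse`) is hypothetical. Average pooling at several window sizes stands in for whatever per-group operators the paper uses, the attention weights are parameter-free sigmoids rather than learned modules, and fusion is assumed to be a plain average over students.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def avg_pool_same(x, k):
    """k x k average pooling, stride 1, edge padding; x has shape (C, H, W)."""
    pad = k // 2
    padded = np.pad(x, ((0, 0), (pad, pad), (pad, pad)), mode="edge")
    height, width = x.shape[1:]
    out = np.zeros_like(x, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[:, dy:dy + height, dx:dx + width]
    return out / (k * k)


def multi_scale_extract(x, kernel_sizes=(1, 3, 5, 7)):
    """Divide channels into groups, process each at its own scale, concatenate.

    Assumption: pooling with odd window sizes is a stand-in for the paper's
    per-group operators, which the abstract does not specify.
    """
    groups = np.array_split(x, len(kernel_sizes), axis=0)
    scaled = [avg_pool_same(g, k) for g, k in zip(groups, kernel_sizes)]
    return np.concatenate(scaled, axis=0)


def dual_attention(x):
    """Reweight channels, then spatial positions (parameter-free assumption)."""
    channel_w = sigmoid(x.mean(axis=(1, 2), keepdims=True))  # shape (C, 1, 1)
    x = x * channel_w
    spatial_w = sigmoid(x.mean(axis=0, keepdims=True))       # shape (1, H, W)
    return x * spatial_w


def fuse(feature_maps):
    """Fuse the students' processed maps by averaging (assumed fusion rule)."""
    return np.mean(np.stack(feature_maps, axis=0), axis=0)


# Three hypothetical students, each producing an 8-channel 4x4 feature map.
rng = np.random.default_rng(0)
students = [rng.standard_normal((8, 4, 4)) for _ in range(3)]
fused = fuse([dual_attention(multi_scale_extract(f)) for f in students])
print(fused.shape)  # (8, 4, 4)
```

In an actual online distillation setup, a fused map like this would presumably feed a loss that guides each student; that loss is not described in the abstract and is left out here.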
