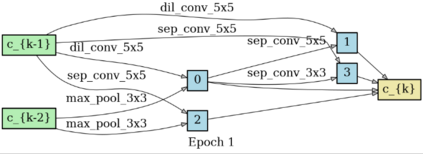

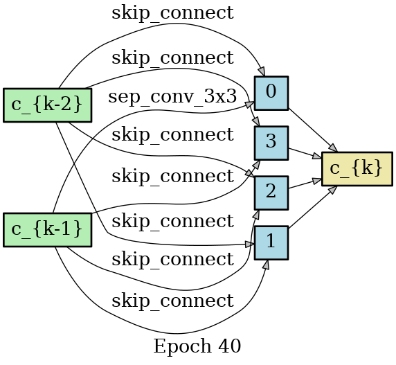

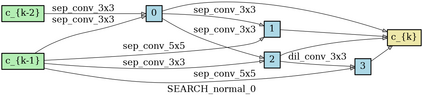

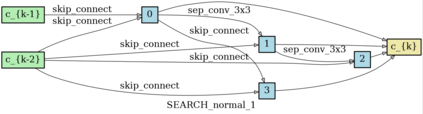

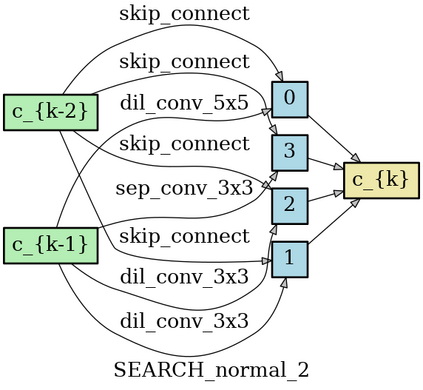

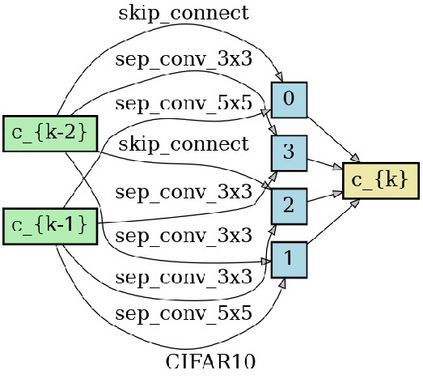

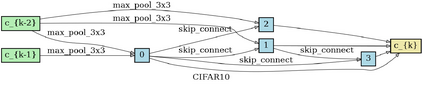

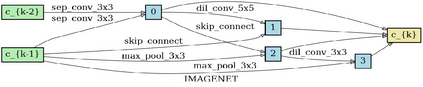

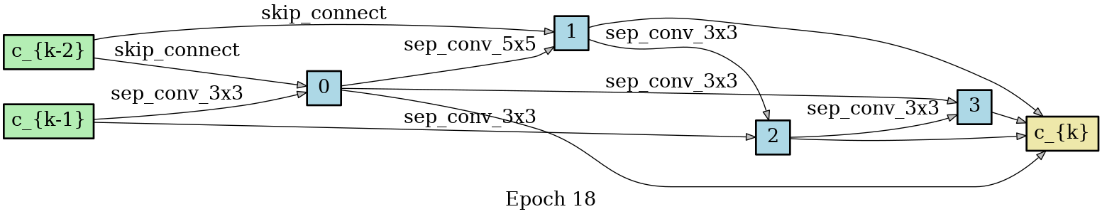

Recently, there has been growing interest in automating neural architecture design, and the Differentiable Architecture Search (DARTS) method makes this feasible within a few GPU days. In particular, a hyper-network called a one-shot model is introduced, over which the architecture can be searched continuously with gradient descent. However, the performance of DARTS is often observed to collapse when the number of search epochs becomes large. Meanwhile, many "skip-connects" are found in the selected architectures. In this paper, we claim that the collapse is caused by cooperation and competition in the bi-level optimization in DARTS, where the architecture parameters and model weights are updated alternately. Therefore, we propose a simple and effective algorithm, named "DARTS+", which avoids the collapse and improves on the original DARTS by "early stopping" the search procedure when a certain criterion is met. We demonstrate that the proposed early stopping criterion is effective in avoiding the collapse issue. We also conduct experiments on benchmark datasets and show the effectiveness of our DARTS+ algorithm, where DARTS+ achieves $2.32\%$ test error on CIFAR10, $14.87\%$ on CIFAR100, and $23.7\%$ on ImageNet. We further remark that the idea of "early stopping" is implicitly included in some existing DARTS variants, which manually set a small number of search epochs, whereas we give an explicit criterion for "early stopping".
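
To make the idea of an explicit stopping criterion concrete, the following is a minimal sketch only. The abstract does not state the criterion itself, so the sketch assumes one plausible form suggested by the observation about skip-connects: stop the search once the cell derived from the architecture parameters contains at least a given number of skip-connects. The operation list `OPS`, the per-edge argmax selection, the function names, and the threshold `max_skips` are all illustrative assumptions, not the authors' implementation.

```python
import math

# Hypothetical, reduced candidate operation set (an assumption for illustration).
OPS = ["none", "skip_connect", "sep_conv_3x3", "max_pool_3x3"]


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def selected_ops(alpha, ops=OPS):
    """For each edge (one row of alpha), pick the highest-weight op other than 'none'.

    This is a simplification: it keeps every edge rather than pruning
    to a fixed number of incoming edges per node.
    """
    candidates = [i for i, name in enumerate(ops) if name != "none"]
    chosen = []
    for row in alpha:
        weights = softmax(row)
        best = max(candidates, key=lambda i: weights[i])
        chosen.append(ops[best])
    return chosen


def should_stop(alpha, max_skips=2, ops=OPS):
    """Assumed criterion: stop once the derived cell has >= max_skips skip-connects."""
    return selected_ops(alpha, ops).count("skip_connect") >= max_skips


# Architecture parameters for a toy 3-edge cell at two points in the search.
alpha_early = [[0.0, 0.1, 0.9, 0.2], [0.0, 0.2, 0.3, 0.8], [0.0, 0.9, 0.1, 0.1]]
alpha_late = [[0.0, 1.5, 0.2, 0.1], [0.0, 1.2, 0.3, 0.1], [0.0, 0.1, 0.9, 0.2]]

for epoch, alpha in enumerate([alpha_early, alpha_late]):
    if should_stop(alpha):
        print(f"stopping search at epoch {epoch}")
        break
```

In a real search loop, a check like `should_stop` would run after each epoch of alternating weight and architecture updates, replacing a manually chosen epoch budget with a condition on the architecture itself.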
