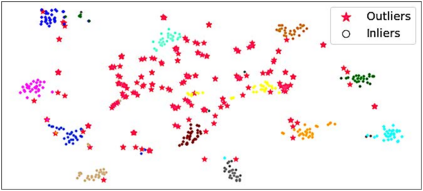

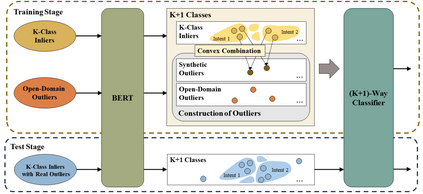

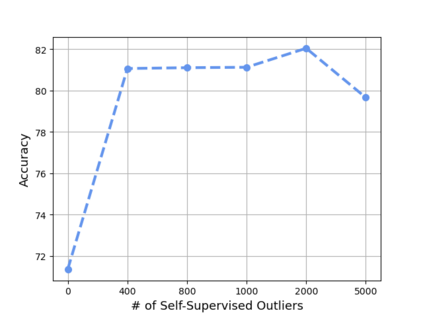

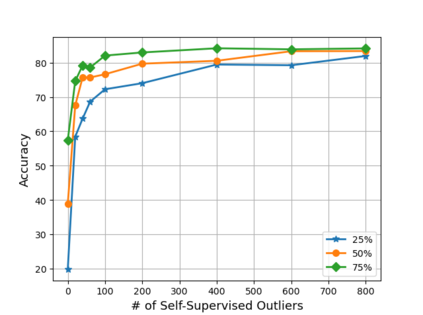

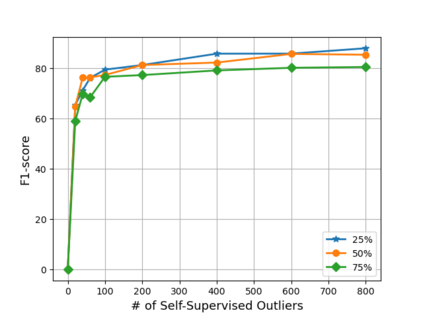

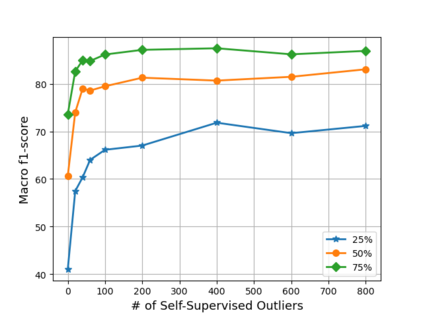

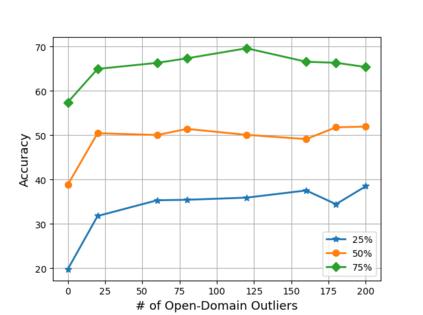

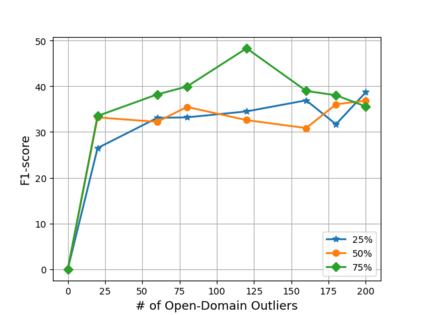

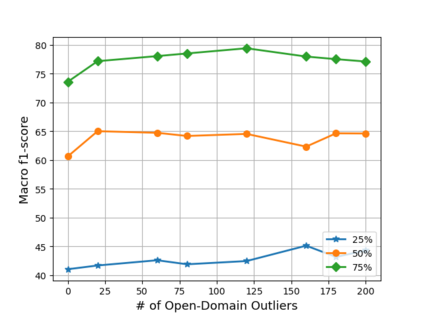

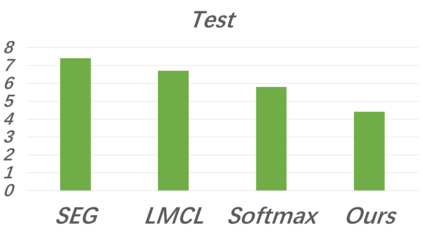

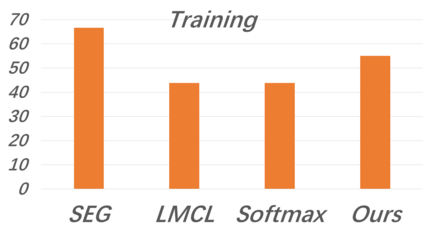

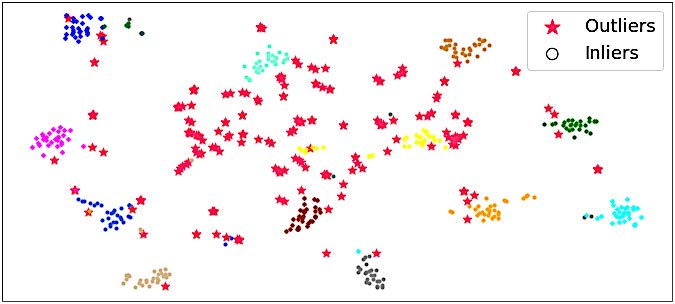

Out-of-scope intent detection is of practical importance in task-oriented dialogue systems. Because the distribution of outlier utterances is arbitrary and unknown at training time, existing methods commonly rely on strong assumptions about the data distribution, such as a mixture of Gaussians, to make inferences. This results in either complex multi-step training procedures or hand-crafted rules, such as confidence-threshold selection, for outlier detection. In this paper, we propose a simple yet effective method to train an out-of-scope intent classifier in a fully end-to-end manner by simulating the test scenario during training. The method requires no assumption about the data distribution and no additional post-processing or threshold setting. Specifically, we construct a set of pseudo outliers in the training stage in two ways: by generating synthetic outliers from inlier features via self-supervision, and by sampling out-of-scope sentences from easily available open-domain datasets. The pseudo outliers are used to train a discriminative classifier that can be applied directly to the test task and generalizes well on it. We evaluate our method extensively on four benchmark dialogue datasets and observe significant improvements over state-of-the-art approaches. Our code has been released at https://github.com/liam0949/DCLOOS.
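To make the pseudo-outlier construction more concrete, the following is a minimal sketch, not the released implementation. The abstract does not say how synthetic outliers are derived from inlier features, so the sketch assumes a convex combination of two inlier feature vectors from different intent classes. The function names (`make_synthetic_outliers`, `build_training_set`), the plain-list feature representation, and the convention of assigning label `K` to the out-of-scope class are all hypothetical choices made for illustration.

```python
import random


def make_synthetic_outliers(features, labels, n_outliers, rng):
    """Create pseudo outliers by mixing inlier features of different classes.

    ASSUMPTION: convex mixing is an illustrative recipe; the abstract only
    says outliers are generated from inlier features via self-supervision.
    """
    if len(set(labels)) < 2:
        raise ValueError("need at least two intent classes to mix across")
    indices = list(range(len(features)))
    outliers = []
    while len(outliers) < n_outliers:
        i, j = rng.sample(indices, 2)
        if labels[i] == labels[j]:
            continue  # only mix utterances from different intent classes
        theta = rng.random()  # mixing weight in [0, 1)
        mixed = [theta * a + (1.0 - theta) * b
                 for a, b in zip(features[i], features[j])]
        outliers.append(mixed)
    return outliers


def build_training_set(features, labels, open_domain_features,
                       n_synthetic, n_open, num_classes, seed=0):
    """Combine inliers with both kinds of pseudo outliers.

    Inliers keep labels 0..K-1; every pseudo outlier gets the extra
    label K (hypothetical convention), so a single (K+1)-way classifier
    can be trained end to end without a confidence threshold.
    """
    rng = random.Random(seed)
    synthetic = make_synthetic_outliers(features, labels, n_synthetic, rng)
    # Features of sentences sampled from an open-domain corpus.
    sampled = rng.sample(open_domain_features, n_open)
    outlier_label = num_classes
    x = list(features) + synthetic + sampled
    y = list(labels) + [outlier_label] * (len(synthetic) + len(sampled))
    return x, y
```

Under these assumptions, the resulting `(x, y)` pairs could be passed to any standard multi-class classifier; at test time, an utterance predicted as class `K` would be treated as out of scope, which is why no separate threshold is needed.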
