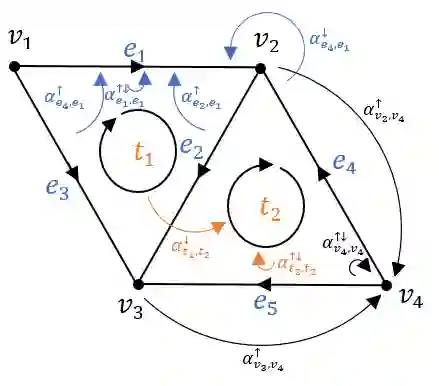

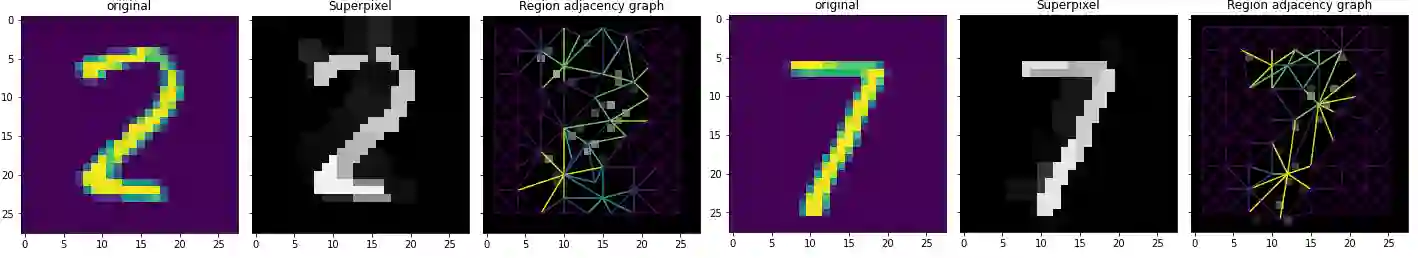

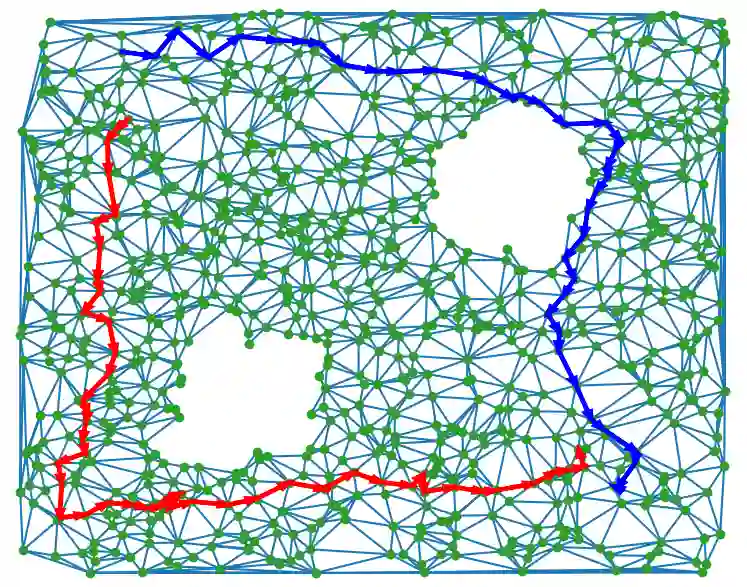

Graph representation learning methods have mostly been limited to modelling node-wise interactions. Recently, there has been increased interest in understanding how higher-order structures can be utilised to further enhance the learning abilities of graph neural networks (GNNs) in combinatorial spaces. Simplicial Neural Networks (SNNs) naturally model such higher-order interactions by performing message passing on simplicial complexes, which are higher-dimensional generalisations of graphs. Nonetheless, the computations performed by most existing SNNs are strictly tied to the combinatorial structure of the complex. Leveraging the success of attention mechanisms in structured domains, we propose Simplicial Attention Networks (SAT), a new type of simplicial network that dynamically weighs the interactions between neighbouring simplices and can readily adapt to novel structures. Additionally, we propose a signed attention mechanism that makes SAT orientation equivariant, a desirable property for models operating on (co)chain complexes. We demonstrate that SAT outperforms existing convolutional SNNs and GNNs on two tasks: image classification and trajectory classification.
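
To make "dynamically weighs the interactions between neighbouring simplices" and "orientation equivariant" concrete, here is a minimal NumPy sketch on the edges (1-simplices) of a small complex. It is not the SAT layer from the paper. The boundary-matrix sign convention, the bilinear attention score e_ij = s_ij ⟨W x_i, W x_j⟩, the restriction to lower adjacency (edges sharing a vertex), and the function names `boundary_matrix`, `signed_lower_adjacency` and `signed_attention_layer` are all hypothetical choices made for illustration. The sign s_ij ∈ {+1, -1} is read off the off-diagonal entries of B1ᵀB1 and records whether two edges' orientations agree at their shared vertex.

```python
import numpy as np


def boundary_matrix(num_nodes, edges):
    """Node-to-edge incidence matrix B1 for oriented edges (tail, head).

    Assumed convention: -1 at the tail, +1 at the head.
    """
    B1 = np.zeros((num_nodes, len(edges)))
    for e, (tail, head) in enumerate(edges):
        B1[tail, e] = -1.0  # edge leaves its tail
        B1[head, e] = 1.0   # edge enters its head
    return B1


def signed_lower_adjacency(B1):
    """Signed adjacency between edges that share a vertex.

    Off-diagonal entries of B1^T B1 are +1 or -1 for edges meeting at a
    vertex (the sign says whether their orientations agree there) and 0
    otherwise; the diagonal is dropped.
    """
    S = B1.T @ B1
    np.fill_diagonal(S, 0.0)
    return S


def signed_attention_layer(X, S, W):
    """Hypothetical attention-weighted message passing over edge features.

    X: (num_edges, d_in) edge features, S: signed adjacency, W: (d_in, d_out).
    """
    H = X @ W
    # Score e_ij = s_ij * <W x_i, W x_j>: flipping edge i negates both
    # W x_i and s_ij, so the scores do not depend on chosen orientations.
    scores = np.where(S != 0, (H @ H.T) * S, -np.inf)
    # Row-wise softmax over each edge's neighbours (non-neighbours get 0).
    row_max = scores.max(axis=1, keepdims=True)
    row_max = np.where(np.isfinite(row_max), row_max, 0.0)
    weights = np.exp(scores - row_max)
    denom = weights.sum(axis=1, keepdims=True)
    alpha = np.divide(weights, denom, out=np.zeros_like(weights), where=denom > 0)
    # Messages keep the orientation sign: m_i = sum_j alpha_ij * s_ij * W x_j.
    messages = (alpha * S) @ H
    return np.tanh(messages)  # tanh is odd, so sign flips pass through


# Triangle 0-1-2 plus a pendant edge 2-3.
edges = [(0, 1), (1, 2), (0, 2), (2, 3)]
B1 = boundary_matrix(4, edges)
S = signed_lower_adjacency(B1)

rng = np.random.default_rng(0)
X = rng.normal(size=(len(edges), 3))
W = rng.normal(size=(3, 2))
out = signed_attention_layer(X, S, W)

# Reverse the orientation of edge 1: negate its column of B1 and its features.
D = np.diag([1.0, -1.0, 1.0, 1.0])
out_flipped = signed_attention_layer(D @ X, signed_lower_adjacency(B1 @ D), W)
print(np.allclose(out_flipped, D @ out))
```

Reversing an edge's orientation negates its feature row and the matching row and column of the signed adjacency. Because the scores are invariant to such flips and tanh is odd, the output for that edge changes sign while every other edge's output is unchanged, which is the equivariance property the abstract refers to. Upper adjacencies (edges sharing a triangle, read off B2B2ᵀ) could be handled by a second attention term of the same form.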
