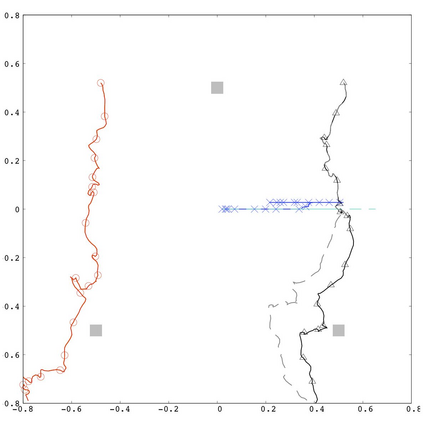

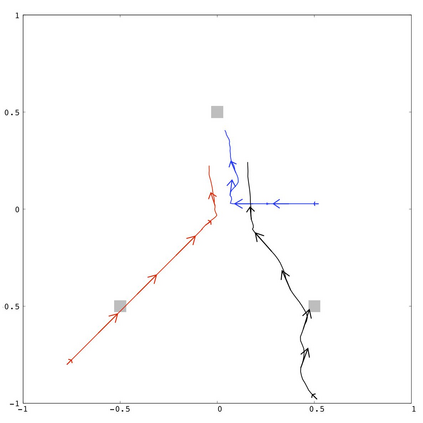

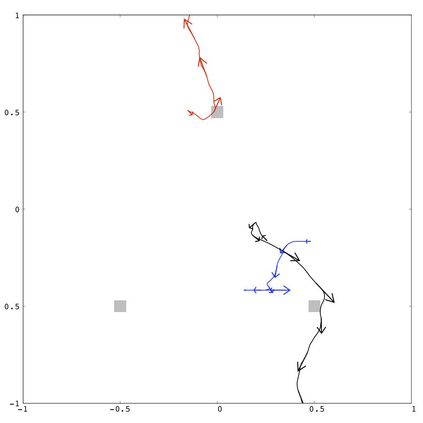

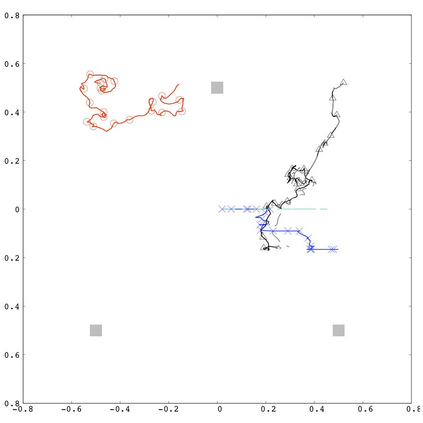

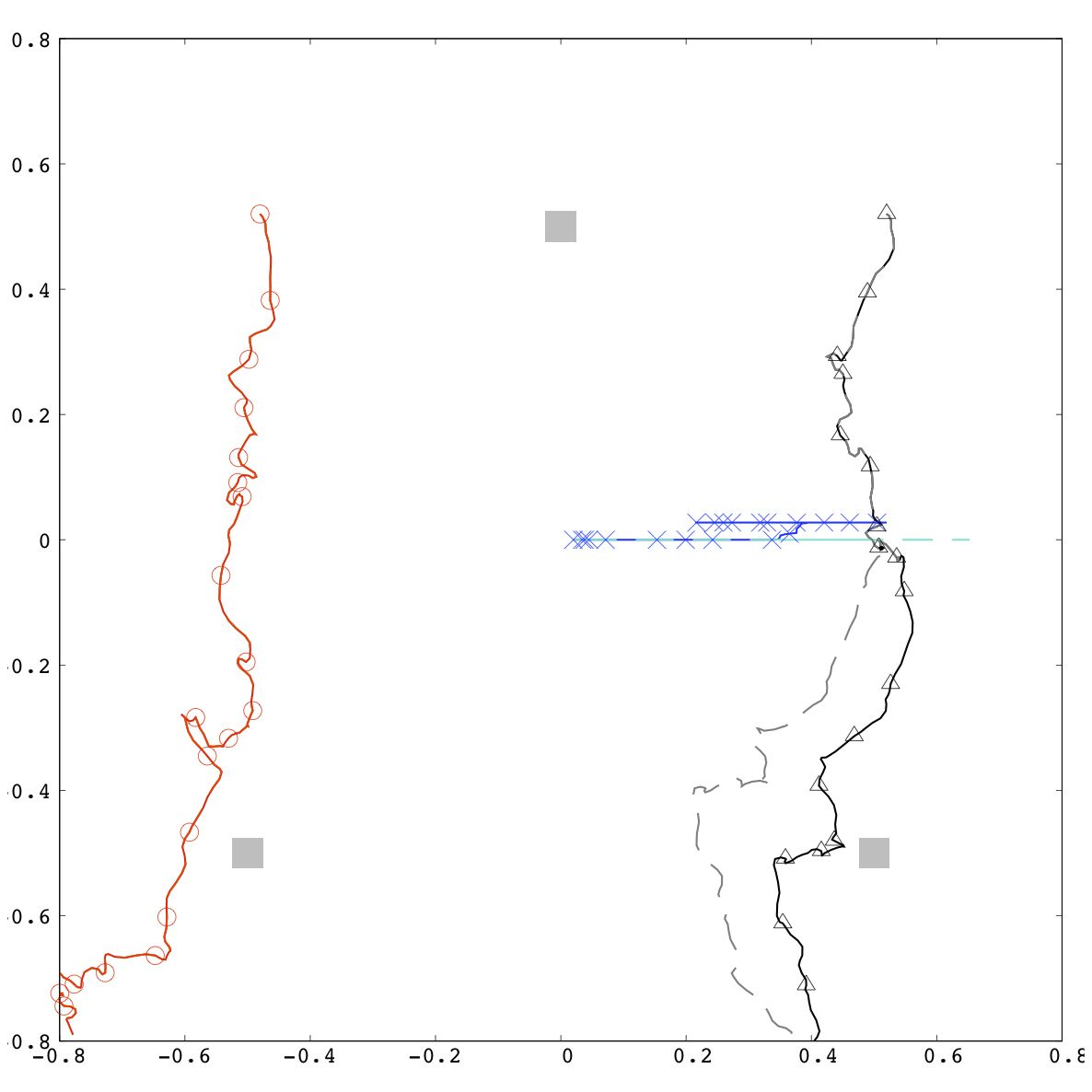

When agents are swarmed to execute a mission, there is often a sudden failure of some of the agents observed from the command base. Solely relying on the communication between the command base and the concerning agent, it is generally difficult to determine whether the failure is caused by actuators (hypothesis, $h_a$) or sensors (hypothesis, $h_s$) However, by instigating collusion between the agents, we can pinpoint the cause of the failure, that is, for $h_a$, we expect to detect corresponding displacements while for $h_a$ we do not. We recommend that such swarm strategies applied to grasp the situation be autonomously generated by artificial intelligence (AI). Preferable actions (${e.g.,}$ the collision) for the distinction will be those maximizing the difference between the expected behaviors for each hypothesis, as a value function. Such actions exist, however, only very sparsely in the whole possibilities, for which the conventional search based on gradient methods does not make sense. To mitigate the abovementioned shortcoming, we (successfully) applied the reinforcement learning technique, achieving the maximization of such a sparse value function. Machine learning was concluded autonomously.The colliding action is the basis of distinguishing the hypothesizes. To pinpoint the agent with the actuator error via this action, the agents behave as if they are assisting the malfunctioning one to achieve a given mission.

翻译:当代理人被吓得执行任务时,从指挥基地观察到的一些代理人往往突然失灵。仅仅依靠指挥基地和代理人之间的沟通,一般很难确定失败是因动因(假冒,美元)还是传感器(假冒,美元美元)造成的(假冒,美元美元)造成的。然而,通过煽动代理人之间的串通,我们可以确定失败的原因,即美元(a),我们期望发现相应的流离失所,而美元(a)则没有。我们建议,为掌握局势而采用的这种温和战略应该由人工智能(AI)自动产生。为了区别,可能的行动(({e.g.美元,美元)或传感器(假冒,美元)是作为价值函数,使每种假设的预期行为之间的差别最大化。但是,这些行动在总体可能性中是很少的,因为基于梯度方法的传统搜索没有意义。为了减轻上述的缺陷,我们(必然)应用了强化策略,通过人工智能智能(AI)来控制局势。为了最大限度地提高机能的机能的精确性,因此,最有可能使机能达到最短的精确性。