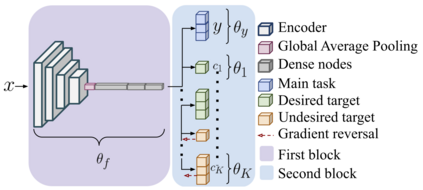

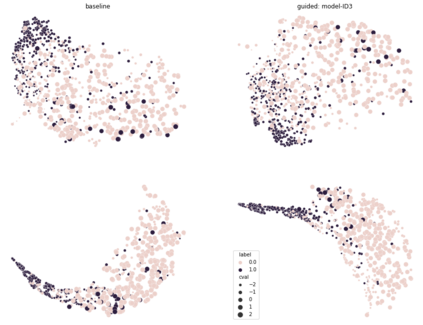

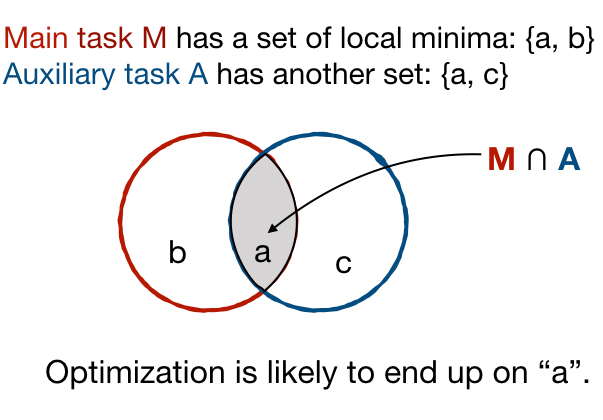

Adopting Convolutional Neural Networks (CNNs) in the daily routine of primary diagnosis requires not only near-perfect precision but also sufficient generalization to data acquisition shifts, as well as transparency. Existing CNN models act as black boxes and give physicians no assurance that the model relies on important diagnostic features. Building on successful existing techniques such as multi-task learning, domain adversarial training and concept-based interpretability, this paper addresses the challenge of introducing diagnostic factors into the training objectives. Here we show that our architecture, by learning end-to-end an uncertainty-based weighted combination of multi-task and adversarial losses, is encouraged to focus on pathology features such as the density and pleomorphism of nuclei (e.g. variations in size and appearance), while discarding misleading features such as staining differences. Our results on breast lymph node tissue show significantly improved generalization in the detection of tumorous tissue, with a best average AUC of 0.89 (0.01) against a baseline AUC of 0.86 (0.005). By linearly probing intermediate representations, an interpretability technique, we also demonstrate that the proposed CNN architecture learns interpretable pathology features such as nuclei density, confirming the increased transparency of this model. This result is a starting point towards building interpretable multi-task architectures that are robust to data heterogeneity. Our code is available at https://bit.ly/356yQ2u.
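The abstract does not spell out the weighting scheme. One common way to learn loss weights end-to-end is homoscedastic-uncertainty weighting, as proposed by Kendall et al. for multi-task learning. In that scheme each loss $L_i$ gets a learnable log-variance $s_i = \log \sigma_i^2$ and contributes $\tfrac{1}{2}e^{-s_i}L_i + \tfrac{1}{2}s_i$ to the total.

The sketch below assumes that formulation, which may differ from the paper's exact one. It uses plain Python with analytic gradients instead of a deep-learning framework and holds the task losses fixed. It also ignores gradient reversal: in domain adversarial training that flips the adversarial gradient reaching the feature extractor, not the weighting itself. All function names and loss values are hypothetical.

```python
import math
from typing import List, Sequence


def uncertainty_weighted_loss(losses: Sequence[float], log_vars: Sequence[float]) -> float:
    """Total loss: sum_i 0.5 * exp(-s_i) * L_i + 0.5 * s_i, with s_i = log(sigma_i^2)."""
    if len(losses) != len(log_vars):
        raise ValueError("need exactly one log-variance per task loss")
    return sum(0.5 * math.exp(-s) * loss + 0.5 * s for loss, s in zip(losses, log_vars))


def log_var_gradients(losses: Sequence[float], log_vars: Sequence[float]) -> List[float]:
    """Analytic d(total)/d(s_i) = -0.5 * exp(-s_i) * L_i + 0.5."""
    return [-0.5 * math.exp(-s) * loss + 0.5 for loss, s in zip(losses, log_vars)]


def fit_log_vars(losses: Sequence[float], lr: float = 0.5, steps: int = 300) -> List[float]:
    """Plain gradient descent on the log-variances while the task losses are held fixed.

    Hypothetical simplification: in real training the s_i would be updated
    jointly with the network weights, and the losses would change every step.
    """
    log_vars = [0.0] * len(losses)  # start with equal weights (sigma_i = 1)
    for _ in range(steps):
        grads = log_var_gradients(losses, log_vars)
        log_vars = [s - lr * g for s, g in zip(log_vars, grads)]
    return log_vars


# Hypothetical per-task losses: tumour classification, nuclei-density regression,
# and the domain (adversarial) head.
task_losses = [0.7, 0.3, 1.2]
fitted = fit_log_vars(task_losses)
weights = [0.5 * math.exp(-s) for s in fitted]  # effective per-task weights
print([round(w, 3) for w in weights])  # → [0.714, 1.667, 0.417]
```

Setting the gradient to zero gives $s_i^* = \log L_i$, so each effective weight $\tfrac{1}{2}e^{-s_i}$ settles at $1/(2L_i)$. Tasks with larger, noisier losses are down-weighted. The $\tfrac{1}{2}s_i$ term prevents the trivial solution of pushing every $s_i$ upward until all weights vanish.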