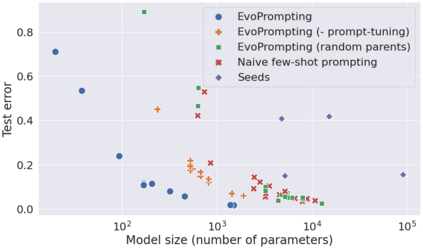

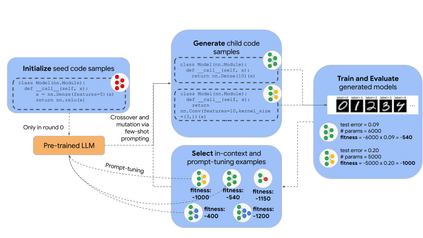

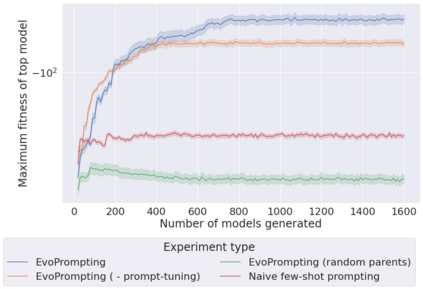

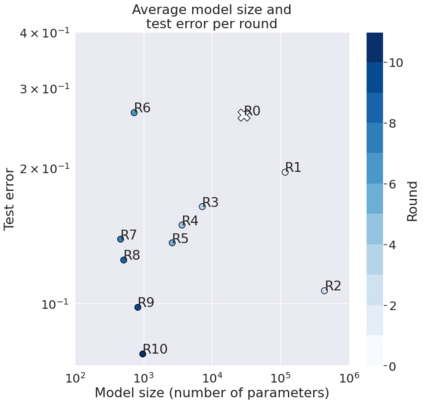

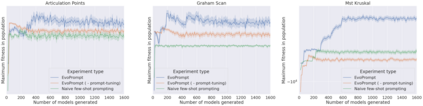

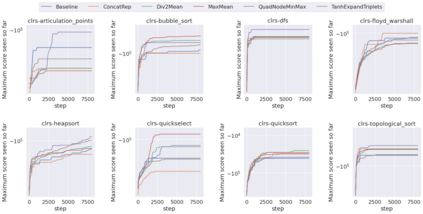

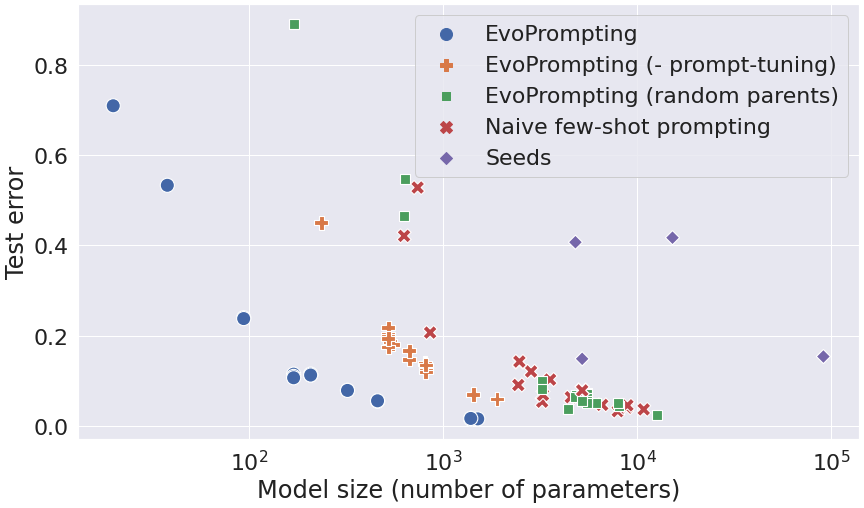

Given the recent impressive accomplishments of language models (LMs) in code generation, we explore the use of LMs as adaptive mutation and crossover operators for an evolutionary neural architecture search (NAS) algorithm. While NAS still proves too difficult a task for LMs to succeed at solely through prompting, we find that combining evolutionary prompt engineering with soft prompt-tuning, a method we term EvoPrompting, consistently finds diverse and high-performing models. We first demonstrate that EvoPrompting is effective on the computationally efficient MNIST-1D dataset, where it produces convolutional architecture variants that outperform both those designed by human experts and those produced by naive few-shot prompting, in terms of both accuracy and model size. We then apply our method to searching for graph neural networks on the CLRS Algorithmic Reasoning Benchmark, where EvoPrompting designs novel architectures that outperform current state-of-the-art models on 21 out of 30 algorithmic reasoning tasks while maintaining similar model size. EvoPrompting is successful at designing accurate and efficient neural network architectures across a variety of machine learning tasks, while also being general enough to adapt easily to tasks beyond neural network design.
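The abstract describes the core loop only at a high level: a language model, prompted with a few parent programs, acts as the mutation and crossover operator inside an evolutionary search. The Python sketch below illustrates that loop under heavy simplifying assumptions and is not the authors' implementation. Candidates are hypothetical lists of convolution widths rather than model code, `mock_lm_generate` is a random stand-in for the language model, `toy_fitness` replaces actual training and evaluation, elitist survival is a choice made for this sketch, and the soft prompt-tuning step is omitted entirely.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    # Hypothetical "architecture": a tuple of conv channel widths.
    channels: tuple


def toy_fitness(c: Candidate) -> float:
    # Toy stand-in for train-and-evaluate (NOT the paper's objective):
    # pretend accuracy peaks at total width 64, and penalize depth
    # as a crude proxy for model size.
    return -abs(sum(c.channels) - 64) - 0.5 * len(c.channels)


def make_prompt(parents):
    # Few-shot prompt: one line per parent example, ending with an
    # open slot for the model to complete.
    lines = [f"channels={','.join(map(str, p.channels))}" for p in parents]
    return "\n".join(lines) + "\nchannels="


def mock_lm_generate(prompt: str, rng: random.Random) -> str:
    # Hypothetical LM stub: parses the few-shot examples, then applies
    # crossover and mutation. A real LM would write new model code.
    examples = [
        [int(x) for x in line.split("=", 1)[1].split(",")]
        for line in prompt.splitlines()
        if line.startswith("channels=") and line != "channels="
    ]
    a, b = rng.sample(examples, 2)
    cut = rng.randint(0, min(len(a), len(b)))
    child = a[:cut] + b[cut:]                              # crossover
    i = rng.randrange(len(child))
    child[i] = max(1, child[i] + rng.choice([-8, 8]))      # mutation
    return ",".join(map(str, child))


def evolve(population, rounds=5, children_per_round=8, num_shots=2, seed=0):
    rng = random.Random(seed)
    for _ in range(rounds):
        children = []
        for _ in range(children_per_round):
            parents = rng.sample(population, num_shots)
            parents.sort(key=toy_fitness)                  # best example last
            text = mock_lm_generate(make_prompt(parents), rng)
            children.append(Candidate(tuple(int(x) for x in text.split(","))))
        # Elitist survival (a choice of this sketch): keep the fittest
        # distinct candidates among parents and children. EvoPrompting
        # also soft-prompt-tunes the LM each round; omitted here.
        pool = set(population) | set(children)
        population = sorted(pool, key=toy_fitness, reverse=True)[: len(population)]
    return population


if __name__ == "__main__":
    seed_pop = [Candidate((16,)), Candidate((8, 8)),
                Candidate((32, 32, 32)), Candidate((4, 4, 4, 4))]
    best = evolve(seed_pop)[0]
    print(best, toy_fitness(best))
```

Because survival here is elitist, the best fitness in the population can never decrease from one round to the next. Swapping `mock_lm_generate` for a call to a real code-generating model, and `toy_fitness` for actual training, would be the obvious next step, but the prompt format above is an illustrative assumption rather than the one used in the paper.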