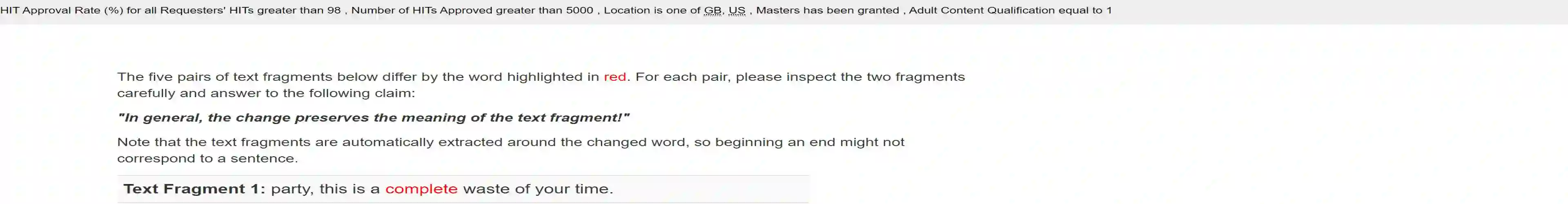

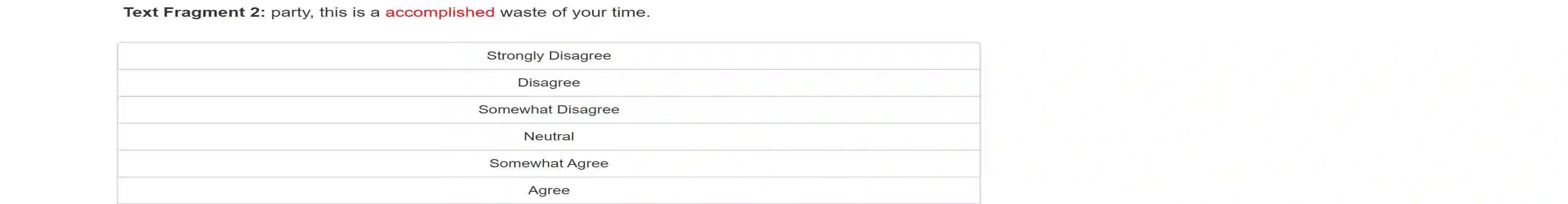

Deep neural networks have taken natural language processing by storm. While this led to incredible improvements across many tasks, it also initiated a new research field that questions the robustness of these networks by attacking them. In this paper, we investigate four word substitution-based attacks on BERT. We combine a human evaluation of individual word substitutions with a probabilistic analysis to show that between 96% and 99% of the analyzed attacks do not preserve semantics, indicating that their success is mainly based on feeding poor data to the model. To further confirm this, we introduce an efficient data augmentation procedure and show that many adversarial examples can be prevented by including data similar to the attacks during training. An additional post-processing step reduces the success rates of state-of-the-art attacks below 5%. Finally, by looking at more reasonable thresholds on constraints for word substitutions, we conclude that BERT is much more robust than research on attacks suggests.
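The abstract does not spell out which constraint the attacks use or how the augmentation works. As a minimal sketch, assuming the common setup in which a word substitution is accepted only if the cosine similarity between the two words' embeddings reaches a threshold, the snippet below shows how that threshold decides which swaps count as valid, and how the same filter could generate attack-like training examples. The toy embedding values, the candidate lists, and the helpers `allowed_substitutions` and `augment` are hypothetical illustrations, not the paper's procedure.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical toy word vectors, for illustration only.
# A real setup would load pretrained embeddings instead.
EMBEDDINGS: Dict[str, List[float]] = {
    "good": [0.9, 0.1, 0.0],
    "great": [0.85, 0.2, 0.05],
    "fine": [0.6, 0.5, 0.1],
    "bad": [-0.8, 0.2, 0.1],
}


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def allowed_substitutions(word: str, candidates: List[str], threshold: float) -> List[str]:
    """Keep candidates whose embedding similarity to `word` is at least `threshold`.

    Words without an embedding are never substituted (assumption).
    """
    if word not in EMBEDDINGS:
        return []
    src = EMBEDDINGS[word]
    return [c for c in candidates
            if c in EMBEDDINGS and cosine(src, EMBEDDINGS[c]) >= threshold]


def augment(tokens: List[str], label: int,
            candidates_for: Dict[str, List[str]],
            threshold: float) -> List[Tuple[List[str], int]]:
    """Create labeled variants that each swap exactly one word for an allowed substitute.

    The original label is kept, mirroring the assumption that a valid
    substitution preserves the meaning of the sentence.
    """
    out: List[Tuple[List[str], int]] = []
    for i, tok in enumerate(tokens):
        for sub in allowed_substitutions(tok, candidates_for.get(tok, []), threshold):
            variant = list(tokens)
            variant[i] = sub
            out.append((variant, label))
    return out


if __name__ == "__main__":
    cands = {"good": ["great", "fine", "bad"]}
    print(allowed_substitutions("good", cands["good"], 0.5))  # ['great', 'fine']
    print(allowed_substitutions("good", cands["good"], 0.9))  # ['great']
    print(augment(["a", "good", "movie"], 1, cands, 0.9))
```

With these toy vectors, raising the threshold from 0.5 to 0.9 drops `fine` as a substitute for `good` and keeps only `great`, while `bad` is rejected at both settings. The design choice is to reuse one filter for both judging attacks and generating training data, so that any looser or stricter constraint applies consistently to each.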
