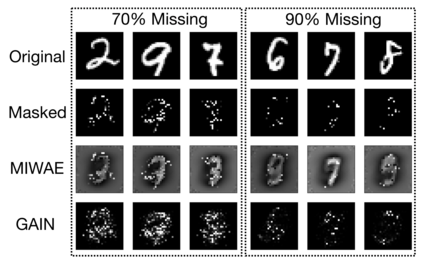

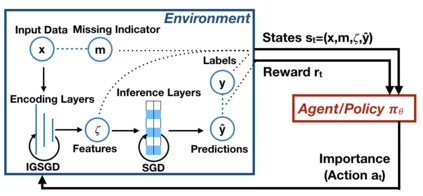

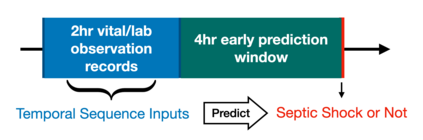

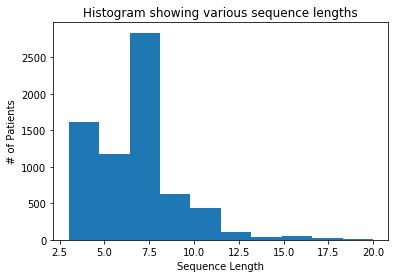

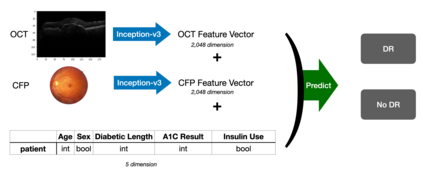

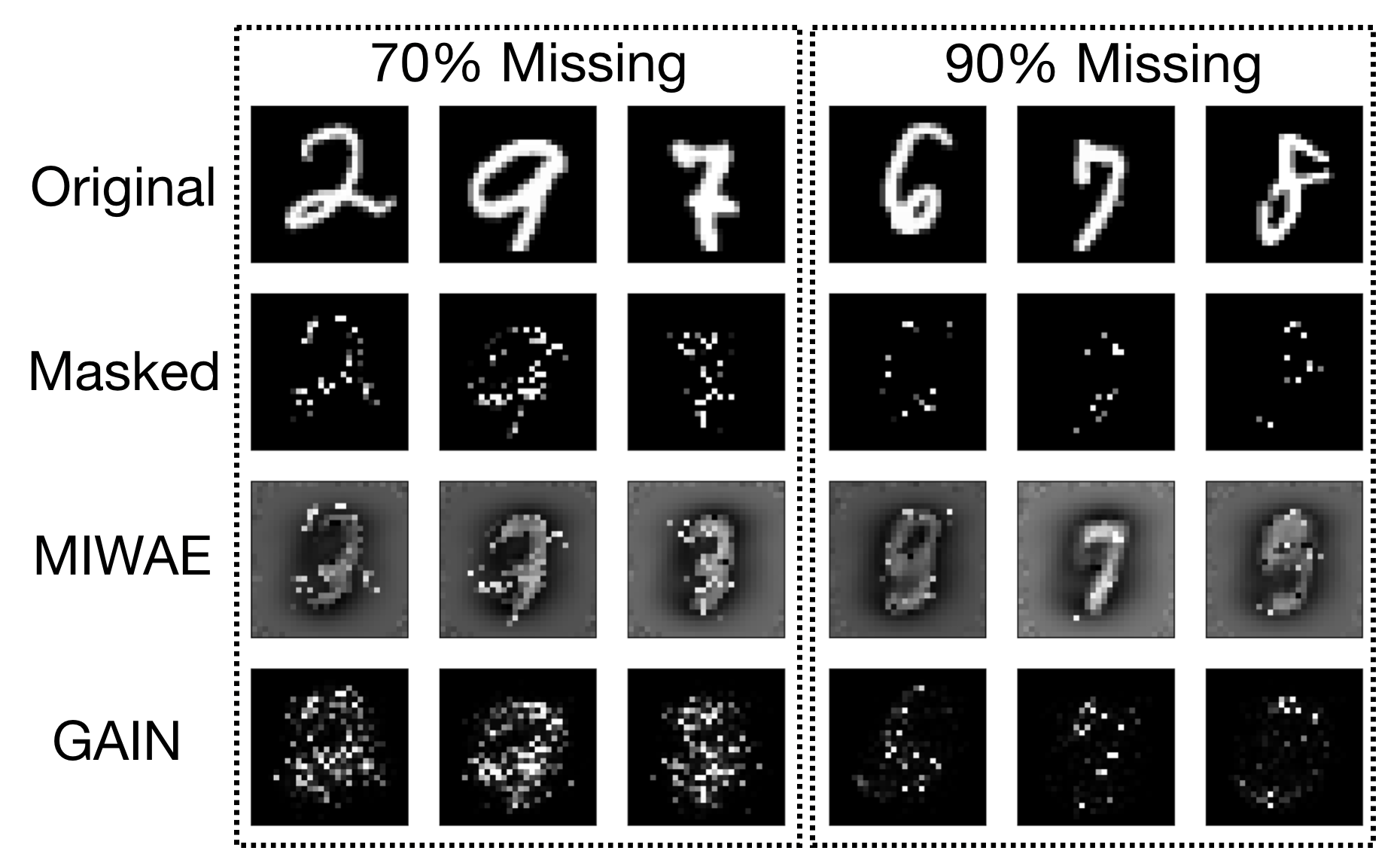

Although recent works have developed methods that estimate (impute) the missing entries in a dataset to facilitate downstream analysis, most depend on assumptions that may not hold in real-world applications and can perform poorly in subsequent tasks, particularly when the data have high missingness rates or come from a small population. More importantly, imputation errors can propagate into the prediction step that follows and bias the gradients used to train the prediction models. In this work, we therefore introduce importance-guided stochastic gradient descent (IGSGD), a method for training multilayer perceptrons (MLPs) and long short-term memory networks (LSTMs) to perform inference directly on inputs containing missing values, without imputation. Specifically, we employ reinforcement learning (RL) to adjust the gradients used to train the models via back-propagation. This not only reduces bias but also allows the models to exploit the information carried by missingness patterns. We evaluate the proposed approach on real-world time-series data (MIMIC-III), tabular data obtained from an eye clinic, and a standard benchmark dataset (MNIST), where our imputation-free predictions outperform the traditional two-step approach of imputing with state-of-the-art methods and then predicting.
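To make the idea of "adjusting the gradients" on inputs with missing values more concrete, here is a toy sketch. It is not the authors' IGSGD algorithm: it trains a logistic-regression model with per-sample importance-weighted SGD directly on feature vectors where `None` marks a missing entry. Two assumptions are made for illustration only. Missing features are skipped in the linear score (numerically equivalent to zero-filling for this linear model), and the per-sample weight comes from a hypothetical fixed function `importance()` of the missingness rate, standing in for the RL agent that the abstract describes.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

# A sample is a feature vector (None marks a missing entry) plus a 0/1 label.
Sample = Tuple[Sequence[Optional[float]], int]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def logit(weights: List[float], bias: float, x: Sequence[Optional[float]]) -> float:
    # Assumption: missing entries are skipped, so only observed features
    # contribute to the linear score; nothing is estimated for them.
    return bias + sum(w * v for w, v in zip(weights, x) if v is not None)


def missing_rate(x: Sequence[Optional[float]]) -> float:
    return sum(v is None for v in x) / len(x)


def importance(x: Sequence[Optional[float]]) -> float:
    # Hypothetical stand-in for the learned gradient adjustment: down-weight
    # samples with more missing entries. In IGSGD an RL agent plays this role.
    return 1.0 - 0.5 * missing_rate(x)


def weighted_sgd(data: List[Sample], dim: int, lr: float = 0.1,
                 epochs: int = 200, seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    weights = [0.0] * dim
    bias = 0.0
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            x, y = data[i]
            p = sigmoid(logit(weights, bias, x))
            # Log-loss gradient w.r.t. the logit, scaled by the per-sample weight.
            g = importance(x) * (p - y)
            for j, v in enumerate(x):
                if v is not None:  # missing features receive no gradient
                    weights[j] -= lr * g * v
            bias -= lr * g
    return weights, bias


def predict(weights: List[float], bias: float, x: Sequence[Optional[float]]) -> int:
    return int(sigmoid(logit(weights, bias, x)) >= 0.5)


# Usage on a tiny, linearly separable toy dataset with missing entries.
data: List[Sample] = [
    ([2.0, 1.0], 1),
    ([1.5, None], 1),
    ([None, 2.0], 1),
    ([-2.0, -1.0], 0),
    ([-1.5, None], 0),
    ([None, -2.0], 0),
]
w, b = weighted_sgd(data, dim=2)
print([predict(w, b, x) for x, _ in data])
```

The design choice being illustrated is that the missingness pattern influences training through the size of each gradient step rather than through filled-in values; a learned policy would replace the fixed `importance()` rule.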

翻译:虽然最近的工作已经开发出一些方法来估计(或估算)数据集中缺失的条目,以便利下游分析,但多数取决于可能与现实应用不相符的假设,并可能在未来工作中表现不佳。如果数据缺少率高或人口少,情况尤其如此。更重要的是,估算错误可以传播到随后的预测步骤中,造成用于培训预测模型的梯度偏差。因此,我们在此工作中引入了指导的随机梯度下降法(IGSGD)的重要性,以培训多层透视器(MLPs)和长期短期记忆(LSTMs),直接从含有缺失值的输入中进行推断,而无需估算。具体地说,我们利用强化学习(RL)来调整用于通过回调来培训模型的梯度。这不仅减少了偏差,而且使模型能够利用缺失模式背后的基本信息。我们测试了在现实世界时间序列(i.i.MIC-III)上拟议的方法,即从一个视像诊所获得的列表数据,以及使用一种标准的数据模型。