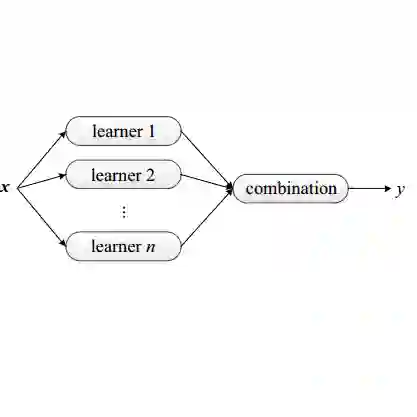

We propose a fundamental theory of ensemble learning that evaluates a given ensemble system with a well-grounded set of metrics. Previous studies used a variant of Fano's inequality from information theory to derive a lower bound on the classification error rate in terms of the accuracy and diversity of the models. We revisit the original Fano's inequality and argue that those studies did not account for the information lost when multiple model predictions are combined into a final prediction. To address this issue, we generalize the previous theory to incorporate this information loss. We further validate and demonstrate the proposed theory empirically through extensive experiments on actual systems. The theory reveals the strengths and weaknesses of systems on each metric, which we expect to advance the theoretical understanding of ensemble learning and to offer insights for designing systems.
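To make the role of the combination step concrete, the following is a minimal sketch on a toy ensemble, not the paper's own formulation or metrics. It assumes three classes, three conditionally independent models with equal accuracy, and plurality voting; the names `toy_ensemble` and `combination_loss` and all parameter values are hypothetical. It computes the conditional entropies H(Y | X) for the full vector of model predictions X and H(Y | Ŷ) for the combined prediction Ŷ, and checks the standard Fano inequality H(Y | Ŷ) ≤ H_b(P_e) + P_e log₂(|Y| − 1).

```python
import itertools
import math
from collections import defaultdict

N_CLASSES = 3  # assumed toy setting, not taken from the paper


def entropy(dist):
    """Shannon entropy in bits of a dict mapping outcomes to probabilities."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def binary_entropy(p):
    """Binary entropy H_b(p) in bits."""
    return entropy({0: p, 1: 1.0 - p})


def conditional_entropy(joint):
    """H(Y | Z) in bits for a joint distribution keyed by (z, y)."""
    marginal_z = defaultdict(float)
    for (z, _), p in joint.items():
        marginal_z[z] += p
    return entropy(joint) - entropy(marginal_z)


def toy_ensemble(n_classes=N_CLASSES, n_models=3, acc=0.7):
    """Enumerate a toy ensemble exactly.

    Assumptions: uniform labels; each model is correct with probability
    `acc`, errs uniformly otherwise, and models are independent given Y.
    Predictions are combined by plurality vote (ties go to the smallest label).
    """
    wrong = (1.0 - acc) / (n_classes - 1)
    joint_xy = defaultdict(float)     # P(X = x, Y = y)
    joint_yhat_y = defaultdict(float)  # P(Y_hat = y_hat, Y = y)
    p_err = 0.0
    for y in range(n_classes):
        for x in itertools.product(range(n_classes), repeat=n_models):
            p = 1.0 / n_classes
            for xi in x:
                p *= acc if xi == y else wrong
            y_hat = max(range(n_classes), key=lambda c: (x.count(c), -c))
            joint_xy[(x, y)] += p
            joint_yhat_y[(y_hat, y)] += p
            if y_hat != y:
                p_err += p
    return joint_xy, joint_yhat_y, p_err


joint_xy, joint_yhat_y, p_err = toy_ensemble()
h_y_given_x = conditional_entropy(joint_xy)
h_y_given_yhat = conditional_entropy(joint_yhat_y)

# Information about Y that the vote discards (illustrative quantity only).
combination_loss = h_y_given_yhat - h_y_given_x

# Right-hand side of the standard Fano inequality for the final prediction.
fano_rhs = binary_entropy(p_err) + p_err * math.log2(N_CLASSES - 1)

print(f"H(Y|X)     = {h_y_given_x:.4f} bits")
print(f"H(Y|Y_hat) = {h_y_given_yhat:.4f} bits")
print(f"loss       = {combination_loss:.4f} bits")
print(f"P_e        = {p_err:.4f}, Fano RHS = {fano_rhs:.4f} bits")
```

Because Ŷ is a deterministic function of X, the data processing inequality guarantees that `combination_loss` is non-negative. A bound written in terms of H(Y | X) alone can therefore be looser than one written in terms of H(Y | Ŷ), which is the kind of gap the abstract refers to.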
