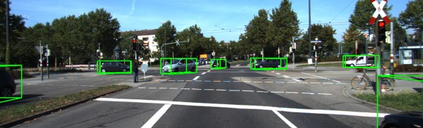

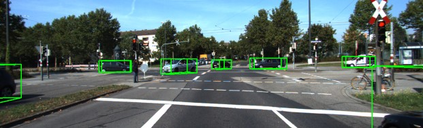

Multi-object tracking (MOT) with camera-LiDAR fusion requires accurate object detection, affinity computation and data association in real time. This paper presents an efficient multi-modal MOT framework for autonomous driving applications, with online joint detection and tracking schemes and robust data association. The contributions of this work are: (1) an end-to-end deep neural network for joint object detection and correlation using 2D and 3D measurements; (2) a robust affinity computation module that computes occlusion-aware appearance and motion affinities in 3D space; and (3) a comprehensive data association module that jointly optimizes over detection confidences, affinities and start-end probabilities. Experimental results on the KITTI tracking benchmark demonstrate the superior performance of the proposed method in both tracking accuracy and processing speed.
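The abstract does not say how the joint optimization in contribution (3) is solved. A minimal sketch of one way it could be posed, assuming a linear-assignment formulation: each existing track either links to a detection (score `c_j * a_ij`, detection confidence times affinity) or terminates (score `e_i`, its end probability), and each unlinked detection starts a new track (score `c_j * s_j`, confidence times start probability). The scoring rule, the function names `associate` and `hungarian`, and the choice of solver are all hypothetical; the paper may use a different formulation, such as min-cost flow or mixed-integer programming.

```python
"""Hypothetical sketch: joint data association over detection confidences,
track-detection affinities and start/end probabilities, solved exactly as a
linear assignment problem on an augmented (m + n) x (m + n) cost matrix."""
from typing import List, Tuple

BIG = 1e9  # cost that forbids an assignment (finite, so no inf - inf = nan)


def hungarian(cost: List[List[float]]) -> List[int]:
    """Minimum-cost assignment for a square matrix (O(n^3) Kuhn-Munkres with
    potentials). Returns col_of_row[i] = column assigned to row i."""
    n = len(cost)
    u = [0.0] * (n + 1)  # row potentials (1-indexed)
    v = [0.0] * (n + 1)  # column potentials (1-indexed)
    p = [0] * (n + 1)    # p[j] = row matched to column j (0 = none)
    way = [0] * (n + 1)  # predecessor column on the augmenting path
    for i in range(1, n + 1):
        p[0] = i
        j0 = 0
        minv = [float("inf")] * (n + 1)
        used = [False] * (n + 1)
        while True:  # grow the alternating tree until a free column is reached
            used[j0] = True
            i0, delta, j1 = p[j0], float("inf"), 0
            for j in range(1, n + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(n + 1):  # update potentials
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        while j0:  # flip the augmenting path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    col_of_row = [0] * n
    for j in range(1, n + 1):
        col_of_row[p[j] - 1] = j - 1
    return col_of_row


def associate(
    affinity: List[List[float]],  # affinity[i][j] in [0, 1]: track i vs detection j
    det_conf: List[float],        # detection confidence c_j
    start_prob: List[float],      # probability that detection j starts a new track
    end_prob: List[float],        # probability that track i ends in this frame
) -> Tuple[List[Tuple[int, int]], List[int], List[int]]:
    """Return (links, ended_tracks, started_detections) maximizing total score."""
    m, n = len(end_prob), len(det_conf)
    size = m + n
    # Rows: m tracks, then n dummy rows (one per detection).
    # Cols: n detections, then m dummy cols (one per track).
    # Scores are negated into costs because hungarian() minimizes.
    cost = [[BIG] * size for _ in range(size)]
    for i in range(m):
        for j in range(n):
            cost[i][j] = -det_conf[j] * affinity[i][j]  # link track i -> det j
        cost[i][n + i] = -end_prob[i]                  # track i terminates
    for j in range(n):
        cost[m + j][j] = -det_conf[j] * start_prob[j]  # det j starts a new track
        for k in range(m):
            cost[m + j][n + k] = 0.0                   # dummy-dummy filler
    col = hungarian(cost)
    links = [(i, col[i]) for i in range(m) if col[i] < n]
    ended = [i for i in range(m) if col[i] >= n]
    started = [j for j in range(n) if col[m + j] == j]
    return links, ended, started


if __name__ == "__main__":
    # Two tracks, two detections with a clear one-to-one match.
    print(associate([[0.9, 0.1], [0.2, 0.8]], [0.95, 0.9], [0.1, 0.1], [0.1, 0.1]))
    # -> ([(0, 0), (1, 1)], [], [])
```

Padding to an (m + n)-square matrix lets every track choose between linking and terminating, and every detection between linking and starting a new track, in one exact solve. A greedy matcher would be cheaper, but it could not trade a strong link against the start/end alternatives globally.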

翻译:多目标跟踪(MOT)与照相机-LiDAR聚合,要求实时对天体探测、亲近度计算和数据关联得出准确结果,本文件介绍了一个高效的多模式模拟跟踪框架,包括在线联合探测和跟踪计划以及自动驾驶应用程序的稳健数据协会,这项工作的新颖之处包括:(1) 开发一个端到端深神经网络,使用2D和3D测量方法对天体进行联合探测和关联;(2) 开发一个稳健的亲近计算模块,以计算3D空间的外观和运动亲近度;(3) 开发一个综合数据联系模块,在探测信心、亲近性和起始概率方面联合优化;KITTI跟踪基准的实验结果显示,拟议方法在跟踪准确度和处理速度两方面的优异性表现。