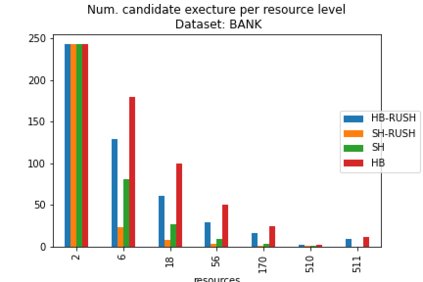

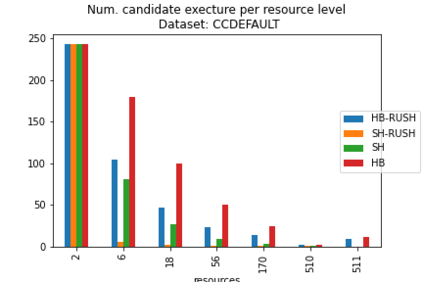

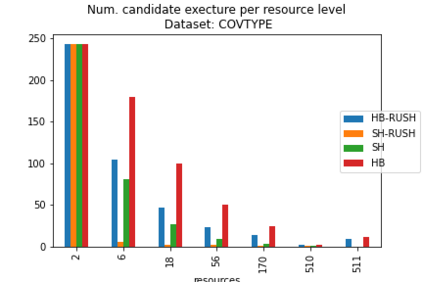

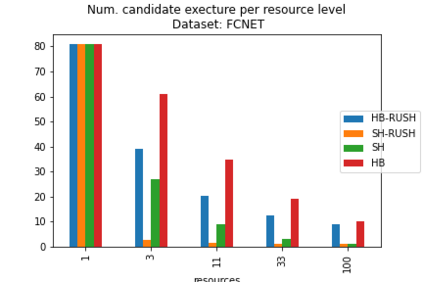

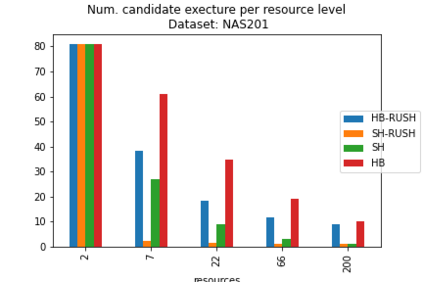

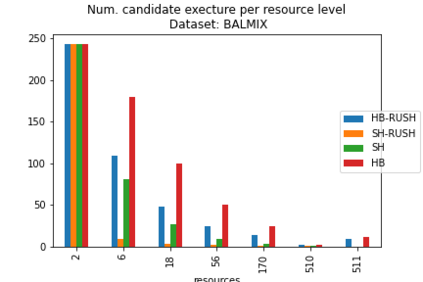

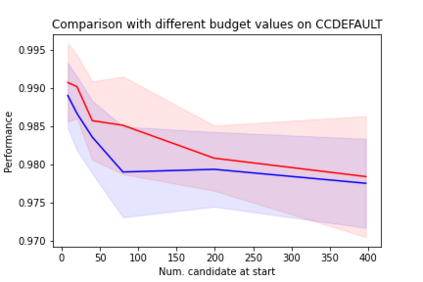

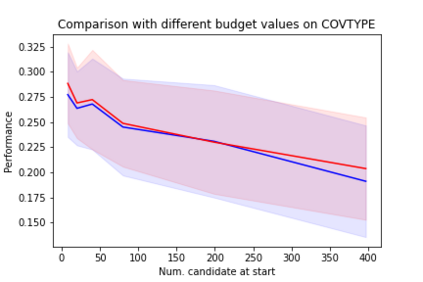

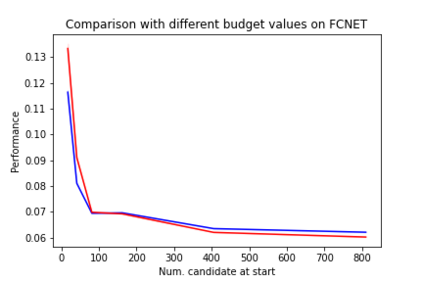

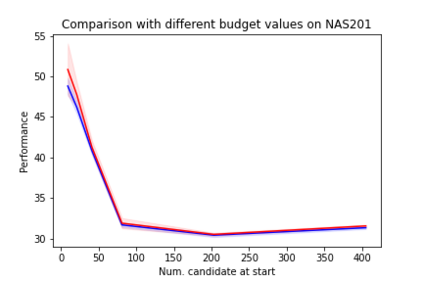

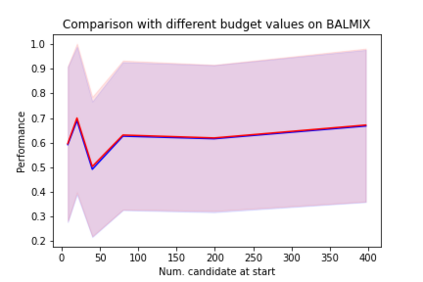

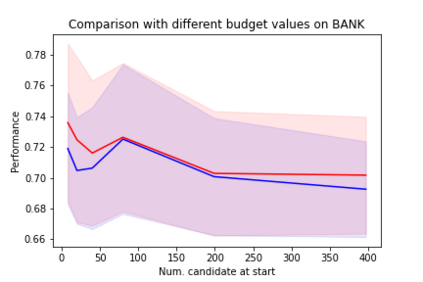

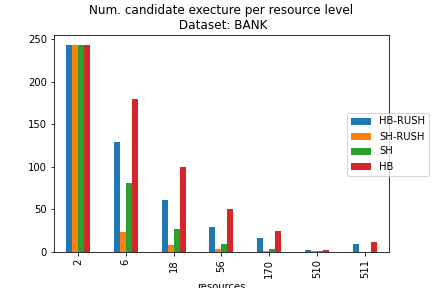

In this work we consider the problem of repeated hyperparameter and neural architecture search (HNAS). We propose an extension of Successive Halving that is able to leverage information gained in previous HNAS problems with the goal of saving computational resources. We empirically demonstrate that our solution is able to drastically decrease costs while maintaining accuracy and being robust to negative transfer. Our method is significantly simpler than competing transfer learning approaches, setting a new baseline for transfer learning in HNAS.
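For orientation, the following is a minimal sketch of plain Successive Halving, the baseline procedure that the abstract says is being extended. It is not the authors' transfer-learning extension, which the abstract does not specify. The names `successive_halving`, `toy_loss`, `min_budget`, and `eta` are hypothetical and chosen only for illustration, and the objective is a toy stand-in for training a model.

```python
import random


def successive_halving(configs, evaluate, min_budget=1, eta=2):
    """Plain Successive Halving: keep the best 1/eta of the configs each round.

    `evaluate(config, budget)` returns a loss (lower is better). All names and
    defaults here are illustrative assumptions, not taken from the paper.
    """
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        # Rank every surviving configuration at the current budget.
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget))
        # Keep the top fraction and give the survivors a larger budget.
        keep = max(1, len(ranked) // eta)
        survivors = ranked[:keep]
        budget *= eta
    return survivors[0]


def toy_loss(config, budget):
    # Hypothetical objective: distance of the learning rate from 0.1,
    # plus an error term that shrinks as the training budget grows.
    return abs(config["lr"] - 0.1) + 1.0 / budget


rng = random.Random(0)
candidates = [{"lr": 10 ** rng.uniform(-4, 0)} for _ in range(16)]
best = successive_halving(candidates, toy_loss)
print(best)
```

With 16 candidates and `eta=2`, the loop runs four rounds (16, 8, 4, 2 configurations) while the budget doubles each time. A transfer-learning variant would presumably use results from earlier searches to influence which configurations are evaluated or kept, but how the paper does this is not stated in the abstract.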

Related content

A feature of iOS 8 that lets apps interact with one another and with the system.

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards
