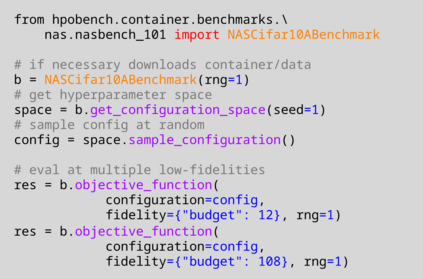

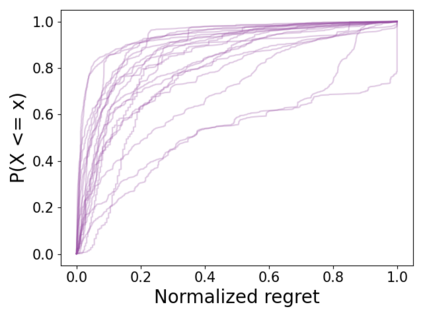

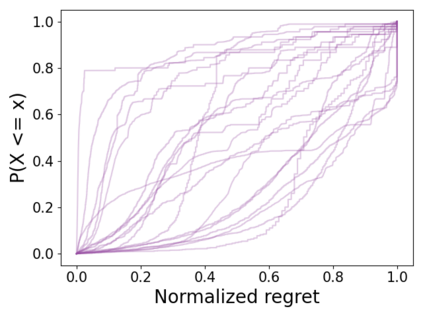

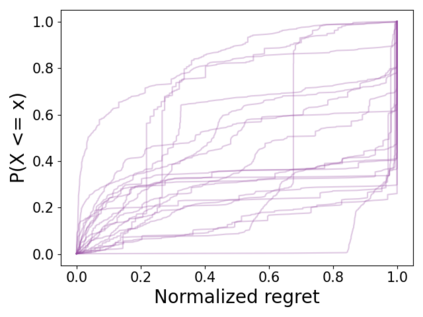

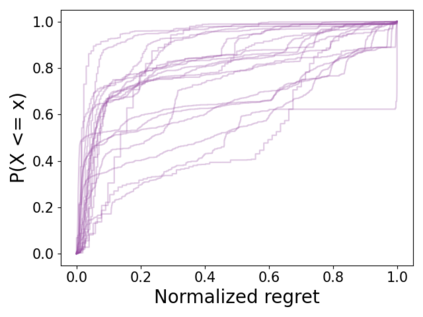

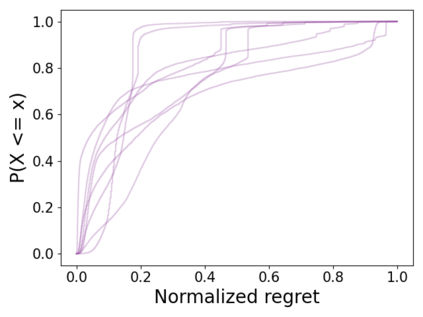

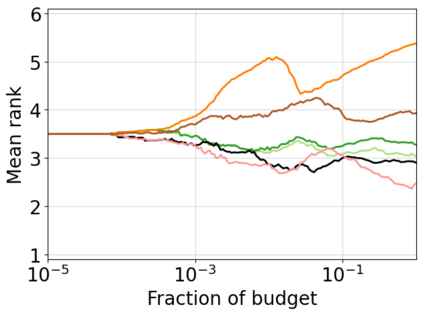

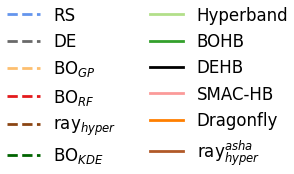

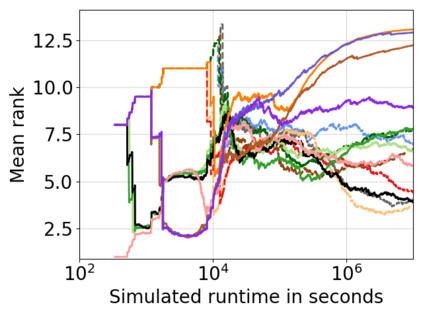

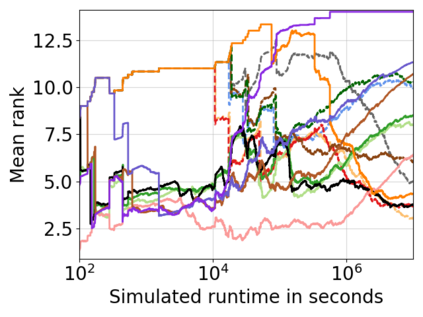

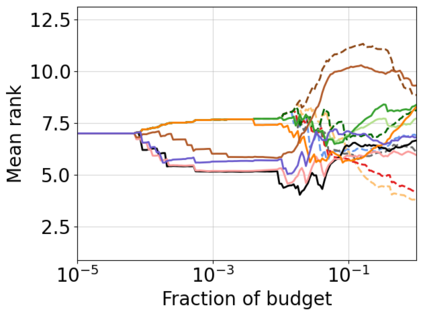

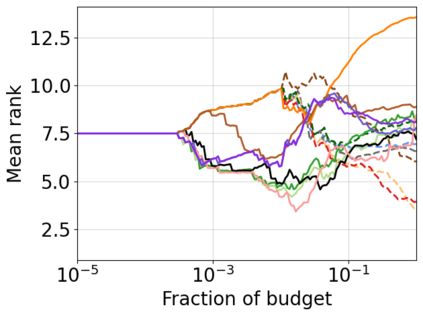

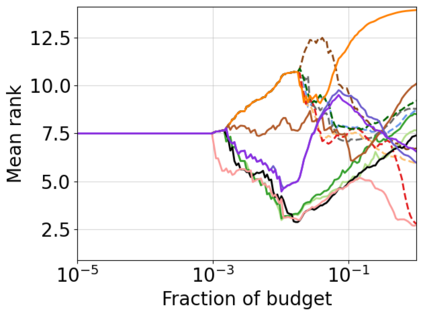

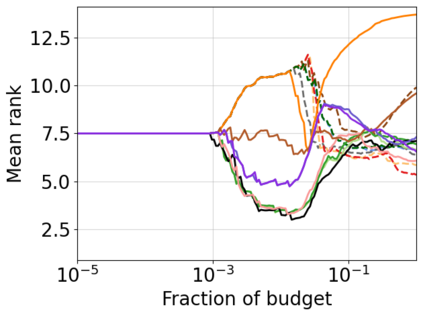

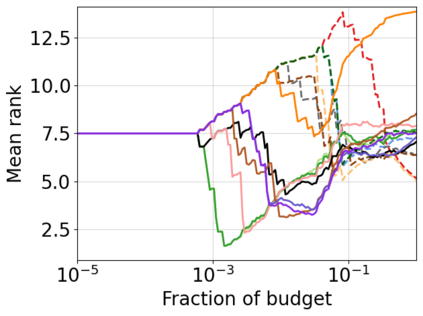

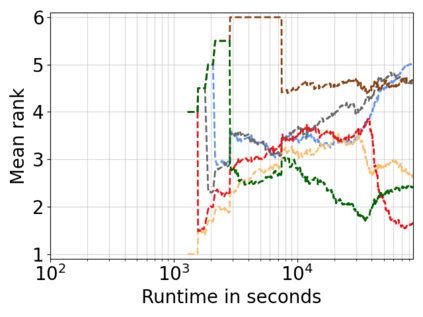

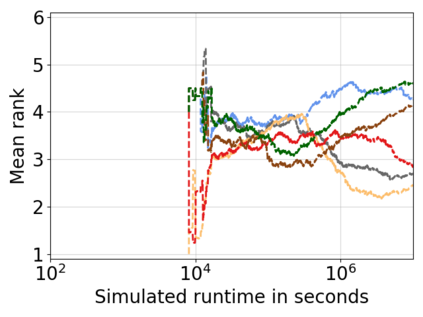

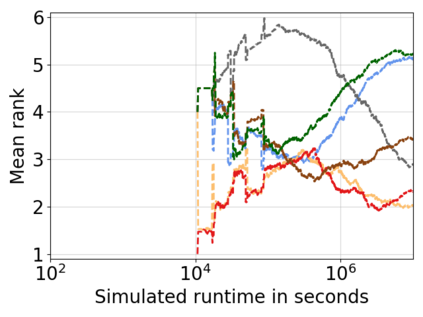

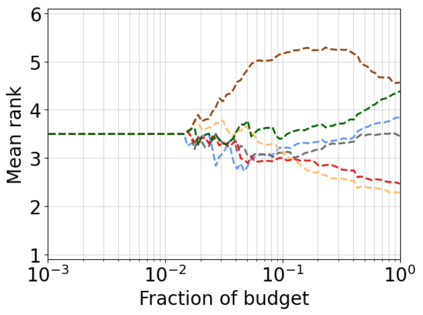

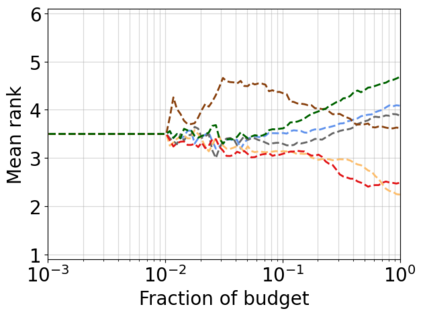

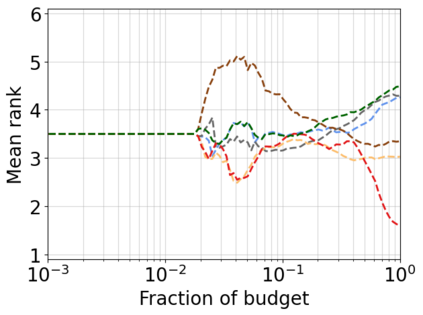

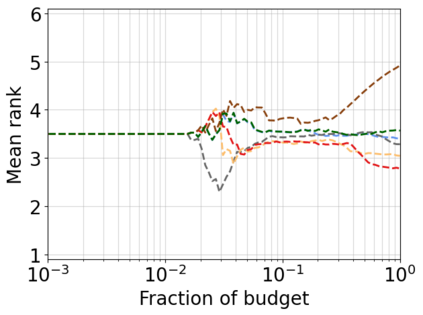

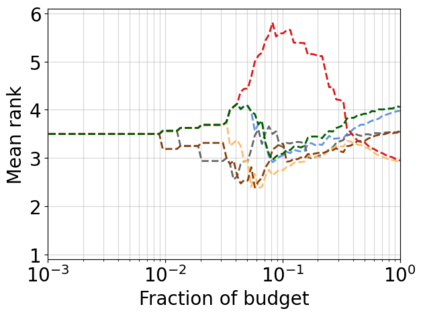

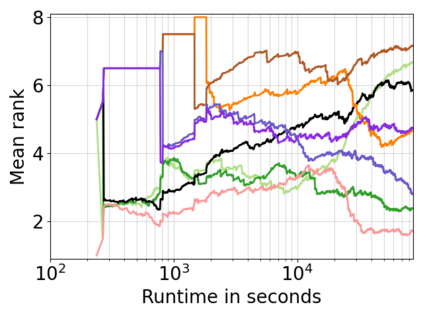

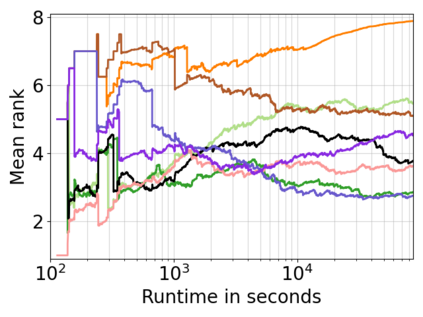

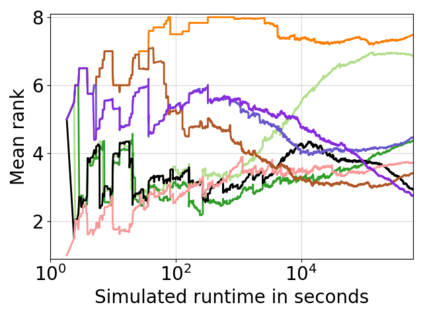

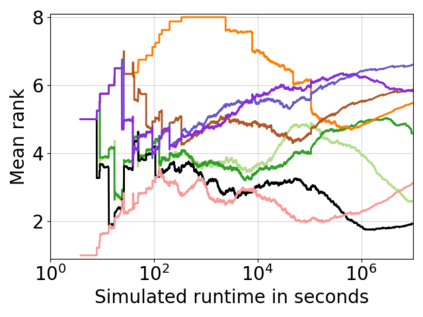

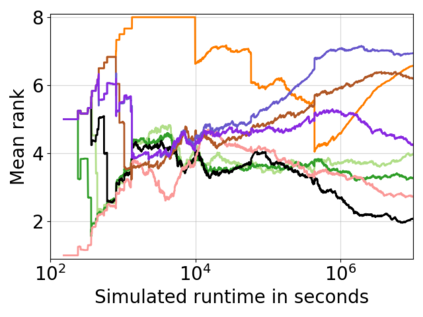

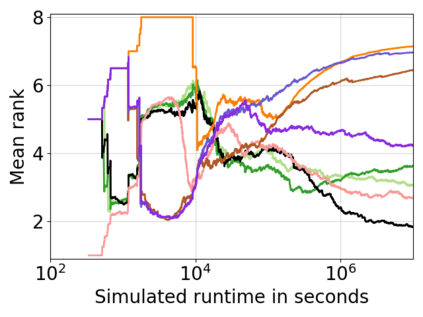

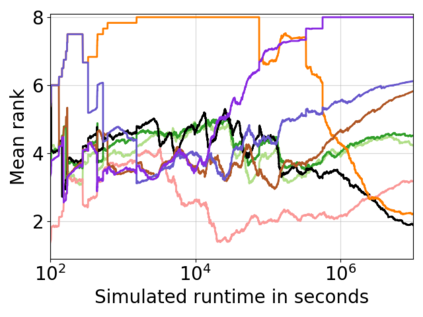

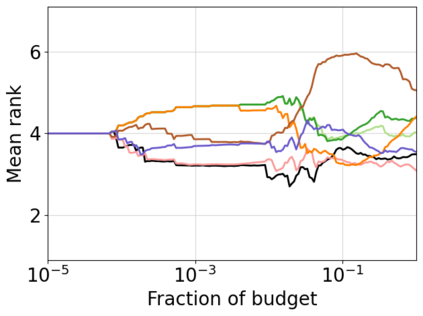

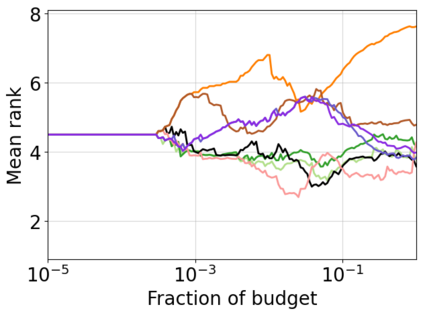

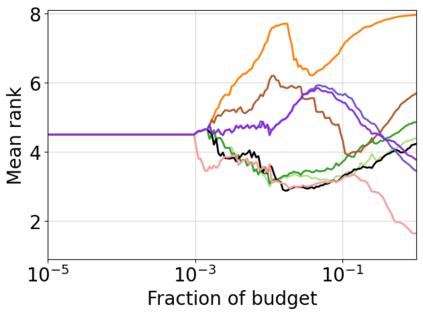

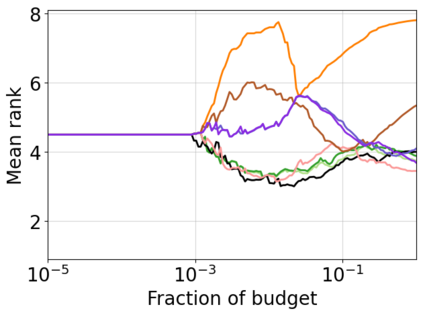

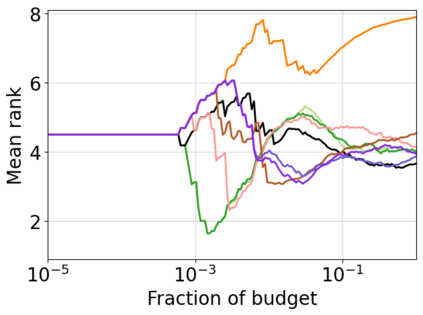

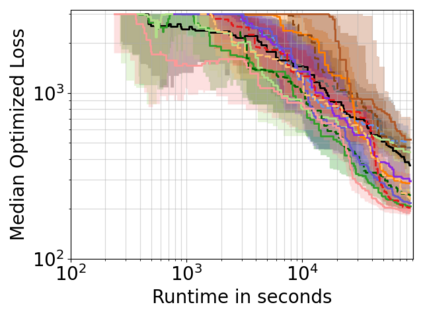

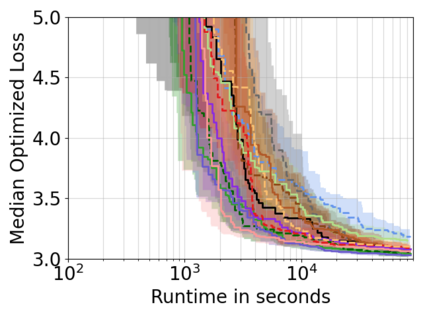

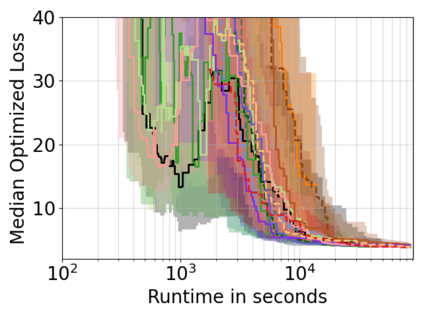

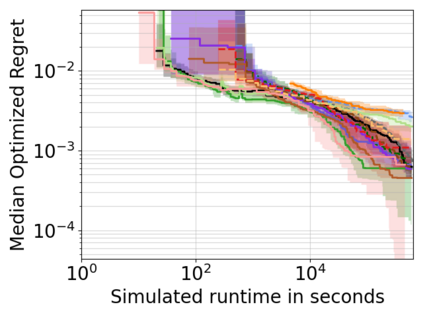

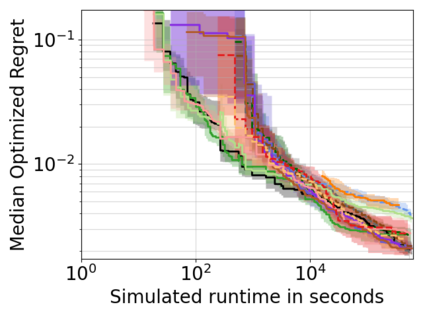

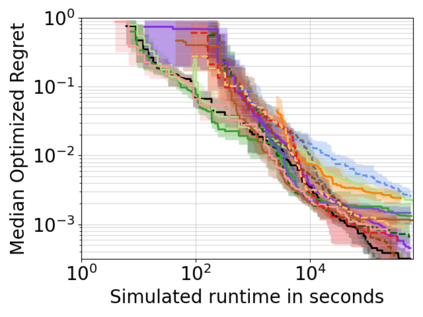

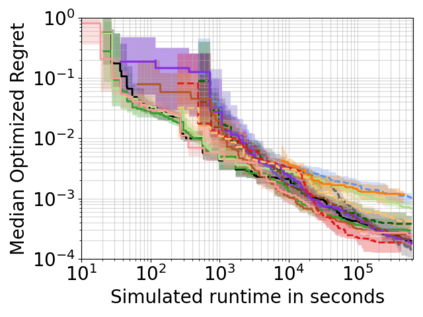

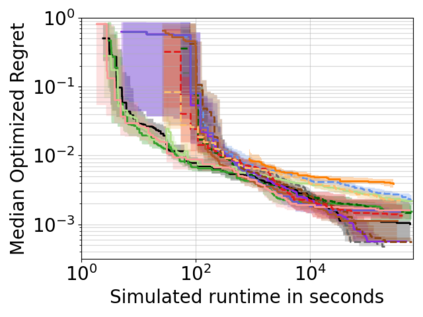

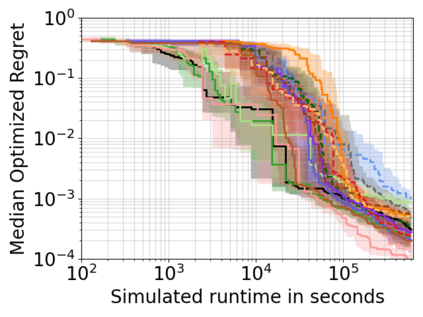

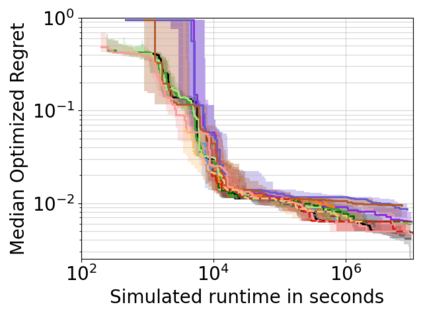

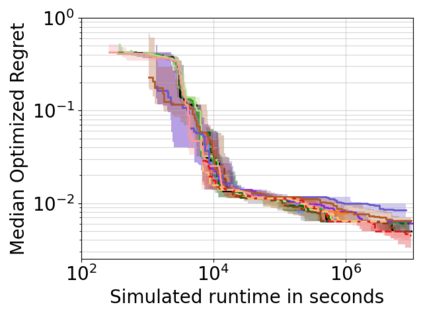

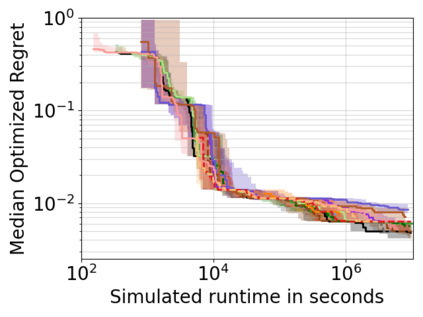

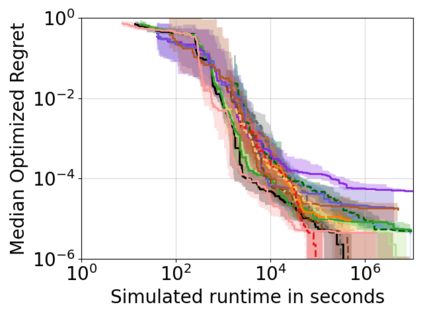

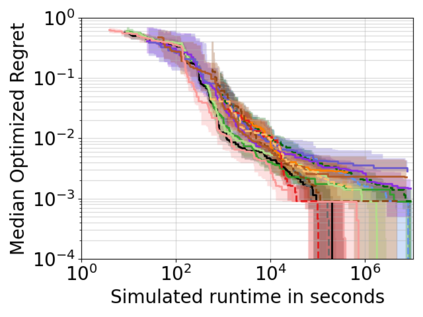

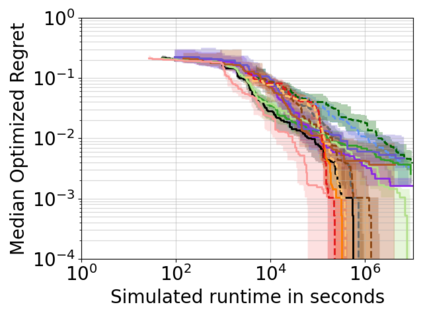

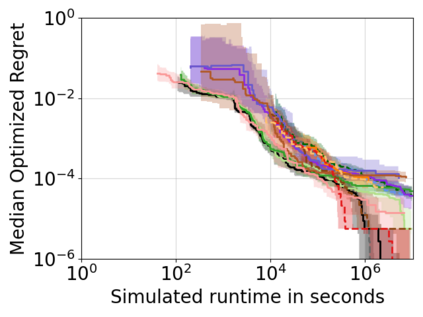

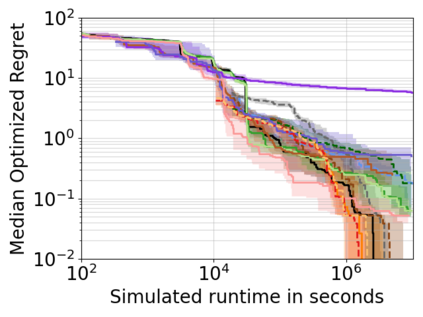

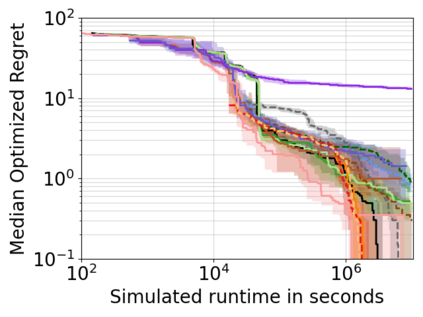

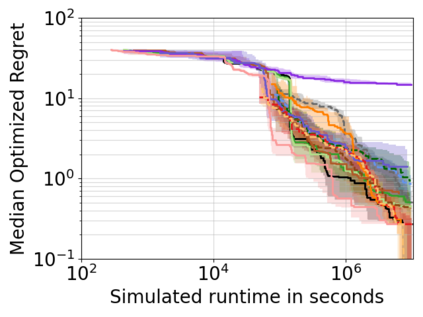

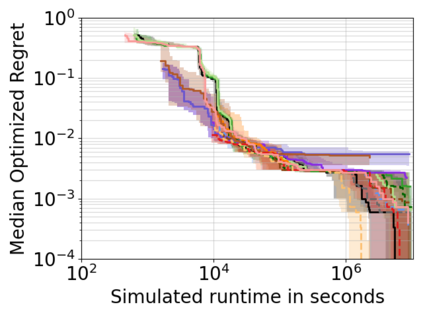

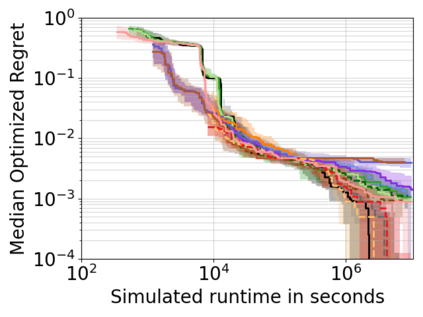

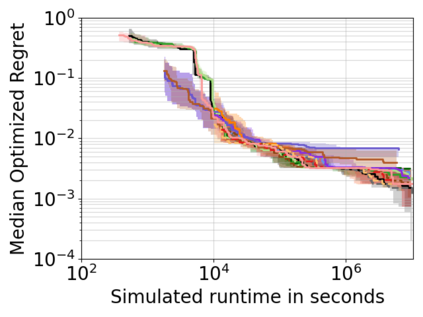

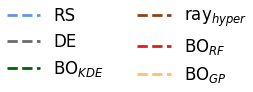

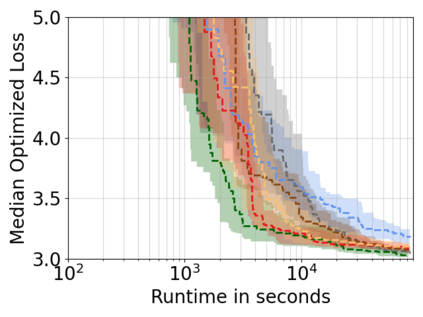

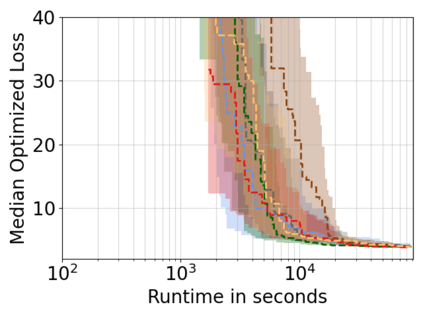

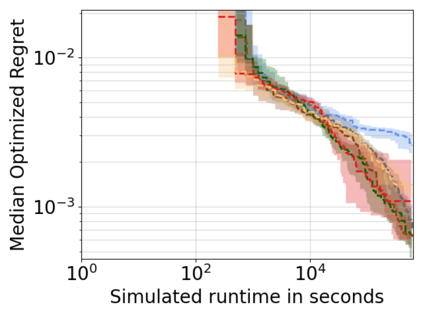

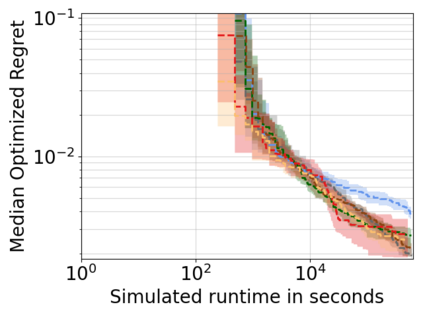

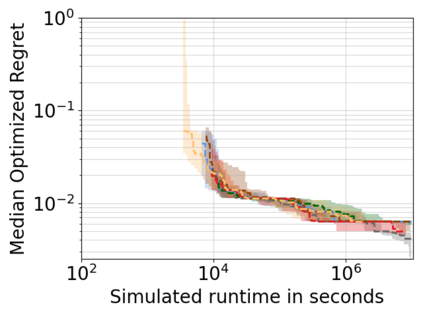

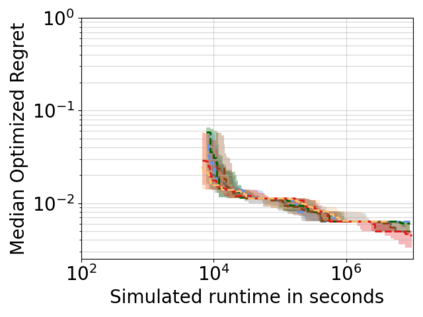

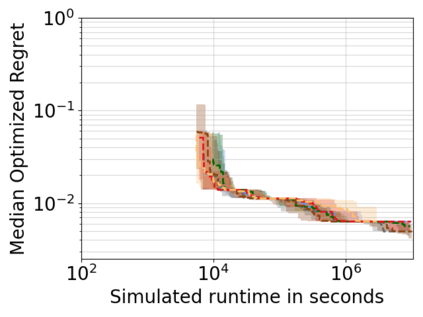

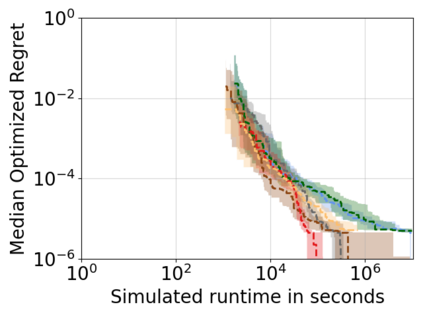

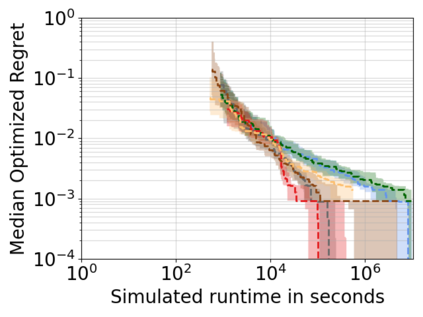

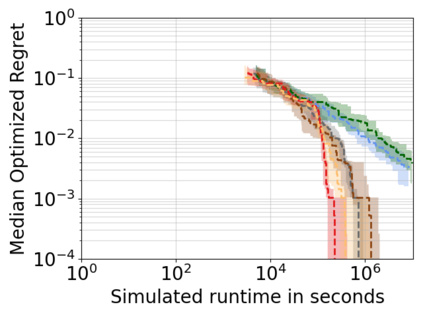

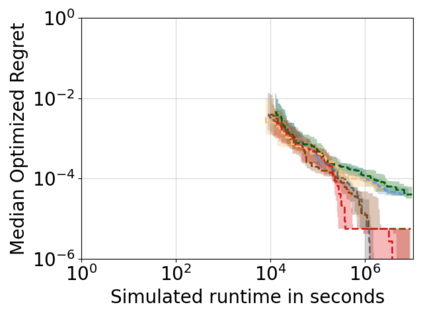

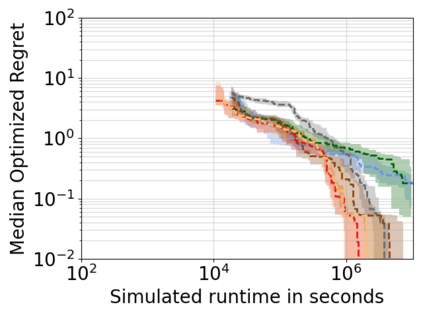

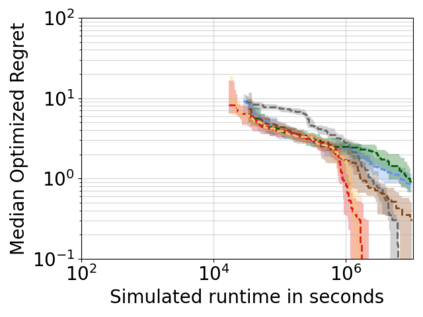

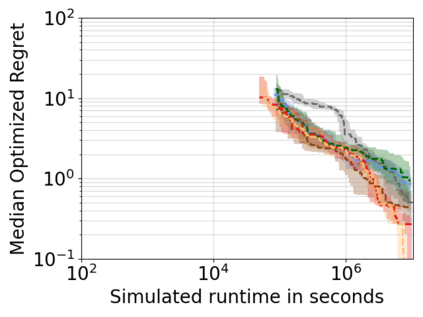

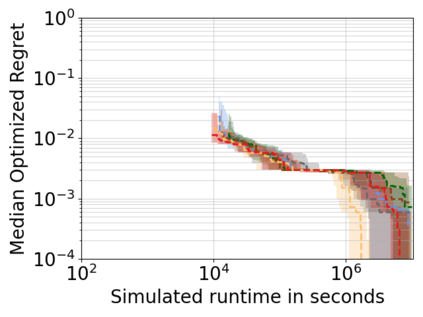

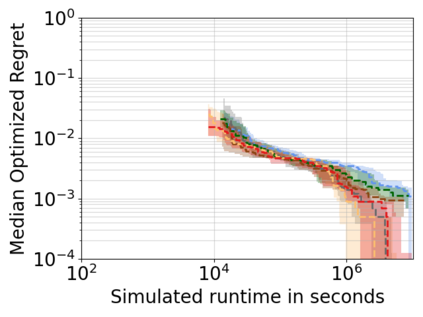

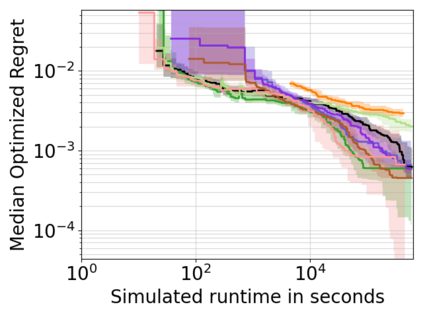

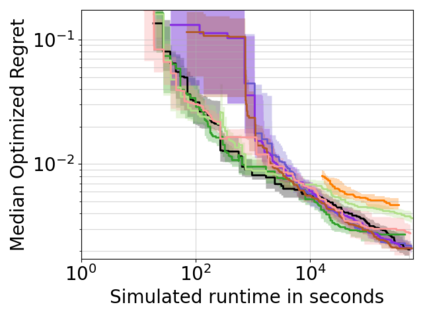

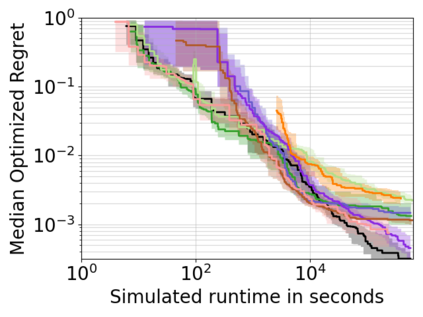

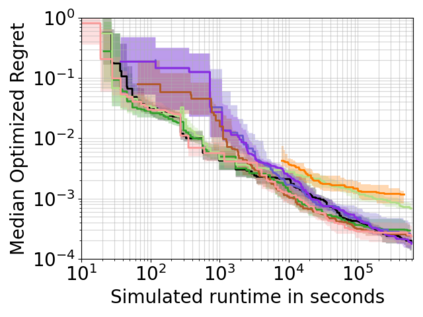

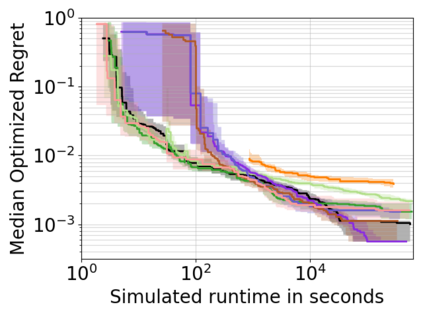

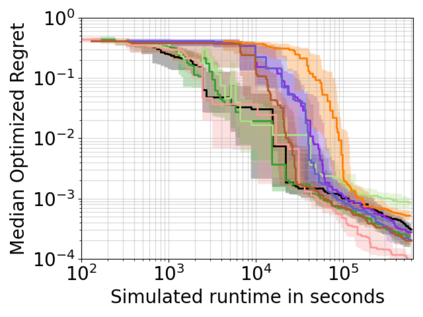

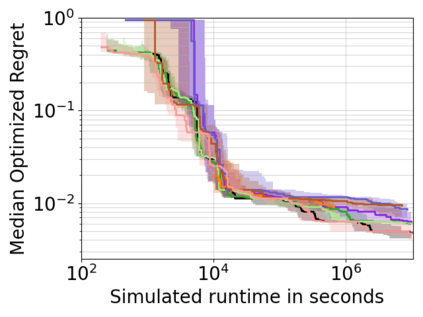

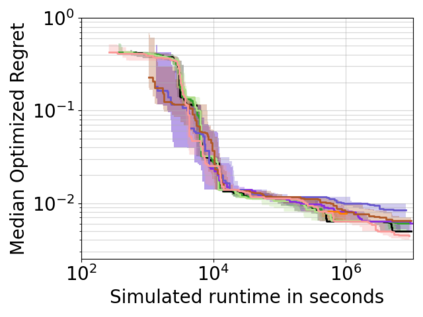

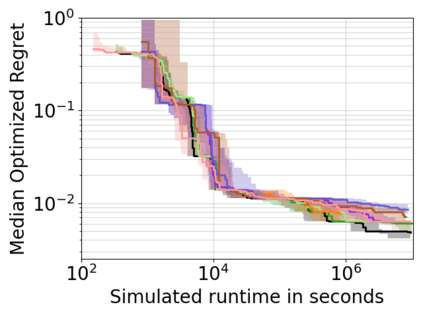

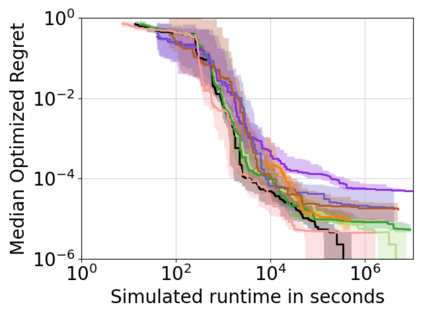

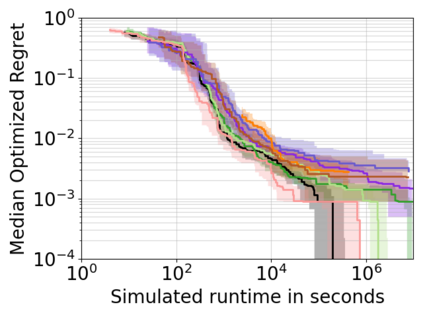

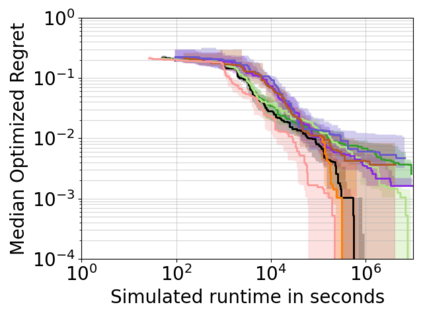

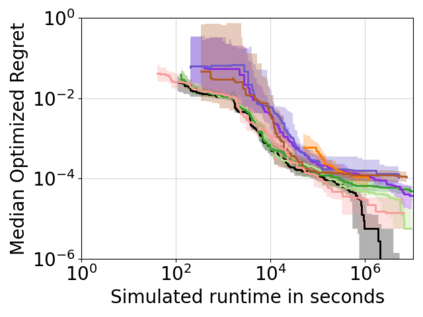

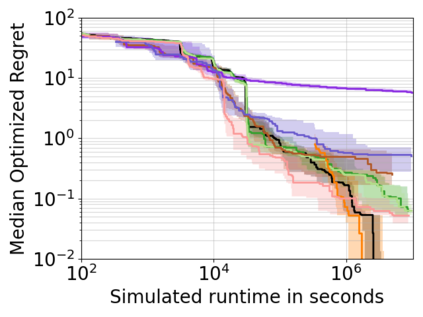

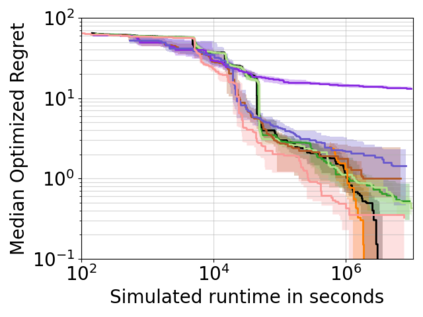

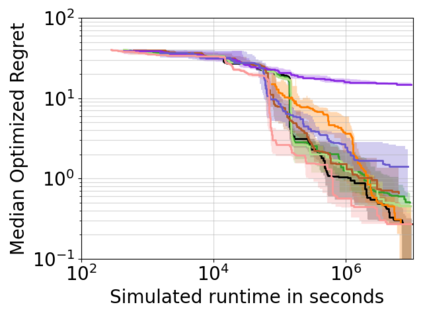

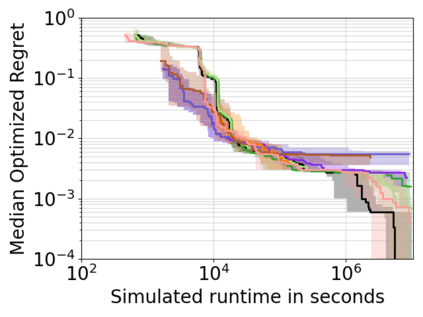

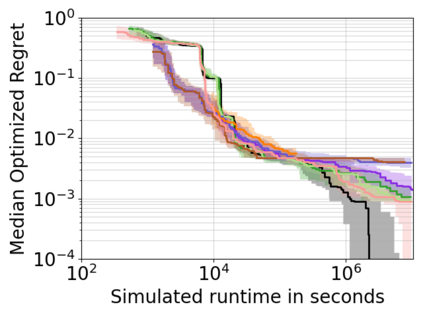

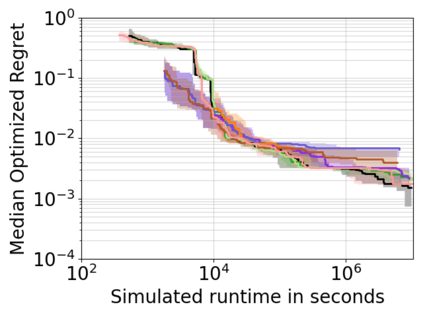

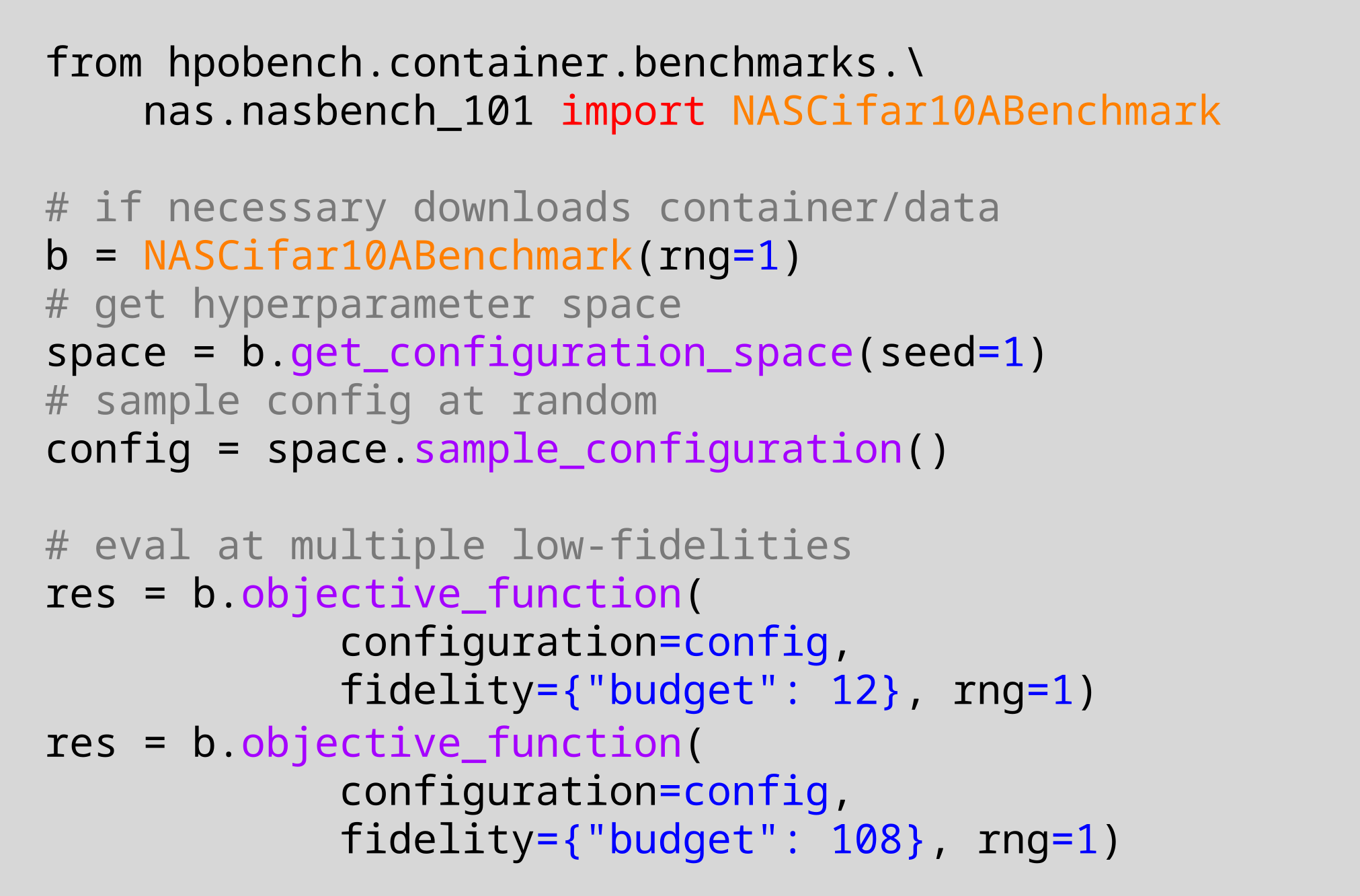

To achieve peak predictive performance, hyperparameter optimization (HPO) is a crucial component of machine learning and its applications. Over the last few years, the number of efficient algorithms and tools for HPO has grown substantially. At the same time, the community still lacks realistic, diverse, computationally cheap, and standardized benchmarks. This is especially the case for multi-fidelity HPO methods. To close this gap, we propose HPOBench, which includes 7 existing and 5 new benchmark families, with more than 100 multi-fidelity benchmark problems in total. HPOBench makes it possible to run this extendable set of multi-fidelity HPO benchmarks in a reproducible way by isolating and packaging the individual benchmarks in containers. It also provides surrogate and tabular benchmarks for computationally affordable yet statistically sound evaluations. To demonstrate the broad compatibility of HPOBench and its usefulness, we conduct an exemplary large-scale study evaluating 6 well-known multi-fidelity HPO tools.
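To make the notions of a tabular benchmark and a multi-fidelity optimizer concrete, the following is a minimal, self-contained sketch. It does not use the HPOBench API. The search space, the synthetic loss surface, and the names `TABLE`, `evaluate`, and `successive_halving` are all assumptions made for illustration. A tabular benchmark is modeled as a lookup table over (configuration, fidelity) pairs, and a simple successive-halving loop stands in for a multi-fidelity HPO method.

```python
import itertools
import math

# Hypothetical toy search space and fidelity levels (not taken from HPOBench).
LEARNING_RATES = [0.001, 0.01, 0.1, 1.0]
DEPTHS = [1, 2, 4, 8]
FIDELITIES = [1, 3, 9, 27]  # e.g. number of training epochs


def _synthetic_loss(lr, depth, epochs):
    """Made-up loss surface: lower is better, minimum at lr=0.01, depth=4."""
    base = 0.1 * (math.log10(lr) + 2) ** 2 + 0.05 * (math.log2(depth) - 2) ** 2
    return base + 1.0 / epochs  # more epochs give a lower loss


# "Tabular benchmark": every (configuration, fidelity) result is precomputed,
# so an evaluation is a dictionary lookup instead of a training run.
TABLE = {
    (lr, depth, epochs): _synthetic_loss(lr, depth, epochs)
    for lr, depth, epochs in itertools.product(LEARNING_RATES, DEPTHS, FIDELITIES)
}


def evaluate(config, fidelity):
    """Look up the stored loss; the cost is assumed proportional to the fidelity."""
    lr, depth = config
    return {"loss": TABLE[(lr, depth, fidelity)], "cost": fidelity}


def successive_halving(configs, fidelities, eta=3):
    """Evaluate all survivors at each fidelity and keep the best 1/eta of them."""
    survivors = list(configs)
    total_cost = 0
    for fidelity in fidelities:
        scored = []
        for config in survivors:
            result = evaluate(config, fidelity)
            total_cost += result["cost"]
            scored.append((result["loss"], config))
        scored.sort(key=lambda pair: pair[0])
        keep = max(1, len(scored) // eta)
        survivors = [config for _, config in scored[:keep]]
    return survivors[0], total_cost


if __name__ == "__main__":
    all_configs = list(itertools.product(LEARNING_RATES, DEPTHS))
    best, cost = successive_halving(all_configs, FIDELITIES)
    full_cost = len(all_configs) * FIDELITIES[-1]
    print("best configuration:", best)
    print("cost spent:", cost, "vs. full-fidelity grid:", full_cost)
```

Because every evaluation is a table lookup, repeated runs of an optimizer cost almost nothing, which is what makes tabular benchmarks attractive for comparing HPO methods. In this toy setup, the successive-halving loop spends fewer cost units than evaluating every configuration at the highest fidelity.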
